In this session, Seth Dobrin, IBM’s former Chief AI Officer and newly appointed President of the Responsible AI Institute, and Jai Natarajan, VP of Strategic Business Development at iMerit, share insights into best practices for deploying artificial intelligence responsibly.

Seth’s career path led him naturally to responsible AI, which is one of the critical pillars for getting AI adopted at scale. A human geneticist by training, Seth started his AI journey during graduate school and has since worked across a variety of startups and academic environments, eventually joining IBM in the early 2010s. His role at IBM involved corporate transformation projects leveraging data and AI.

He noticed first-hand that the biggest challenge in enterprise digital transformation is the trust in and adoption of AI by the people who are going to use it and the people who are going to be impacted by it. When people are faced with the deployment of a new tool, the common questions he heard were: Is the tool giving the correct answers? Do I understand how the tool works? Is the tool biased? Is the tool transparent?

Responsible AI Is Expanding

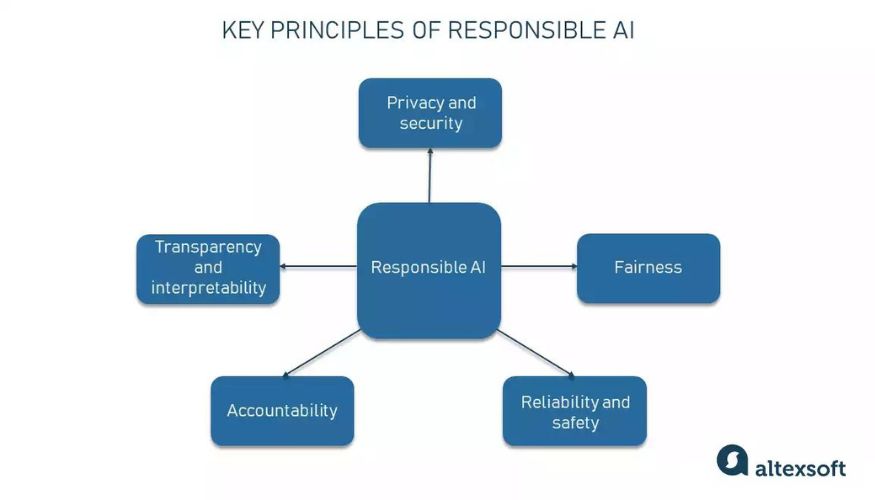

Over the past few years, Seth observed that the term ‘responsible AI’ has expanded to encompass more than just bias. Today, it also covers data, consumer protection, organizational preparedness, and organizational structure.

Depending on our stance on responsible AI, a model can either:

- Enable us to make better, fairer decisions

- Propagate poor decisions that humans have made in the past, carrying forward biased, misogynistic, racist, and non-inclusive tendencies

“AI is just math, and math is not biased.”

– Seth Dobrin

At its core, AI is just mathematics and is not inherently biased. The math learns its bias by being trained on data that reflects poor decisions humans made in the past. This bias can be injected into the model in three ways:

- Through the underlying data, such as data shaped by redlining, where specific populations were excluded from mortgages

- Through the interpretation of data, for example through model selection

- Through data selection, where the bias lives not in the data itself but in how the data is labeled and in which populations are left out
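One way to make the first of these concrete is to compare outcome rates across groups in historical decisions before training on them. The sketch below is illustrative only and is not a method described in the session: the group labels, the sample data, and the 0.8 threshold (a commonly cited rule of thumb) are all assumptions.

```python
from collections import defaultdict


def approval_rates(records):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest to the highest group approval rate (1.0 = parity)."""
    rates = approval_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was ever approved, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical, made-up historical mortgage decisions: (group, approved).
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

THRESHOLD = 0.8  # assumed rule-of-thumb cutoff, not a value from the session

ratio = disparate_impact_ratio(decisions)
flag = "review before training" if ratio < THRESHOLD else "within threshold"
print(f"disparate impact ratio: {ratio:.2f} ({flag})")
```

A check like this only surfaces disparities in recorded outcomes. It does not detect labeling bias or populations that are missing from the data altogether, which need separate review.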

The Path of AI Certifications

Validating responsible AI through industry-standard certifications, as many other industries already do, can make AI models much easier to deploy by building adoption and trust. Following in the footsteps of standards from bodies such as ISO, IEEE, and NIST, independent assessments can be used to validate that an AI system is aligned with these standards.

These assessments can take three forms:

- Self-assessments – similar to how SOC 2 is currently carried out, an internal team performs an audit to validate alignment with the standard

- 2nd party audits – a non-accredited external entity validates internal assessments against the standard

- 3rd party audits – an accredited external assessor validates conformance with the standard

To carry out these certification assessments, Seth identified six measures that can determine if the development of an AI system is responsible.

- Bias and fairness

- Transparency and explainability

- Robustness and safety

- Security

- Organizational preparedness

- Consumer protections

“Most problems worth solving with AI are not one model. They are multiple models designed to tackle different parts of the problem.”

– Seth Dobrin

Seth recommends that responsible AI assessments be carried out with every iteration. While this may mean extra effort during development, leaving the assessments until the end may only surface issues when it is too late. Assessing at each step helps identify problems early, so that only the latest iteration needs to be redone if issues creep in. This is similar to security and privacy, which are not left until the end: software development today follows security and privacy by design.
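As a rough illustration of assessing at every iteration, the sketch below gates each iteration on the six measures listed above. The function names, the pass/fail representation, and the sample assessment are hypothetical; a real assessment would involve far more than a boolean per measure.

```python
# The six measures named in the session, as hypothetical identifiers.
MEASURES = (
    "bias_and_fairness",
    "transparency_and_explainability",
    "robustness_and_safety",
    "security",
    "organizational_preparedness",
    "consumer_protections",
)


def failed_measures(assessment):
    """Return the measures that are missing from the assessment or not passed."""
    return [m for m in MEASURES if not assessment.get(m, False)]


def gate_iteration(assessment):
    """Return (ok, failed): ok is True only when every measure has passed."""
    failed = failed_measures(assessment)
    return (not failed, failed)


# Hypothetical assessment for one iteration: security has not been assessed.
iteration_3 = {
    "bias_and_fairness": True,
    "transparency_and_explainability": True,
    "robustness_and_safety": True,
    "organizational_preparedness": True,
    "consumer_protections": True,
}

ok, failed = gate_iteration(iteration_3)
if not ok:
    print("Iteration blocked; revisit:", ", ".join(failed))
```

Treating a missing measure as a failure is a deliberate choice in this sketch: an unassessed measure blocks the iteration rather than passing silently.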

Human-Centered AI

AI, at its core, should be developed and deployed to solve a human problem. Humans must be present throughout the whole lifecycle of AI. It’s important to determine who is going to use it, who is going to be impacted, and whether it is used to automate away humans or augment humans.

Whenever an AI system makes a decision that impacts people’s health, wealth, or wellbeing, we need humans in the loop. For example, a mortgage underwriter should take an AI system’s recommendation into consideration but remain in charge of the final decision. In regulated industries, when a human agent makes a decision, they are often asked to explain why they made it. When an AI makes a decision, a human in the loop has to validate upfront why the AI made that decision, which moves this validation step earlier in the decision-making process.
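The mortgage example can be sketched as a small workflow in which the model only recommends and a named human records the final decision together with a rationale. All class, field, and function names here are hypothetical, and the data is made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recommendation:
    """What the AI system proposes; it is advisory, never final."""
    applicant_id: str
    approve: bool
    reasons: List[str]


@dataclass
class Decision:
    """The final outcome, always attributed to a human reviewer."""
    applicant_id: str
    approved: bool
    decided_by: str
    rationale: str
    followed_ai: bool


def finalize(rec, reviewer, reviewer_approves, rationale):
    """Record the human's decision; a written rationale is mandatory."""
    if not rationale.strip():
        raise ValueError("a human rationale is required for every decision")
    return Decision(
        applicant_id=rec.applicant_id,
        approved=reviewer_approves,
        decided_by=reviewer,
        rationale=rationale,
        followed_ai=(reviewer_approves == rec.approve),
    )


# Hypothetical case: the underwriter overrides the model's recommendation.
rec = Recommendation("app-001", approve=True, reasons=["stable income"])
decision = finalize(rec, "underwriter-1", False, "Income documents unverified")
print(decision)
```

Recording whether the reviewer followed the AI keeps overrides visible, which could help when a regulator asks why a decision was made.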

Biggest Case for Spending on Responsible AI

“Responsible AI isn’t real unless it’s a line item on the budget.”

Seth recommends that the success of an AI project should be measured on:

- Money generation

- Money saving

- Increasing satisfaction through interaction

The Boston Consulting Group published a study asking whether companies deploying AI get better business outcomes with responsible AI. Comparing laggards with leaders, the leaders consistently said that responsible AI produces better business outcomes.

For engineers, responsible AI is critical to getting their AI systems adopted in real-world scenarios. If a system is proven to be transparent, unbiased, and safe, it is much more likely to meet little resistance during adoption.

Similarly, a responsibly built AI system is much more likely to meet regulatory requirements. Especially in industries such as finance, government, and healthcare, a non-compliant AI system will either be shut down or have to be redesigned.