Gaming Content Moderation

The #1 reason automated moderation solutions fail to keep players safe in gaming is poor training data.

Data Annotation for Gaming

Community managers rely on AI models for proactive content moderation, but most of these models are unreliable and inaccurate.

With our gaming industry expertise, experience in sentiment analysis and NLP, robust data pipelines, and best-in-class tooling, we help you curate high-quality datasets that capture in-game nuances and sensitivities, so you can build better models.

Improve ASR Models

We work with AI/ML teams across the gaming industry to customize automatic speech recognition and player behavior classification models tuned to the standards of your player communities. We simplify decision-making for your community managers when filtering out inappropriate messages, so they can spend their time where it is most needed.


Solution Highlights

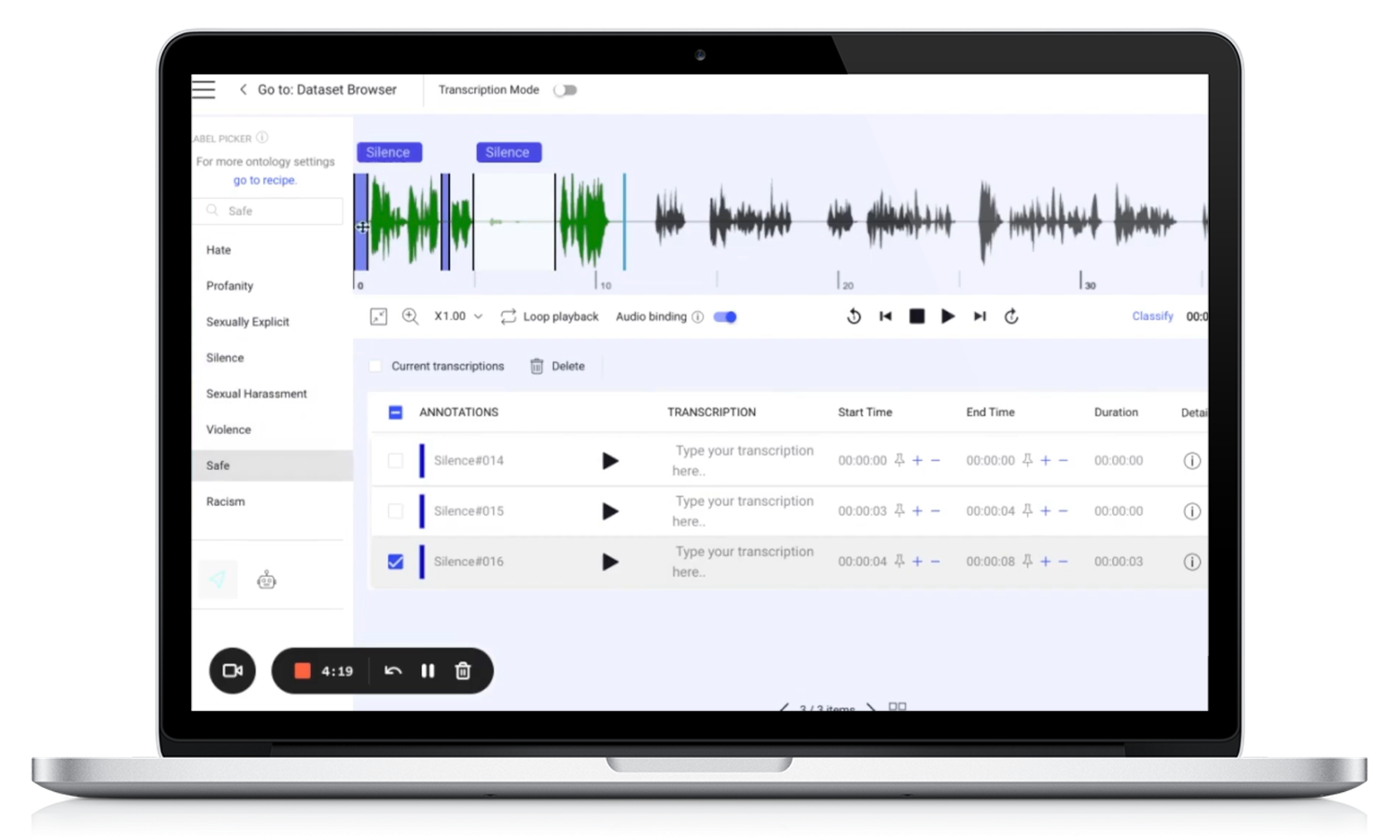

- Human-in-the-loop model validation workflows

- Fine-tuning content moderation models on contextual nuances

- Best-in-class audio studio with full transcription support

- Support for all major languages and English varieties

- Custom lexicons and language models

- Secure data transfer using round-trip cloud storage systems

- Automated data pre-processing and post-processing at scale

Case Study

Combating Toxic In-Game Behavior with iMerit and Dataloop

iMerit worked with a leading US game publisher on content moderation annotation for one of its most popular games, which has more than 150 million monthly players. Drawing on our domain expertise in gaming communities and language model development, together with the Dataloop platform, we curated high-quality datasets for the company that reflect the game's specific sensitivities.

