“Software-defined vehicles are reshaping the automotive landscape, with infotainment at the core of this transformation. AI, 5G, and cloud connectivity are driving next-gen in-car experiences, making vehicles more personalized, immersive, and responsive.” – Gartner

Imagine stepping into your car and having it greet you by name, adjust the climate to your liking, queue up your favorite playlist, and even suggest the best route based on real-time traffic. This isn’t some futuristic fantasy—it’s the reality of modern software-defined vehicles (SDVs), where infotainment is evolving from simple screens and speakers into immersive, AI-powered experiences.

Infotainment: The Smart System is Getting Smarter

The global automotive infotainment market is projected to reach $70.22 billion by 2027, but it’s not just about streaming music or maps anymore. Infotainment is now a smart, AI-powered environment, integrated with voice assistants, AR, and real-time connectivity.

From cloud-connected updates to gesture-controlled interfaces, infotainment is becoming an immersive, intelligent experience and a key part of SDVs. While infotainment is not iMerit’s core focus, it plays a supporting role in the broader autonomous vehicle ecosystem we power through AI, annotation, and multimodal model development.

AI-Powered Personalization

Modern infotainment systems use AI to personalize media, navigation, and even interior settings. These models rely on diverse datasets to understand driver preferences and behaviors, unlocking context-aware interactions.

Voice and Gesture Controls

AI-enabled voice assistants and gesture controls reduce driver distraction and create smoother interactions. Training these systems requires high-quality annotated data, especially for speech, intent recognition, and motion tracking, all areas where iMerit supports scalable data labeling at expert precision.

Vision-Language-Action Models

Multimodal AI models are starting to interpret spoken commands alongside visual and contextual cues. Powering these systems demands well-aligned annotations across audio, video, and sensor data, critical for enabling real-world performance.

Learn how vision-language-action models are enhancing autonomous mobility through AI-powered multimodal systems.

Challenges in Building AI-Powered Infotainment Systems

While the promise of intelligent infotainment is clear, developing these systems comes with several technical challenges:

- High Data Volume and Multimodal Complexity: Infotainment systems depend on continuous inputs from multiple sources such as voice, video, sensor streams, and telemetry. Training AI models to handle this data fusion accurately requires not only vast volumes of data but also meticulous labeling across formats and modalities.

- Real-Time Responsiveness: Applications like driver monitoring, voice control, and AR navigation demand near-instant processing. Misaligned or noisy training data leads to misrecognitions and repeated commands, which users experience as lag and which erode trust in the system.

- Low-Latency Model Inference on Edge Devices: Running advanced neural networks efficiently within vehicle hardware constraints (CPU/GPU limits) without compromising real-time user experience remains a key challenge.

- Managing OTA (Over-the-Air) Updates for AI Models: Ensuring safe, seamless deployment of AI model updates in infotainment systems without interrupting system stability or user interactions is essential as features evolve.

- Context-Aware Speech Recognition Under Noisy Conditions: Building models that can accurately interpret voice commands despite cabin noise, multiple speakers, or low microphone quality is a persistent challenge for infotainment AI teams.

- Fine-Tuning Multilingual NLP Models for Regional Variations: Infotainment systems deployed globally must adapt to a wide range of languages, dialects, and accents, requiring highly localized NLP models trained on diverse and nuanced datasets.

- Sensor Fusion Accuracy with Varied Cabin Configurations: Infotainment AI must perform consistently across different car models and sensor placements, making calibration and annotation alignment critical for model generalization.

- Annotating Complex User Intent from Ambiguous Commands: Commands like “play something relaxing” or “take me somewhere good” can be interpreted differently depending on user history, tone, or context, so labeling them precisely calls for context-aware annotation guidelines.

- Consistency in Annotation of Multimodal Interactions: Synchronizing annotations across voice, vision, and gesture inputs is essential for training models that respond accurately to blended commands.

- Annotation Accuracy in Speech Data with Overlapping Voices: Labeling voice data where multiple occupants are speaking, or where music or environmental noise overlaps, requires advanced QA processes to maintain training quality.
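To make the synchronization challenge above more concrete, here is a minimal Python sketch of how time-stamped annotations from different modalities might be paired when they overlap. It is an illustration only, not a description of any production pipeline: the `Segment` type, the `align_cross_modal` function, and the 0.2-second `min_overlap` default are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Segment:
    """One annotated span (hypothetical schema for illustration)."""
    modality: str  # e.g. "voice", "gesture", "vision"
    label: str
    start: float   # seconds from the start of the recording
    end: float


def overlap(a: Segment, b: Segment) -> float:
    """Return the shared duration of two segments in seconds (0.0 if disjoint)."""
    return max(0.0, min(a.end, b.end) - max(a.start, b.start))


def align_cross_modal(
    segments: List[Segment], min_overlap: float = 0.2
) -> List[Tuple[Segment, Segment]]:
    """Pair segments from different modalities that overlap long enough."""
    pairs = []
    ordered = sorted(segments, key=lambda s: s.start)
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.start >= a.end:
                break  # sorted by start time, so nothing later can overlap a
            if a.modality != b.modality and overlap(a, b) >= min_overlap:
                pairs.append((a, b))
    return pairs


segments = [
    Segment("voice", "turn it up", 1.0, 2.0),
    Segment("gesture", "point_at_screen", 1.5, 2.5),
    Segment("vision", "driver_gaze_road", 5.0, 6.0),
]
pairs = align_cross_modal(segments)
```

In this toy input, only the voice command and the pointing gesture overlap, so they form the single pair. A real workflow would also need to account for clock drift between sensors and for annotator disagreement on segment boundaries, which this sketch ignores.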

Why High-Quality Data Annotation Matters in Advancing Infotainment Systems

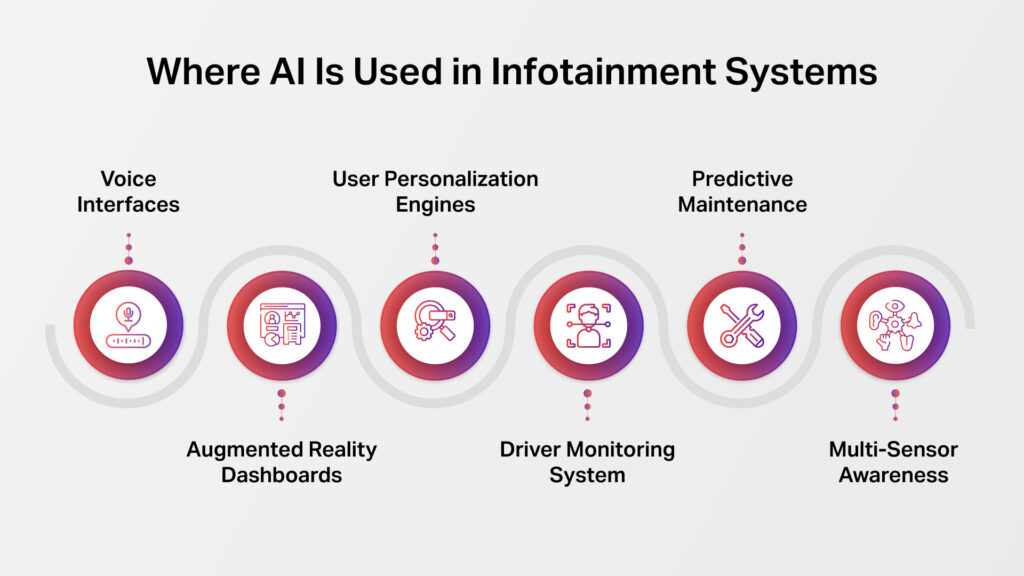

AI is increasingly embedded across infotainment systems—from voice commands and AR dashboards to personalized recommendations and real-time environmental awareness. These intelligent experiences are only possible with robust, accurately annotated data powering the machine-learning models behind them.

Where AI Is Used in Infotainment Systems

- Voice Interfaces: Natural language processing (NLP) models enable hands-free control through voice commands for navigation, media, and vehicle functions.

- Augmented Reality Dashboards: AR head-up displays (HUDs) provide real-time overlays of navigation routes, speed limits, and hazard warnings—keeping drivers informed without shifting their focus off the road.

- User Personalization Engines: AI analyzes driver behavior to adjust media, climate, seat settings, and navigation routes, creating a personalized in-cabin experience.

- Driver Monitoring System: Infotainment systems can use camera data and facial analysis to detect signs of fatigue, distraction, or drowsiness, triggering real-time alerts to keep drivers engaged and safe.

- Predictive Maintenance: AI analyzes real-time data from sensors and driving patterns to predict wear and tear of key vehicle components, like brake pads, tires, or battery health. Infotainment systems then display timely maintenance alerts directly on the dashboard, helping drivers take proactive action and avoid breakdowns.

- Multi-Sensor Awareness: Infotainment systems increasingly tap into camera, LiDAR, and radar data to support context-aware recommendations, passenger detection, or real-time safety alerts.

These capabilities are foundational to in-cabin sensing applications such as driver monitoring, occupancy detection, and behavioral analysis. Explore iMerit’s in-cabin sensing solutions to see how high-quality data powers intelligent in-vehicle systems.

Why Data Annotation Matters

Training Voice Assistants

AI models for voice recognition must be trained on large, diverse datasets that capture a range of accents, languages, and commands. Inaccurate or biased annotations can lead to poor command recognition and user frustration. iMerit supports multilingual voice data labeling at scale to improve model accuracy and responsiveness.
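One common way to catch inconsistent labels before they reach training is to measure agreement between two annotators on the same utterances. The sketch below computes Cohen's kappa for intent labels in plain Python. It is a generic illustration rather than a description of iMerit's QA process, and the function name and the example intent labels (`nav`, `media`, `climate`) are assumptions made for this example.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


annotator_1 = ["nav", "media", "media", "climate"]
annotator_2 = ["nav", "media", "climate", "climate"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

A kappa near 1 indicates strong agreement, while values near 0 suggest the annotators agree no more often than chance. Low scores on a particular accent or language subset can point to guidelines that need tightening before more data is labeled.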

Powering AR Dashboards

AR HUDs rely on precisely labeled visual data like lane markings, signage, and objects to project accurate, real-time overlays. Even minor annotation errors can lead to incorrect navigation cues. iMerit’s expertise in pixel-level image annotation ensures reliability for real-world use. These dashboards can also display AI-generated insights, like wear and tear of parts or upcoming maintenance needs, directly within the driver’s field of view, making system health more accessible and actionable.

Multi-Sensor Fusion in Infotainment

Modern infotainment systems use multi-sensor fusion to power in-cabin awareness and personalization.

For example, combining data from cameras, LiDAR, and radar enables the system to monitor driver attention, detect passenger presence, and adjust settings like climate or media based on seat occupancy.

It can also trigger alerts, such as warning the driver of an approaching vehicle or detecting if a child has been left in the back seat. These same sensor inputs support AR navigation overlays by aligning depth and visual data to render accurate visuals on head-up displays (HUDs). Accurate, synchronized annotation of these multimodal streams is essential for these features to function reliably. iMerit provides high-quality data labeling to support advanced sensor fusion in infotainment applications.
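As a rough illustration of how occupancy evidence from several sensors could be combined, the sketch below uses noisy-OR fusion: the seat is treated as occupied unless every sensor independently misses the occupant. This is a simplified assumption, not how any particular vehicle or vendor implements fusion. The function names, sensor keys, and the 0.7 alert threshold are hypothetical, and real systems would need calibrated sensor models rather than an independence assumption.

```python
from math import prod
from typing import Dict


def fuse_occupancy(sensor_probs: Dict[str, float]) -> float:
    """Noisy-OR fusion of per-sensor occupancy probabilities.

    Assumes the sensors err independently, which is a simplification.
    """
    for name, p in sensor_probs.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name}: probability out of range: {p}")
    # Probability that at least one sensor's detection is correct.
    return 1.0 - prod(1.0 - p for p in sensor_probs.values())


def rear_seat_alert(
    sensor_probs: Dict[str, float], ignition_off: bool, threshold: float = 0.7
) -> bool:
    """Raise an alert if the car is off and the rear seat still looks occupied."""
    return ignition_off and fuse_occupancy(sensor_probs) >= threshold


readings = {"camera": 0.6, "radar": 0.5}  # hypothetical per-sensor confidences
fused = fuse_occupancy(readings)
alert = rear_seat_alert(readings, ignition_off=True)
```

Neither sensor alone clears the threshold here, but the fused estimate does, which is the basic argument for fusion. It is also why the per-sensor labels used to train and evaluate each detector need to refer to the same moment in time.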

Improving Personalization Algorithms

AI-driven personalization in infotainment depends on well-structured data from user behavior analytics, driving patterns, and contextual inputs. High-quality labeled data allows models to predict and adapt to user preferences more effectively.

Without accurate data annotation, infotainment systems may struggle with voice recognition errors, unreliable AR projections, and inconsistent personalization, ultimately diminishing the user experience.

How iMerit Helps Build Smarter Infotainment Systems

At iMerit, automotive AI data annotation services are designed to support the development of intelligent, next-generation infotainment systems.

Core areas of expertise include:

- Speech and NLP Data Annotation: We annotate diverse voice datasets to train AI-powered assistants in NLP, enabling seamless voice interactions, intent recognition, and multilingual command understanding.

- Computer Vision Annotation: From object detection to spatial mapping, our high-quality annotations support AR dashboards and head-up displays, ensuring accurate overlays for navigation, alerts, and contextual content.

- Multi-Sensor Fusion Data Labeling: We provide synchronized labeling across inputs from LiDAR, cameras, and radar to help infotainment systems interpret in-cabin activity and surrounding environments with greater intelligence.

As software-defined vehicles continue to reshape mobility, high-quality annotated data will be essential in delivering the personalized, connected, and immersive infotainment experiences that define the future of driving.

Connect with iMerit to explore how our data expertise can support your automotive AI innovation.