From autonomous tractors combing through farmlands to robotic arms performing millimeter-precise movements on factory floors, robots are getting smarter, faster, and more reliable. But what powers their intelligence isn’t just better hardware or software. It’s data. And not just any data: high-volume, multimodal, deeply contextual data that has been meticulously annotated.

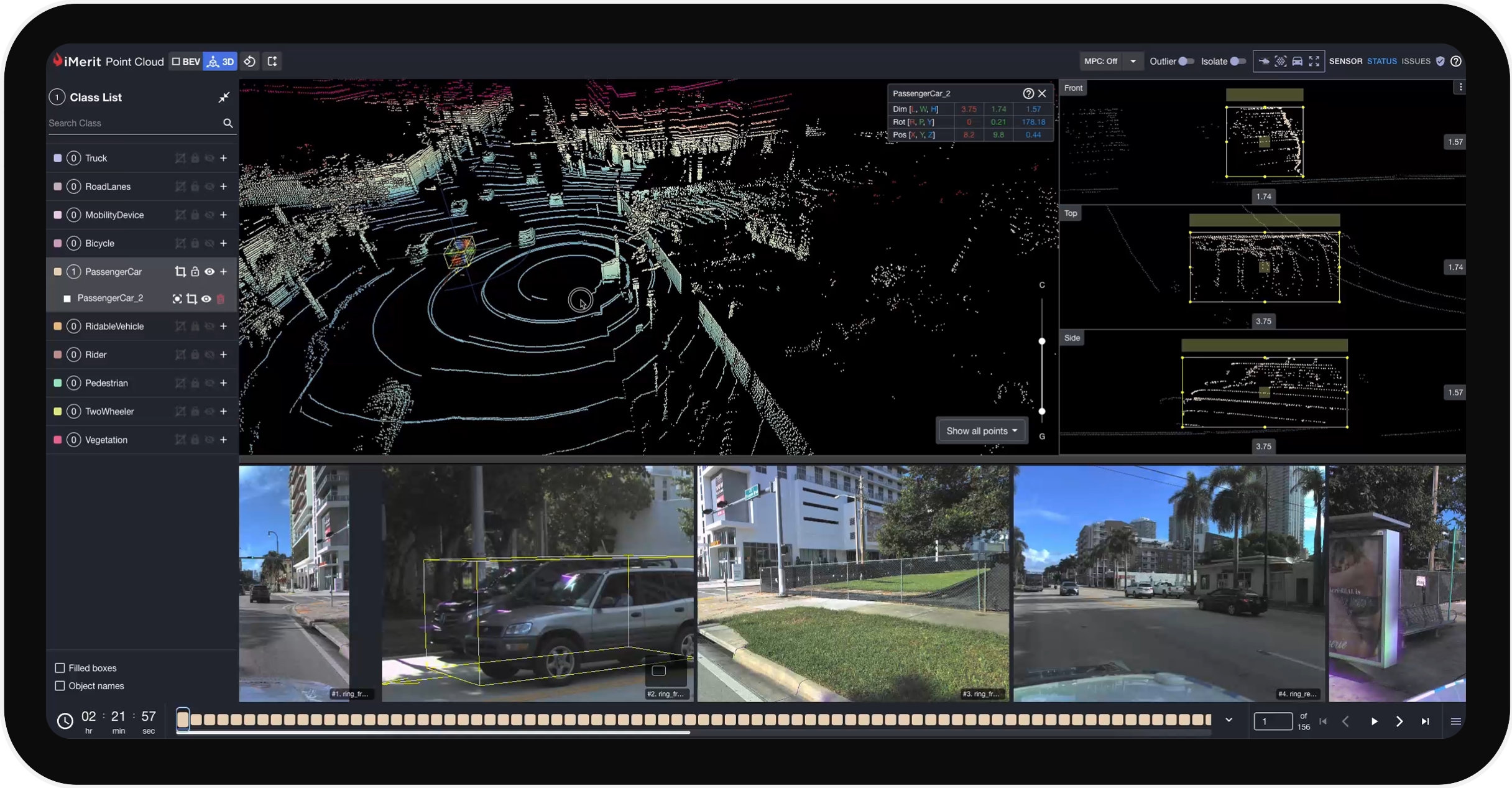

In robotics, annotation goes far beyond bounding boxes. It means synchronizing LiDAR scans with camera feeds, tracking object interactions across time, and adapting to every domain—whether it’s dusty orchards or high-glare industrial zones. Accuracy isn’t optional; it’s mission-critical.

Let’s break down the unique complexities of robotics data annotation, the specialized workflows and tools rising to meet them, and how iMerit powers next-gen robotics with scalable, domain-specific annotation workflows built for multimodal data.

Why Robotics Needs Specialized Data Annotation

Robots operate in dynamic, often unpredictable environments. Whether navigating a crowded warehouse or identifying crop maturity in a vineyard, they rely on multiple data inputs—RGB imagery, LiDAR, IMU, radar, and more. For machine learning models to interpret this data correctly, it must be accurately annotated.

Some key use cases include:

- Object Detection & Tracking: For obstacle avoidance, item handling, and navigation

- Semantic Segmentation: To understand environments by differentiating regions such as floors, shelves, walkways, machinery, vegetation, and people

- Pose Estimation: For accurate manipulation or navigation tasks, ensuring robotic arms or mobile units understand spatial orientation

- SLAM (Simultaneous Localization and Mapping): Mapping environments in real time for autonomous navigation

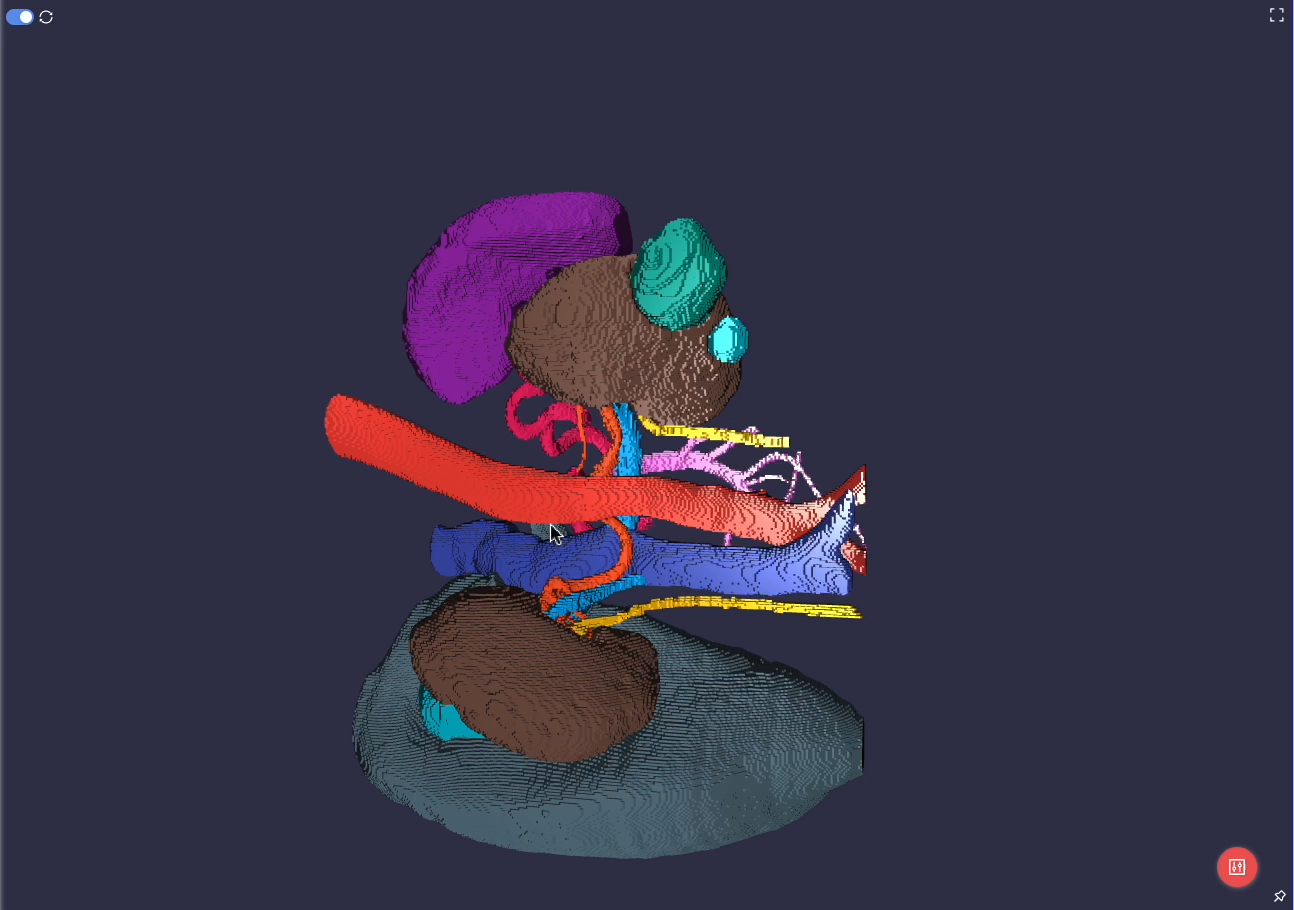

- Medical Robotics Annotation: Robots in surgical settings rely on annotated 3D point clouds, video, and medical images to track tools, recognize gestures, and navigate anatomical landmarks for precision and safety.

- Household Robotics Annotation: From LiDAR mapping to instance segmentation, annotated data helps home robots navigate cluttered spaces, recognize human activities, and interact safely with unpredictable environments.

Without accurately labeled training data, robots risk misjudging their surroundings, leading to performance issues, safety hazards, or operational inefficiencies.

The Challenge of Annotating Multimodal Robotics Data

1. Sensor Fusion Requires Precise Synchronization

Robots rely on multimodal inputs like LiDAR, cameras, and IMUs. Annotating these streams demands spatial and temporal alignment—every frame must match across modalities. Even slight mismatches between a LiDAR scan and a camera frame can result in inaccurate labels and model confusion.
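To make the temporal side of this concrete, here is a minimal sketch of nearest-timestamp matching between LiDAR scans and camera frames. The function name `match_nearest`, the 50 ms tolerance, and the sample timestamps are all illustrative assumptions, not part of any particular toolchain; real pipelines may also need to correct for clock drift and sensor latency.

```python
from bisect import bisect_left


def match_nearest(lidar_ts, camera_ts, max_gap=0.05):
    """Pair each LiDAR timestamp with the nearest camera timestamp.

    Both lists hold seconds, and camera_ts must be sorted ascending.
    Returns (lidar_index, camera_index) pairs. Scans with no camera
    frame within max_gap seconds are skipped rather than paired badly.
    """
    pairs = []
    for i, t in enumerate(lidar_ts):
        pos = bisect_left(camera_ts, t)
        # Candidates: the frame just before t and the frame at or after t.
        candidates = [j for j in (pos - 1, pos) if 0 <= j < len(camera_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(camera_ts[j] - t))
        if abs(camera_ts[best] - t) <= max_gap:
            pairs.append((i, best))
    return pairs


# Hypothetical timestamps: 10 Hz LiDAR and an irregular camera stream.
lidar = [0.00, 0.10, 0.20, 0.30]
camera = [0.01, 0.04, 0.08, 0.11, 0.14, 0.18, 0.40]
print(match_nearest(lidar, camera))  # [(0, 0), (1, 3), (2, 5)]
```

The last scan (0.30 s) is dropped because its closest camera frame is 100 ms away. Skipping an unmatched scan is usually safer than labeling it against the wrong image.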

2. Complexity of 3D Annotation

3D data, especially point clouds, adds depth, occlusion, and motion into the mix—factors absent in 2D annotation. Drawing accurate 3D bounding boxes or performing segmentation requires advanced tools and a spatial understanding of the environment.
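As a rough illustration of what a 3D bounding box label involves, the sketch below counts how many points of a cloud fall inside a box rotated around the vertical axis. The box parameterization (center, size, yaw) is a common convention but an assumption here, and `points_in_box` is a hypothetical helper rather than a function from any annotation platform.

```python
import math


def points_in_box(points, center, size, yaw):
    """Count points inside a 3D box rotated by yaw around the z-axis.

    points: iterable of (x, y, z); center: (cx, cy, cz);
    size: (length, width, height); yaw: rotation in radians.
    """
    cx, cy, cz = center
    length, width, height = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    count = 0
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate the offset into the box frame (inverse yaw rotation).
        local_x = cos_y * dx + sin_y * dy
        local_y = -sin_y * dx + cos_y * dy
        if (abs(local_x) <= length / 2 and abs(local_y) <= width / 2
                and abs(dz) <= height / 2):
            count += 1
    return count


# Hypothetical 4 m x 2 m x 2 m box whose length runs along the y-axis.
cloud = [(0.0, 1.5, 0.0), (1.5, 0.0, 0.0), (0.0, 0.0, 1.5), (0.5, -0.8, 0.5)]
print(points_in_box(cloud, (0, 0, 0), (4, 2, 2), math.pi / 2))  # 2
```

A check like this can serve as a simple quality gate: a box that contains no points, or far fewer than expected for its class, is worth a second look.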

3. Scaling Across Massive Volumes of Multimodal Data

From pilot runs to real-world deployments, robots generate enormous data volumes. Managing and annotating this data at scale, while maintaining quality and consistency across modalities, is a significant operational challenge.

4. Domain-Specific Labeling Standards

Annotation guidelines vary by environment. For example, a robot in an orchard will encounter organic, irregular shapes, while a factory robot navigates structured metal objects. Each domain brings distinct object classes, behaviors, and contextual cues.

5. Environmental Variability Demands Flexible Annotation

Robots must perceive accurately when lighting, weather, and object arrangements change. Annotation teams need to adapt to this variability, ensuring consistent labels across scenarios like low-light warehouses or reflective industrial zones.

6. Multimodal Perception Increases Annotation Complexity

Combining 2D and 3D data improves robotic perception but complicates annotation. Annotators must ensure labels are coherent across modalities, requiring not just technical expertise but also a unified view of how robots interpret their environment.

New Approaches and Tools Solving the Complexity

1. Human-in-the-Loop (HITL) Workflows

Rather than relying solely on automation, many robotics teams now adopt HITL workflows, a collaborative loop where AI does the initial labeling and human annotators validate and refine the outputs. This approach is especially useful for edge cases like partial occlusions or object overlaps.

Example: In warehouse automation, HITL workflows are used to fine-tune labels for forklifts navigating tight spaces, where overlapping sensors may cause ambiguity in raw outputs.
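One simple way to picture the HITL loop is confidence-based routing: model pre-labels above a threshold are accepted, and the rest go to a human review queue. The sketch below assumes each pre-label carries a confidence score; the `PreLabel` class, the 0.85 threshold, and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    object_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_for_review(prelabels, threshold=0.85):
    """Split model pre-labels into auto-accepted and human-review queues."""
    accepted, review = [], []
    for item in prelabels:
        (accepted if item.confidence >= threshold else review).append(item)
    # Show annotators the least certain items first.
    review.sort(key=lambda item: item.confidence)
    return accepted, review


# Hypothetical pre-labels from a warehouse scene.
items = [
    PreLabel("a", "forklift", 0.95),
    PreLabel("b", "pallet", 0.60),
    PreLabel("c", "forklift", 0.40),  # e.g. partially occluded
]
accepted, review = route_for_review(items)
print([i.object_id for i in accepted])  # ['a']
print([i.object_id for i in review])    # ['c', 'b']
```

In practice the threshold is a tuning choice, and many teams also sample a share of the accepted labels for spot checks, since a high confidence score does not guarantee a correct label.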

2. Generative AI and Foundation Models for Pre-Annotation

Foundation models like GPT-4, LLaVA, or SAM are now being integrated into annotation pipelines. These models generate candidate labels from visual or language cues, accelerating annotation while human review preserves accuracy.

Use case: Labeling semantic actions in a robotic arm’s picking sequence using natural language inputs. GenAI models can interpret instruction logs and match them with visual cues, improving contextual labeling.

3. Advanced 3D Annotation Tools

3D annotation platforms are becoming more interactive and intuitive, offering tools like:

- Smart brushes for segmentation

- Real-time 3D visualization and zoom

- Multi-sensor overlays

- Temporal annotation for video sequences

These tools reduce manual effort and improve annotation precision across time-series and 3D datasets.

4. Multi-Sensor Annotation Platforms

The latest platforms support multi-sensor annotations, letting annotators view and label data from multiple synchronized streams at once. This is particularly useful for mobile robots and AMRs (autonomous mobile robots) that rely on fused RGB + LiDAR input for object detection and path planning.
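Overlaying LiDAR on a camera image typically comes down to projecting 3D points into pixel coordinates. The sketch below uses an ideal pinhole camera model and assumes the points have already been transformed into the camera frame using the sensor extrinsics. Lens distortion is ignored, and the intrinsics shown (`fx`, `fy`, `cx`, `cy`) are made-up values for illustration.

```python
def project_to_image(points_cam, fx, fy, cx, cy, width, height):
    """Project 3D points (camera frame, z forward, metres) to pixels.

    Returns one entry per point: (u, v) if the point lands inside the
    image, or None if it is behind the camera or outside the frame.
    """
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:  # behind the camera or on its plane: not visible
            pixels.append(None)
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        inside = 0 <= u < width and 0 <= v < height
        pixels.append((u, v) if inside else None)
    return pixels


# Hypothetical intrinsics for a 640x480 camera.
points = [(0, 0, 5), (1, 0, 5), (0, 0, -1), (10, 0, 1)]
print(project_to_image(points, 500, 500, 320, 240, 640, 480))
# [(320.0, 240.0), (420.0, 240.0), None, None]
```

With a projection like this, a label drawn in the point cloud can be displayed on the matching camera frame, which helps annotators confirm that both modalities agree.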

How iMerit Addresses These Challenges

At iMerit, we bring together domain-trained annotation specialists, advanced tools, and a human-in-the-loop model to ensure both scalability and quality.

Here’s how we approach robotics annotation:

Human-in-the-Loop + Automation

We combine machine learning-based pre-labeling with human validation and refinement to deliver accurate annotations. This combination is especially valuable for complex 3D, multimodal, or motion-based data.

Domain-Specific Annotation Teams

Our teams are trained in the language of robotics. Whether it’s identifying ripeness levels in fruit or segmenting a robotic gripper’s interaction with objects, annotators apply domain awareness to their tasks.

Specialized Tools for Robotics Annotation

iMerit supports annotation across image, video, 3D point cloud, and sensor fusion data using tools designed for robotics-specific workflows, enabling:

- 3D Bounding Boxes and semantic segmentation for spatial awareness

- Instance-level tracking across video frames for continuous monitoring of objects

- Interpolation and temporal smoothing to maintain annotation accuracy across high-frame-rate sensor data

- Brush tools and polygon masks for intricate object-level segmentation
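To illustrate the interpolation item in the list above, here is a minimal sketch of linear interpolation between two annotated keyframes, so that annotators only label selected frames by hand. It is a generic illustration rather than a description of iMerit's tooling; `interpolate_box` and the box format `(x, y, w, h)` are assumptions, and temporal smoothing is not shown.

```python
def interpolate_box(key_a, key_b, frame):
    """Linearly interpolate a 2D box between two annotated keyframes.

    key_a, key_b: (frame_index, (x, y, w, h)) with key_a before key_b.
    frame: the frame index to fill in, between the two keyframes.
    """
    frame_a, box_a = key_a
    frame_b, box_b = key_b
    if frame_a >= frame_b or not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between two distinct keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


# Hypothetical keyframes ten frames apart; fill in the midpoint.
start = (0, (100, 50, 40, 20))
end = (10, (200, 50, 60, 20))
print(interpolate_box(start, end, 5))  # (150.0, 50.0, 50.0, 20.0)
```

Linear interpolation works best for short gaps and smooth motion. When an object accelerates or turns sharply, additional keyframes or human correction are usually needed.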

3D point cloud annotation is vital for spatial perception in robotics. Labeled LiDAR or depth sensor outputs give robots a richer understanding of their surroundings, so they can detect obstacles, estimate distances, and identify surfaces or objects in real time.

Use cases include:

- Navigation: Labeling floor planes, walls, and dynamic obstacles for indoor SLAM

- Manipulation: Mapping item dimensions and positions to guide robotic arms

- Field Robotics: Terrain classification and crop detection in agriculture

- Sensor Fusion: Combining video and LiDAR for more accurate perception pipelines

iMerit supports dense and sparse point cloud annotations, including multi-sensor fusion formats, helping robotics teams build robust, spatially aware AI systems.

Real-World Examples: Robotics in Action

Robotics plays a pivotal role across industries—from manufacturing lines and warehouses to agricultural fields. However, some of the most dynamic and impactful use cases are emerging in household and medical environments, where robots interact closely with humans and operate in highly variable, sensitive settings. These domains demand an extra level of precision, safety, and contextual understanding, powered by high-quality annotation.

| Sector | Annotation Applications |
|---|---|
| Manufacturing | Defect detection, weld point labeling, motion profiling |
| Warehouse Automation | Tracking objects, labeling pallets in LiDAR, and no-go zones |
| Agriculture | Segmenting crops/weeds, LiDAR-based terrain mapping, and precision agriculture |
| Medical Robotics | Labeling surgical tools, patient movement, and anatomy in scans |
| Household Robotics | Object identification, gesture tracking, and room segmentation |

Did you know: iMerit supports dynamic annotation pipelines for real-time systems, enabling live video and sensor data annotation with inertial feedback.

What’s Next for Robotics Data Annotation?

As robotics systems grow more advanced, the data annotation infrastructure will need to keep pace. Some emerging trends include:

- Simulation-to-Real Transfer: Generating synthetic data in simulated environments to pre-train models before real-world deployment

- Self-supervised Learning: Reducing reliance on fully labeled datasets using auto-labeling and contrastive learning

- Domain-Specific Foundation Models: Pre-trained vision and language models tailored for robotics, offering more accurate annotations out of the box

Conclusion

Every smart robot begins with smart data. And behind that data lies annotation that brings structure, context, and actionability. Whether your robotics system navigates shelves, homes, operating rooms, or agricultural fields, multimodal annotation ensures it understands the world it operates in.

A critical enabler of this intelligence is 3D point cloud annotation, transforming raw spatial data into actionable insight. Whether it’s identifying obstacles in a factory, mapping terrain in a field, or tracking human presence in a home, point cloud annotation allows robotic systems to “see” and understand depth, shape, and movement in real time. Combined with other data types like RGB, thermal, and sensor logs, it becomes the foundation for SLAM, object tracking, and environment segmentation.

With over a decade of expertise and a robust human-in-the-loop pipeline, iMerit helps robotics teams annotate faster, smarter, and more accurately, turning raw 3D and multimodal data into real-world intelligence.

Looking to power your robotics AI with multimodal annotation expertise?

iMerit provides high-quality data annotation services for 3D perception, sensor fusion, and robotics workflows—backed by human-in-the-loop precision and scalable infrastructure.