In robotics, learning is an ongoing process. Unlike traditional machine learning models that train once and deploy, robots operate in dynamic, ever-changing environments. Continual learning enables robots to adapt by incrementally learning from new data without forgetting prior knowledge. This adaptability relies on feedback data loops, 3D point cloud perception, and human-in-the-loop (HITL) systems to ensure safe and effective learning.

In this blog post, we explore how continual learning works, the critical role of feedback loops, and how iMerit’s data teams and Ango Hub platform enable scalable, high-quality robotic learning.

What is Continual Learning in Robotics?

Continual learning allows robots to improve performance over time by learning from new experiences without requiring complete retraining. This is essential in robotics, where dynamic environments and unexpected scenarios are common. Unlike static models, continual learners adapt to new tasks, handle edge cases, and correct errors based on real-world interactions.
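The post doesn't say which technique prevents forgetting. One widely used option is rehearsal, also called experience replay: keep a bounded sample of past data and mix it into every update on new data. Below is a minimal sketch of a replay buffer filled by reservoir sampling. The class name, the capacity, and the frame tags are illustrative assumptions, not part of any iMerit or Ango Hub tooling.

```python
import random
from typing import Generic, List, TypeVar

T = TypeVar("T")

class ReplayBuffer(Generic[T]):
    """Fixed-size memory of past training samples, filled by reservoir sampling.

    After n samples have been seen, each one is in the buffer with probability
    capacity / n, so earlier tasks stay represented as new data streams in.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[T] = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item: T) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
            return
        # Keep the new item with probability capacity / seen, evicting a random slot.
        j = self.rng.randrange(self.seen)
        if j < self.capacity:
            self.items[j] = item

    def mixed_batch(self, new_items: List[T], n_replay: int) -> List[T]:
        """Return fresh samples plus up to n_replay replayed samples for one update."""
        replay = self.rng.sample(self.items, min(n_replay, len(self.items)))
        return list(new_items) + replay

# Hypothetical usage: frames from an old warehouse layout, then a layout change.
buffer: ReplayBuffer = ReplayBuffer(capacity=100)
for frame_id in range(1000):
    buffer.add(("warehouse_v1", frame_id))

new_frames = [("warehouse_v2", i) for i in range(8)]
batch = buffer.mixed_batch(new_frames, n_replay=8)
print(len(batch))  # 16: 8 new frames + 8 replayed old ones
```

Training on `batch` rather than on `new_frames` alone is what keeps the old layout from being overwritten. The replay ratio is a tuning choice.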

Examples of Continual Learning

Robots equipped with continual learning can evolve by adapting to environmental changes and acquiring new skills over time, often referred to as robotic upskilling.

- Warehouses: Mobile robots adjust to updated shelf layouts and navigate around new obstacles using 3D point cloud mapping for spatial awareness.

- Manufacturing: Quality control robots refine object defect detection models as new materials or geometries appear in 3D scans.

- Agriculture: Field robots interpret 3D terrain and vegetation structure changes, adapting navigation and crop assessment on the fly.

- Healthcare: Surgical robots enhance tool tracking and tissue differentiation with each new annotated 3D volumetric scan.

- Autonomous Vehicles: LiDAR-based 3D point cloud data helps AVs interpret traffic anomalies or construction zones, and rare edge cases captured on the road feed back into model updates.

- Retail: Inventory robots map store layouts in 3D, adjusting to newly stocked shelves or rearranged aisles.

- Construction: Site robots refine handling of new tools, materials, or site layouts, improving precision in dynamic work zones.

Each scenario underscores the importance of accurate and timely 3D data labeling, a challenge compounded by volume, variety, and velocity.

From One-Time Training to Continuous Evolution

Traditional machine learning involves training on a dataset, evaluating, and deploying. However, real-world environments—roads, warehouses, hospitals—are not static. Continual learning transforms deployment into an opportunity for improvement, enabling robots to learn from each interaction, especially through high-dimensional data like 3D point clouds, and become more reliable and efficient over time.

Feedback Loops: Powering Continual Learning in Robotics

Feedback loops are the backbone of continual learning. They let robots refine models based on real-world performance, turning operational data into training opportunities. In high-stakes environments like autonomous vehicles or medical robotics, multimodal feedback, including LiDAR and point cloud data, is critical. HITL systems play a pivotal role in turning that feedback into reliable training data.

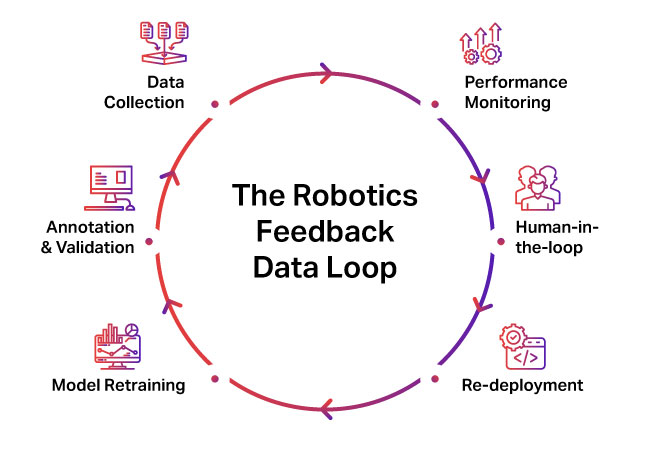

The Feedback Cycle

At its core, a robotics feedback loop includes the following steps:

- Deployment: Robots operate in real-world environments, collecting spatial and semantic data via LiDAR, RGB cameras, radar, etc.

- Data Collection: Operation generates massive volumes of time-synced multimodal sensor data, including LiDAR point clouds, video, IMU, GPS, and audio.

- Performance Monitoring: Edge cases or anomalies are flagged, such as misclassifying objects in sparse or occluded 3D scenes.

- Annotation & Validation: 3D annotations, like object bounding boxes or semantic segmentation in point clouds, are added, often with HITL oversight.

- Model Retraining: Cleaned and labeled data improves perception modules, especially those relying on spatial inference from point cloud data.

- Re-deployment: Updated models perform better in complex, real-world conditions.

This loop ensures that the robot doesn’t just repeat behavior; it evolves intelligently over time.
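The cycle above can be sketched as a toy simulation. Here the "model" is just the set of object classes it recognizes, and each stage is a hypothetical stand-in function. Real pipelines retrain neural networks and use human annotators, so this only shows how the stages connect and why flagged errors shrink across rounds.

```python
from typing import List, Set, Tuple

# Toy model: the set of object classes it can recognize (an illustrative assumption).
Model = Set[str]

def deploy(model: Model, scene: List[str]) -> List[str]:
    """Deployment + data collection: run the model on the objects in one scene."""
    return [obj if obj in model else "unknown" for obj in scene]

def monitor(predictions: List[str], scene: List[str]) -> List[str]:
    """Performance monitoring: flag objects the model could not classify.

    In this simulation the scene list doubles as ground truth.
    """
    return [obj for obj, pred in zip(scene, predictions) if pred == "unknown"]

def annotate(flagged: List[str]) -> Set[str]:
    """Annotation & validation: stand-in for a human reviewer confirming labels."""
    return set(flagged)

def retrain(model: Model, new_labels: Set[str]) -> Model:
    """Model retraining: produce a new model version that includes validated labels."""
    return model | new_labels

def run_feedback_loop(model: Model, scenes: List[List[str]]) -> Tuple[Model, List[int]]:
    flagged_per_round = []
    for scene in scenes:
        predictions = deploy(model, scene)
        flagged = monitor(predictions, scene)
        flagged_per_round.append(len(flagged))
        if flagged:
            # Re-deployment: the updated model is used on the next scene.
            model = retrain(model, annotate(flagged))
    return model, flagged_per_round

scenes = [
    ["car", "pedestrian", "scooter"],
    ["car", "scooter", "forklift"],
    ["forklift", "scooter", "car"],
]
final_model, flagged_per_round = run_feedback_loop({"car", "pedestrian"}, scenes)
print(flagged_per_round)  # [1, 1, 0]
```

Each new class is flagged once, validated, and folded into the next model version. After that it no longer produces errors.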

What Counts as Feedback?

Feedback isn’t limited to errors; it includes any signal that can help improve performance.

- A misaligned LiDAR-camera fusion frame may signal calibration drift.

- A 3D detection failure at night could reveal a domain shift in point cloud reflectance patterns.

- A missed pedestrian in a dense point cloud may uncover labeling or model bias.

- Human overrides often expose model blind spots.

- Low-confidence predictions mark inputs the model is unsure about, making them prime candidates for annotation and retraining.

- Skipped obstacles may indicate segmentation errors.

These aren’t just anomalies; they’re critical learning opportunities.
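The signals listed above can be turned into simple trigger rules that decide which logged frames enter the review queue. This is a minimal sketch. The `FrameLog` fields and both thresholds are hypothetical, and real values depend on the sensor suite and model.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class FrameLog:
    frame_id: int
    min_confidence: float         # lowest detection confidence in the frame
    human_override: bool          # an operator took control or corrected the robot
    reprojection_error_px: float  # LiDAR-to-camera alignment error, in pixels
    missed_obstacle: bool         # an obstacle went undetected by segmentation

# Hypothetical thresholds, for illustration only.
CONFIDENCE_FLOOR = 0.5
REPROJ_LIMIT_PX = 3.0

def feedback_reasons(log: FrameLog) -> List[str]:
    """Return every feedback signal a frame triggers (empty list if none)."""
    reasons = []
    if log.min_confidence < CONFIDENCE_FLOOR:
        reasons.append("low_confidence")
    if log.human_override:
        reasons.append("human_override")
    if log.reprojection_error_px > REPROJ_LIMIT_PX:
        reasons.append("calibration_drift")
    if log.missed_obstacle:
        reasons.append("segmentation_error")
    return reasons

logs = [
    FrameLog(1, 0.92, False, 1.1, False),
    FrameLog(2, 0.31, True, 1.4, False),
    FrameLog(3, 0.88, False, 4.7, False),
]
review_queue: Dict[int, List[str]] = {}
for log in logs:
    reasons = feedback_reasons(log)
    if reasons:
        review_queue[log.frame_id] = reasons
print(review_queue)  # {2: ['low_confidence', 'human_override'], 3: ['calibration_drift']}
```

Keeping the reasons attached to each frame tells annotators what to look for. It also lets teams track which signal types produce the most useful retraining data.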

Challenges That Can Drown Your Feedback Loop

Feedback loops are critical for continual learning, but their execution is fraught with complexities due to the nature of robotic data and operational pitfalls. These challenges can undermine the effectiveness of the feedback process if not addressed with robust systems and human oversight.

Data Complexity and Criticality

Robotic feedback data is inherently complex, characterized by its volume, variety, and velocity. For example, an autonomous vehicle can generate 1–2 terabytes of sensor data per hour, including camera footage, radar returns, and LiDAR scans. Across a fleet, this scales to petabytes daily. Within this vast dataset, only a small fraction, such as a few seconds of a cyclist swerving unexpectedly, contains critical edge cases that drive meaningful learning. Identifying and isolating these anomalies requires meticulous review and contextual understanding.
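A back-of-the-envelope calculation shows how the per-vehicle figure reaches fleet scale. The fleet size, driving hours, and "seconds of edge cases per hour" below are assumptions chosen only to illustrate the arithmetic. The 1 to 2 TB/hour rate is the figure cited above.

```python
# Assumed fleet parameters (hypothetical, for illustration only).
VEHICLES = 200
HOURS_PER_DAY = 8
TB_PER_HOUR_RANGE = (1.0, 2.0)   # per-vehicle rate cited in the text
CRITICAL_SECONDS_PER_HOUR = 10   # assumed seconds of genuine edge cases per hour

def daily_fleet_volume_pb(tb_per_hour: float) -> float:
    """Raw sensor data the whole fleet produces per day, in petabytes (1 PB = 1,000 TB)."""
    return VEHICLES * HOURS_PER_DAY * tb_per_hour / 1000

def daily_critical_volume_tb(tb_per_hour: float) -> float:
    """Portion of that data that contains edge cases, in terabytes."""
    share = CRITICAL_SECONDS_PER_HOUR / 3600
    return VEHICLES * HOURS_PER_DAY * tb_per_hour * share

for rate in TB_PER_HOUR_RANGE:
    print(f"{rate:.0f} TB/h -> {daily_fleet_volume_pb(rate):.1f} PB/day raw, "
          f"~{daily_critical_volume_tb(rate):.1f} TB/day of edge cases")
```

Under these assumptions the fleet produces roughly 1.6 to 3.2 PB of raw data per day. Only about 0.3% of that (10 of every 3,600 seconds) is the material that actually drives learning.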

Key complexities include:

- Volume: Autonomous vehicles generate terabytes of 3D sensor data daily, making it challenging to identify valuable feedback points from vast datasets.

- Variety: Multimodal sensor data (LiDAR, radar, RGB, depth) requires accurate fusion and alignment to avoid misinterpretations, such as a robot misjudging an obstacle’s distance.

- Velocity: Rapid processing of feedback is crucial in high-speed environments to avoid outdated models. Delays can result in recurring errors.

- Label Noise: Mislabeling edge cases during retraining can degrade model performance, making the system worse rather than better. For instance, labeling a pedestrian’s unusual movement as an error when it was contextually correct can confuse the model.

- Ambiguity: A robot’s “mistake” in one scenario might be correct in another. For example, a visual anomaly in agricultural robotics might stem from lighting, crop disease, or sensor miscalibration, requiring human interpretation to avoid incorrect generalizations.

- Latency: Slow 3D annotation workflows mean robots continue learning from outdated or inaccurate interpretations.

- Consistency: When multiple annotators are involved, inconsistent labeling practices can introduce noise, leading to model confusion.

- Edge Case Rarity: Unique 3D interactions, like a child emerging from behind a parked truck, are sparse and hard to mine but invaluable.

- Sensor Fusion: Properly merging 3D point clouds with other sensor streams (e.g., IMU or GPS) is crucial to maintain spatial accuracy.

- Temporal Context: Errors may stem from events several seconds earlier, necessitating time-series analysis to provide accurate context.
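The fusion and alignment problems above come down to geometry. To overlay a LiDAR point on a camera image, the point is transformed into the camera frame (extrinsics) and then projected with the camera intrinsics. Below is a minimal pinhole-model sketch. It ignores lens distortion, and the calibration values are made up. Tracking the pixel gap between projected and observed features is one common way to catch the calibration drift mentioned earlier.

```python
import math
from typing import Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]

def project_point(point_lidar: Point3,
                  extrinsic: Sequence[Sequence[float]],
                  fx: float, fy: float, cx: float, cy: float) -> Optional[Pixel]:
    """Project one LiDAR point into the image with a pinhole camera model.

    extrinsic is a 3x4 [R | t] matrix mapping LiDAR coordinates to camera
    coordinates (camera convention: x right, y down, z forward).
    """
    x, y, z = point_lidar
    xc, yc, zc = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in extrinsic)
    if zc <= 0:
        return None  # behind the camera, so it cannot appear in the image
    return (fx * xc / zc + cx, fy * yc / zc + cy)

def reprojection_error(projected: Pixel, observed: Pixel) -> float:
    """Pixel distance between where calibration says a point lands and where it is seen."""
    return math.dist(projected, observed)

# Hypothetical calibration: LiDAR (x forward, y left, z up) mounted at the camera origin.
EXTRINSIC = [
    [0.0, -1.0, 0.0, 0.0],  # camera x = -LiDAR y
    [0.0, 0.0, -1.0, 0.0],  # camera y = -LiDAR z
    [1.0, 0.0, 0.0, 0.0],   # camera z = LiDAR x
]
FX = FY = 1000.0
CX, CY = 640.0, 360.0

uv = project_point((10.0, -1.0, 0.5), EXTRINSIC, FX, FY, CX, CY)
print(uv)  # (740.0, 310.0)
print(project_point((-5.0, 0.0, 0.0), EXTRINSIC, FX, FY, CX, CY))  # None
print(round(reprojection_error(uv, (742.0, 313.0)), 2))  # 3.61
```

In a real system the extrinsic and intrinsic values come from a calibration procedure, and LiDAR and camera frames must first be matched by timestamp.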

These complexities highlight that effective continual learning requires not just more data, but clean, contextual, and structured data that accurately reflects real-world interactions. Without this, robots risk stagnating or learning incorrect behaviors.

Operational Pitfalls

Beyond data complexity, feedback loops face practical challenges that can derail their effectiveness. Poorly designed or managed loops can introduce more noise than signal, undermining continual learning. Common pitfalls include:

- Label Drift: Over time, inconsistent labeling practices, such as varying interpretations of edge cases, can lead to model confusion and performance degradation. For example, if one annotator labels a low-confidence prediction differently from another, the model may learn conflicting patterns.

- Annotation Bottlenecks: Manual annotation, especially for complex multimodal data, can be slow and resource-intensive. Delays in processing feedback data hinder the responsiveness of the learning cycle, causing robots to repeat errors.

- Poor Data Prioritization: Treating all data equally wastes time and resources on irrelevant or redundant information. For instance, an hour of autonomous driving data may contain only a few critical moments, but without smart filtering, teams may annotate unnecessary segments.

- Quality Assurance: Noisy or inconsistent 3D labeling, such as misclassified edge cases or inaccurate point-level segmentation in point clouds, can degrade model performance by distorting its understanding of depth, distance, and object boundaries, leading to incorrect behaviors during retraining.
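One simple quality check for 3D labels compares an annotator’s bounding box with a reviewer’s box for the same object using 3D intersection-over-union (IoU). Pairs that overlap poorly are flagged. The sketch below assumes axis-aligned boxes for brevity. Most driving datasets use yaw-rotated boxes, which need a bird’s-eye-view polygon intersection instead. The 0.7 threshold is a hypothetical choice.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Box3D:
    """Axis-aligned 3D box: center (x, y, z) and size (length, width, height), in meters."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float

    @property
    def volume(self) -> float:
        return self.length * self.width * self.height

def _overlap(c1: float, s1: float, c2: float, s2: float) -> float:
    """Length of the overlap between two 1D intervals given by center and size."""
    lo = max(c1 - s1 / 2, c2 - s2 / 2)
    hi = min(c1 + s1 / 2, c2 + s2 / 2)
    return max(0.0, hi - lo)

def iou_3d(a: Box3D, b: Box3D) -> float:
    inter = (_overlap(a.x, a.length, b.x, b.length)
             * _overlap(a.y, a.width, b.y, b.width)
             * _overlap(a.z, a.height, b.z, b.height))
    union = a.volume + b.volume - inter
    return inter / union if union > 0 else 0.0

def qa_flags(annotated: List[Box3D], reviewed: List[Box3D], threshold: float = 0.7) -> List[int]:
    """Indices where an annotator's box disagrees with the reviewer's box for the same object."""
    return [i for i, (a, r) in enumerate(zip(annotated, reviewed)) if iou_3d(a, r) < threshold]

annotated = [Box3D(10.0, 2.0, 0.8, 4.5, 1.9, 1.6), Box3D(5.0, -3.0, 0.9, 0.6, 0.6, 1.8)]
reviewed = [Box3D(10.1, 2.0, 0.8, 4.5, 1.9, 1.6), Box3D(5.4, -3.0, 0.9, 0.6, 0.6, 1.8)]
print(qa_flags(annotated, reviewed))  # [1]
```

A 10 cm offset on a car-sized box barely matters (IoU of about 0.96). The same 40 cm offset on a pedestrian-sized box drops IoU to 0.2, which is why small objects tend to dominate QA flags.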

These operational challenges underscore the need for efficient, scalable data pipelines supported by automation and human-in-the-loop processes. Platforms like Ango Hub, which streamline annotation and collaboration, are essential to overcoming these hurdles.

Did You Know? An hour of autonomous driving can generate terabytes of sensor data, but only a fraction is useful for retraining. Smart filtering and annotation are critical to making feedback loops effective.
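One common way to implement smart filtering is uncertainty sampling. Rank frames by how uncertain the model's prediction is, then spend the annotation budget on the most uncertain ones first. The sketch below scores uncertainty with Shannon entropy over class probabilities. The frame IDs and probabilities are hypothetical, and production pipelines often add diversity or novelty criteria so the selected frames aren't near-duplicates.

```python
import math
from typing import Dict, List, Sequence

def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one prediction's class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(frame_probs: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Pick the `budget` frames whose predictions are most uncertain."""
    ranked = sorted(frame_probs, key=lambda fid: entropy(frame_probs[fid]), reverse=True)
    return ranked[:budget]

# Hypothetical class probabilities for the main object in each frame.
frame_probs = {
    "frame_001": [0.98, 0.01, 0.01],  # confident: likely redundant for retraining
    "frame_002": [0.40, 0.35, 0.25],  # near-uniform: the model is confused
    "frame_003": [0.70, 0.20, 0.10],
}
print(select_for_annotation(frame_probs, budget=2))  # ['frame_002', 'frame_003']
```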

Human-in-the-Loop and RLHF: Closing the Gaps in Robotic Learning

Solving 3D perception challenges requires human insight. HITL systems and Reinforcement Learning from Human Feedback (RLHF) ensure robotic learning is contextual, consistent, and correct, especially in environments rich with spatial complexity and edge cases.

Human Oversight for Data Complexity

Autonomous systems generate massive volumes of multimodal data, including terabytes of LiDAR, radar, RGB, and depth streams. HITL teams help filter out irrelevant sequences and surface the most valuable edge cases for retraining, such as unexpected object occlusions or rare 3D interactions. This ensures feedback loops focus on what truly matters.

Clarifying Ambiguity and Edge Cases

Automation often struggles with ambiguous 3D data, such as distinguishing between a fallen branch and a pedestrian’s shadow, or identifying whether a point cloud blob is a pole, person, or tree. Human reviewers apply real-world reasoning to make these judgment calls accurately, preventing misclassifications that could degrade model performance.

Enforcing Consistency and Preventing Label Drift

One of the biggest threats to continual learning is label drift, where annotations become inconsistent over time or across teams. HITL enforces strict annotation protocols, peer reviews, and training standards to ensure that 3D labeling remains consistent, coherent, and reliable.
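Consistency is easier to enforce when it is measured. A standard metric is Cohen’s kappa, which compares how often two annotators agree with how often they would agree by chance. The sketch below assumes two annotators labeled the same set of point cloud clusters. The labels are hypothetical, and any pass/fail kappa threshold would be a project-specific choice.

```python
from collections import Counter
from typing import Hashable, Sequence

def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two annotators' labels for the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Probability both pick the same label if each labeled at random with their own frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout, so kappa is degenerate
    return (observed - expected) / (1 - expected)

annotator_1 = ["pedestrian", "pedestrian", "cyclist", "pole", "pole", "pedestrian"]
annotator_2 = ["pedestrian", "cyclist", "cyclist", "pole", "pedestrian", "pedestrian"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.478
```

Raw agreement here is 4 of 6 (67%), but kappa is only about 0.48 once chance agreement is removed. A falling kappa over successive batches is an early warning of label drift.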

Accelerating Feedback Loops with Accuracy

Slow annotation cycles can stall model updates and allow errors to persist. HITL workflows combine speed and precision, delivering rapid validation and high-quality annotations, especially in spatially dense or noisy 3D datasets. This enables robots to learn from the most recent, relevant scenarios without inheriting flawed data.
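One way to get both speed and precision is to route model pre-labels by confidence. Confident items get a light spot check, mid-confidence items get a quick correction pass, and ambiguous items go to expert reviewers. The sketch below is a generic illustration, not Ango Hub’s actual routing logic or API. The queue names and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model confidence for the pre-label, between 0 and 1

# Hypothetical thresholds; teams would tune these against audit results.
AUTO_ACCEPT_AT = 0.95
EXPERT_BELOW = 0.60

def route(prelabels: List[PreLabel]) -> Dict[str, List[str]]:
    """Assign each pre-labeled item to a review queue based on model confidence."""
    queues: Dict[str, List[str]] = {"spot_check": [], "annotator_review": [], "expert_review": []}
    for p in prelabels:
        if p.confidence >= AUTO_ACCEPT_AT:
            queues["spot_check"].append(p.item_id)        # sampled QA only
        elif p.confidence >= EXPERT_BELOW:
            queues["annotator_review"].append(p.item_id)  # quick correction pass
        else:
            queues["expert_review"].append(p.item_id)     # ambiguous: senior reviewer
    return queues

batch = [
    PreLabel("scan_17/obj_3", "car", 0.99),
    PreLabel("scan_17/obj_8", "pole", 0.72),
    PreLabel("scan_18/obj_1", "pedestrian", 0.41),
]
print(route(batch))
```

Routing concentrates expert time where automation is weakest while keeping a sampled check on high-confidence labels, so errors there don’t slip through unnoticed.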

RLHF for Robotic Decisions

Reinforcement Learning from Human Feedback (RLHF) teaches robots to make decisions that align with human preferences, especially in ambiguous 3D scenarios. Instead of simply choosing the most likely action, RLHF helps models select the most contextually appropriate or human-preferred response, improving safety, efficiency, and ethical alignment. It’s not just about recognizing an object; it’s about knowing how to respond.
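RLHF pipelines typically start by fitting a reward model to human preference comparisons. A common formulation is the Bradley-Terry model, where the probability that a human prefers action A over action B is sigmoid(r(A) - r(B)). Below is a minimal sketch with a linear reward over two hypothetical maneuver features (clearance and speed) and made-up preference pairs. A full RLHF setup would then optimize a policy against the learned reward (often with PPO). Here the sketch simply picks the highest-reward candidate.

```python
import math
from typing import List, Sequence, Tuple

Features = Sequence[float]

def reward(w: Sequence[float], phi: Features) -> float:
    """Linear reward: a weighted sum of an action's features."""
    return sum(wi * xi for wi, xi in zip(w, phi))

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_reward_model(prefs: List[Tuple[Features, Features]], dim: int,
                     lr: float = 0.5, epochs: int = 200) -> List[float]:
    """Fit weights so P(preferred beats rejected) = sigmoid(r(preferred) - r(rejected)).

    Uses plain gradient ascent on the Bradley-Terry log-likelihood.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in prefs:
            margin = reward(w, preferred) - reward(w, rejected)
            # d/dw log sigmoid(margin) = (1 - sigmoid(margin)) * (phi_pref - phi_rej)
            scale = 1.0 - sigmoid(margin)
            w = [wi + lr * scale * (fp - fr) for wi, fp, fr in zip(w, preferred, rejected)]
    return w

# Hypothetical features per candidate maneuver: [clearance_m, speed_mps].
# Each pair is (maneuver a human preferred, maneuver the human rejected).
prefs = [
    ([1.5, 0.5], [0.4, 1.5]),
    ([1.2, 0.8], [0.5, 1.2]),
    ([2.0, 0.6], [1.0, 1.8]),
]
w = fit_reward_model(prefs, dim=2)
candidates = [[0.3, 1.6], [1.4, 0.6]]
best = max(candidates, key=lambda c: reward(w, c))
print(best)  # [1.4, 0.6]
```

The learned weights favor clearance and penalize speed near pedestrians. The reward model recovers these preferences from comparisons alone, without anyone writing the rule explicitly.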

HITL and RLHF don’t just patch gaps; they actively prevent feedback loop failures. By embedding human reasoning into the learning cycle, teams can overcome data overload, ambiguity, and operational bottlenecks, enabling robotic systems to learn continuously, safely, and accurately.

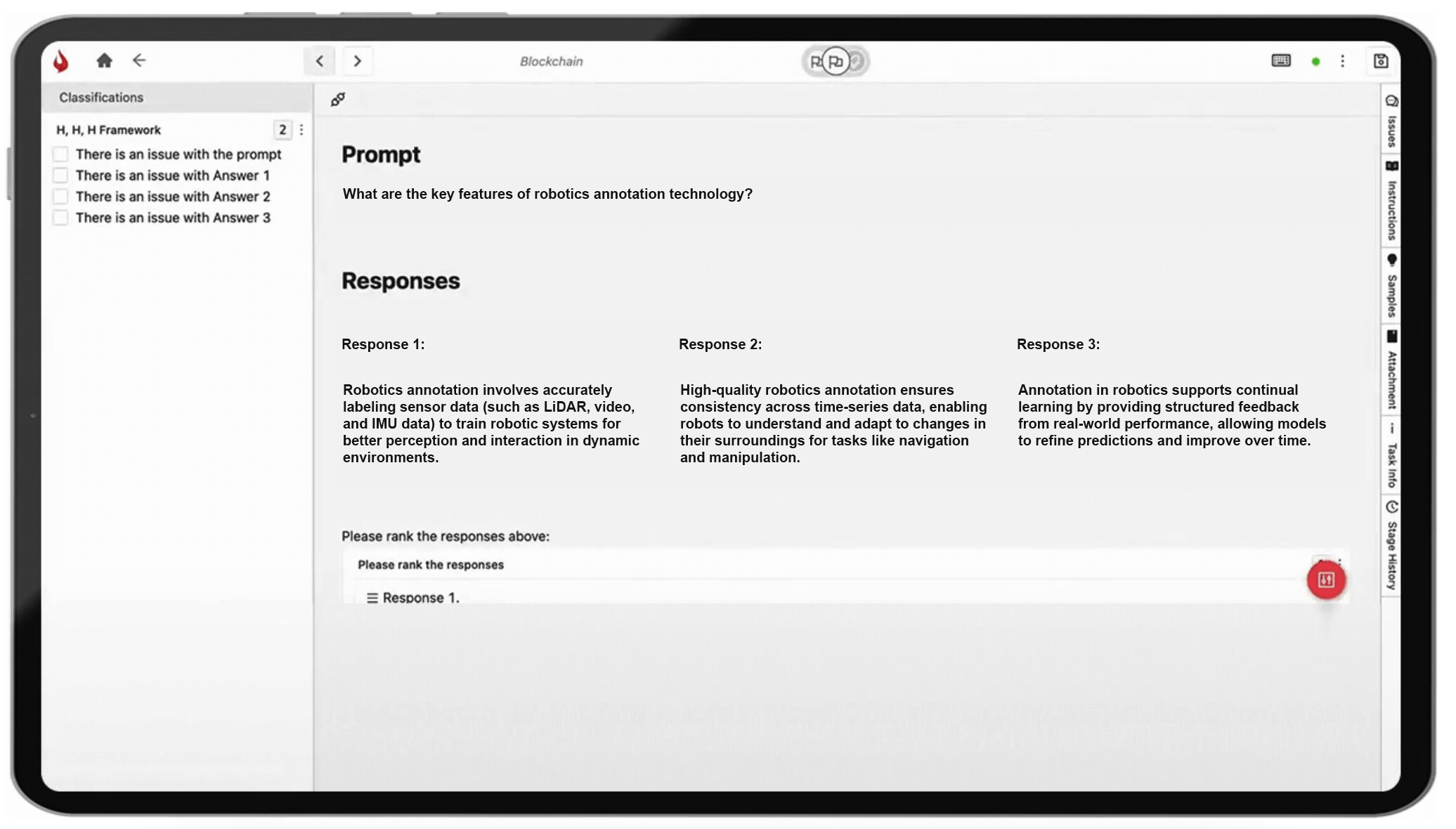

How iMerit and Ango Hub Power 3D Feedback Data Loops

iMerit bridges the gap between robotics operations and continual learning by managing complex 3D feedback loops through human-in-the-loop workflows and the Ango Hub platform. From autonomous mobility to medical robotics and agriculture, iMerit ensures scalable, high-quality annotation of spatial feedback data across industries.

Building effective feedback loops in robotics requires handling the scale and complexity of multimodal sensor data such as LiDAR, RGB, thermal, radar, 3D point clouds, video, and image inputs. iMerit and its Ango Hub platform address this challenge with a blend of automation, APIs, and expert oversight, enabling scalable data annotation, validation, and integration to drive continual learning.

How iMerit Helps

Ango Hub Platform

- Supports advanced annotation workflows for multimodal data, including 3D point cloud labeling and sensor fusion, ensuring accurate processing of complex robotic inputs.

- Automates key processes like pre-labeling, smart sampling, and workflow routing, reducing turnaround times and annotator fatigue.

- Integrates via APIs with MLOps pipelines, keeping model training cycles short and reactive.

- Provides collaborative tools and version-controlled feedback tracking to streamline teamwork, enable rapid iteration, and accelerate model updates.

- Enables time-series visualization to trace model behavior and errors, enhancing error correction.

iMerit’s HITL Workforce

- Specializes in multimodal perception annotation—LiDAR, radar, 3D, thermal, and beyond.

- Filters relevant 3D edge cases from vast robotic datasets.

- Delivers project-specific workflows that accelerate 3D model retraining.

From annotating rare medical anomalies and agricultural terrain changes to reviewing autonomous vehicle edge cases, iMerit bridges robotic operations and continuous model improvement, turning every interaction into a learning opportunity.

Real Robots, Real Impact

iMerit partnered with a medical device company to annotate surgical videos, achieving a 99% improvement in recognition accuracy for surgical AI models. These enhanced robotic surgery systems enable more precise procedures and better patient outcomes. (Case study: Computer Vision for Robotic Surgery)

Driving Robotic Intelligence with Feedback

In robotics, the difference between static models and adaptive intelligence lies in feedback, and 3D point cloud data is the foundation of that feedback. Continual learning, fueled by HITL systems, RLHF, and scalable 3D annotation, turns raw perception into meaningful progress.

iMerit bridges this critical gap between data and deployment. Whether it’s navigating chaotic urban streets, monitoring crop health, or assisting in surgical procedures, our human-in-the-loop teams and Ango Hub platform enable robotic systems to evolve safely, intelligently, and at scale.

Partner with iMerit to unlock the power of 3D continual learning.