Across autonomous vehicles (AVs), ADAS, and industrial and logistics robots, perception systems must operate with near‑perfect reliability (often 99.9%). Even a 95% success rate can still result in many daily failures. Many of these failures cluster in long‑tail scenarios, where unexpected or unusual situations occur with low individual probability but high impact. For example, an industrial robot safety audit reports 27 to 49 robot accidents every year due to gaps in risk assessment and unforeseen interaction scenarios.

But the long-tail problem is particularly acute in autonomous vehicles, which currently experience crashes at rates far higher than human-driven cars in complex and safety-critical situations. In fact, edge cases alone make up about 8% of AV crashes but cause 27% of injuries, more than three times their share of crashes.

Yet traditional field testing and real-world monitoring would need hundreds of millions of operational hours to encounter all rare scenarios naturally.

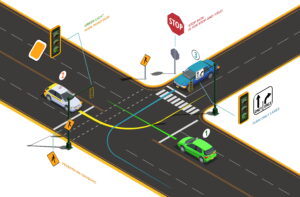

Scenario mining on high-definition (HD) maps addresses this challenge by analyzing recorded data to find safety-critical scenarios before they cause real-world failures. HD maps for autonomous driving encode lanes, traffic lights, crosswalks, and more. Combined with sensor data, they help extract, classify, and recreate safety-critical scenarios for perception testing.

In this article, we explain how HD-map–based scenario mining addresses the long-tail testing problem and provide a step-by-step workflow for extracting these scenarios.

What Is Scenario Mining?

Scenario mining is the process of scanning raw sensor data and driving logs to identify, extract, and catalog specific traffic interactions relevant to safety validation.

Once scenarios are extracted and validated, they become repeatable test cases that can be used for perception testing, model training, and stress-testing decision-making algorithms.

Since these scenarios are sourced from real-world data, they carry authentic behavioral patterns that simulation-only approaches might miss.

The Long-Tail Problem in AV Perception

Road driving environments follow a long-tail distribution: common events (light urban traffic, highway driving) are familiar, but many rare and dangerous interactions occur so infrequently that ordinary driving logs seldom capture them.

Long-tail scenarios can involve an emergency vehicle suddenly approaching from behind, roadwork with detours, or temporary lane markings. Other examples are pedestrians darting out from behind parked vehicles, sensor malfunctions in bad weather, and complex interactions at multilane intersections.

Although each of these safety-critical events might happen only once in millions of miles, they can cause severe failures when unhandled.

Relying solely on standard fleet testing to cover the long tail is impractical because real-world driving alone cannot capture every rare event. So, teams turn to scenario mining, using logged sensor data and HD maps to query and extract short segments of driving data that match pre-defined safety-critical conditions.

And this targeted mining of edge cases (and broadly, any safety-critical scenario) speeds up perception testing and training beyond what raw mileage could yield.

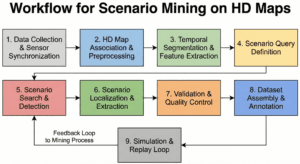

Workflow for Scenario Mining on HD Maps

The workflow below outlines how raw autonomous vehicle fleet data and high-definition maps are processed to mine safety-critical scenarios.

Data Collection and Sensor Synchronization

The first step is to collect multi-modal driving logs from the AV fleet sensor suite. These logs include LiDAR point clouds, radar returns, high-resolution camera frames, inertial measurement unit (IMU) readings, GNSS (GPS) readings, and any existing perception outputs.

Since sensors operate at different frequencies, unsynchronized clocks can result in errors in data fusion. So, for accurate scenario mining, these logs must be time-aligned and calibrated so that every sensor measurement shares a common timestamp and coordinate frame.

- Clock Synchronization: Systems use the Precision Time Protocol (PTP) or Network Time Protocol (NTP) to establish a common network clock among all sensing devices. GPS Pulse Per Second (PPS) signals provide a universal time reference and ensure that timestamps are globally consistent.

- Hardware Triggering: Many architectures use an external synchronization control unit to send triggering signals to multiple devices to initiate data acquisition in parallel across cameras and LiDAR sensors.

- Dynamic Time Warping (DTW): In environments where GNSS signals are degraded, such as urban canyons, DTW-based velocity matching is used to perform temporal alignment between LiDAR, GNSS, and IMU data. It compares velocity profiles from different sensors to find the optimal time offset and reduces alignment errors.
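To make the DTW idea more concrete, here is a minimal sketch of velocity-profile matching. It assumes both sensors report speed at the same sampling interval `dt`, and it takes the median index lag along the warping path as the time offset. The function names `dtw_path` and `estimate_offset` are ours for illustration, and a production system would likely use an optimized DTW library with windowing constraints rather than this quadratic loop.

```python
import numpy as np

def dtw_path(a, b):
    """Return the optimal DTW alignment path between 1-D sequences a and b."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    # Backtrack from the last cell to recover the alignment.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = np.argmin([cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1]])
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return path[::-1]

def estimate_offset(v_ref, v_other, dt):
    """Estimate how many seconds v_other lags v_ref (assumes a shared sample rate)."""
    lags = [j - i for i, j in dtw_path(v_ref, v_other)]
    return float(np.median(lags)) * dt
```

The median is used because the start and end of a DTW path are forced to the sequence boundaries, so a few lags near the edges are distorted. A positive result means the second stream reports the same motion later than the reference.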

Once all sensor streams are time-aligned and localized, each data point is registered to a global coordinate system that matches the HD map.
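As a rough illustration of this registration step, the sketch below moves 2D points from the vehicle frame into the map frame using the ego pose. It assumes a planar pose (x, y, yaw in radians) and ignores sensor extrinsics, roll, pitch, and elevation, all of which a real pipeline would need. The function name `sensor_to_map` is hypothetical.

```python
import numpy as np

def sensor_to_map(points_xy, ego_x, ego_y, ego_yaw):
    """Rotate vehicle-frame points by the ego yaw, then translate to the ego position."""
    c, s = np.cos(ego_yaw), np.sin(ego_yaw)
    rotation = np.array([[c, -s], [s, c]])
    return np.asarray(points_xy, dtype=float) @ rotation.T + np.array([ego_x, ego_y])
```

For example, a point one meter ahead of a vehicle at map position (10, 20) that is heading along the map's +y axis lands at roughly (10, 21).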

HD Map Association and Preprocessing

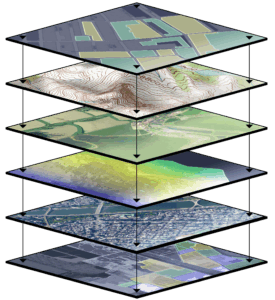

Raw sensor data has limited context, so high-definition maps supply the missing semantic and geometric layers. A point cloud shows that something is ahead, but what is it? Is it a lane boundary or road debris? HD maps for autonomous driving record locations accurate to a few centimeters, detailed lane shapes, and fixed objects like sidewalks and crosswalks. This prior information helps localize the vehicle precisely, even in tough spots like busy city streets or tunnels where GPS might struggle.

Map matching aligns the vehicle trajectory against HD map features to correct GPS drift and provide accurate situational awareness. The primary layers include:

- Geometric Layer: It describes the position of lanes, road edges, and medians. LiDAR scans are matched against these features to maintain a stable estimate of location.

- Semantic Layer: This layer includes details such as traffic signs, lane markings, and signals. It provides the rules of the road that influence the vehicle’s behavior.

- 3D Landmarks: Elevation models and 3D landmarks, such as poles or building facades, let the vehicle orient itself even when ground-level markings are obscured.

During preprocessing, tag each log segment with context metadata from the map. For instance, label the clip as an urban intersection, a highway merge, or a residential street. These context tags help prune searches later (skip city logs if a scenario only makes sense on a highway).
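A simple way to picture context tagging is a majority vote over the ego positions in a clip. The sketch below assumes map regions are simplified to axis-aligned boxes with a tag, which is far coarser than real HD map polygons. `MapRegion` and `tag_segment` are illustrative names, not part of any map SDK.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class MapRegion:
    tag: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

def tag_segment(ego_xy, regions, default="unknown"):
    """Label a clip with the region tag that covers most of its ego positions."""
    if not ego_xy:
        return default
    votes = Counter()
    for x, y in ego_xy:
        # First matching region wins; positions outside every region vote for the default.
        votes[next((r.tag for r in regions if r.contains(x, y)), default)] += 1
    return votes.most_common(1)[0][0]
```

With tags like these attached to every clip, a highway-only query can skip clips labeled as urban intersections without touching their sensor data.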

Temporal Segmentation and Feature Extraction

After enriching the logs with map context, the next step is feature extraction. Before extraction, break the logs into short temporal clips (typically 20–30 seconds each), long enough to capture the causality of an event. This lets the pipeline process only the interesting windows while discarding miles of uneventful driving.
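The segmentation step can be sketched as follows. This version assumes fixed-length, non-overlapping clips and a precomputed list of trigger times (for example, hard-braking events); both are simplifications, and the function names are hypothetical.

```python
def split_into_clips(t_start, t_end, clip_len=20.0):
    """Cut the interval [t_start, t_end) into consecutive clips of at most clip_len seconds."""
    clips = []
    t = t_start
    while t < t_end:
        clips.append((t, min(t + clip_len, t_end)))
        t += clip_len
    return clips

def keep_eventful(clips, event_times):
    """Keep only the clips that contain at least one trigger event."""
    return [(a, b) for a, b in clips if any(a <= e < b for e in event_times)]
```

For a 70-second log with triggers at 25.0 s and 61.5 s, only the clips covering 20–40 s and 60–70 s would be kept for feature extraction.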

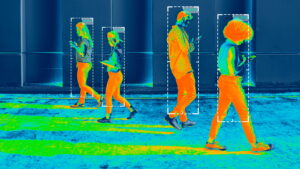

Feature extraction recovers the 3D trajectories of all actors in the scene (vehicles, pedestrians, and cyclists) using automated tracking algorithms. For every tracked object, the system calculates a set of safety-relevant attributes over time. These include relative velocity, acceleration, distance from the ego vehicle, lane occupancy, and distance to the nearest map feature.
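Here is a small sketch of how a few of these attributes might be computed for one tracked actor. It assumes both tracks are sampled at the same fixed interval `dt` and are already in the map frame. Time-to-collision (TTC) is added as a commonly derived metric and is our assumption rather than something the workflow above prescribes.

```python
import numpy as np

def safety_features(ego_xy, actor_xy, dt):
    """Per-timestep distance, closing speed, and a simple range-rate TTC."""
    ego_xy = np.asarray(ego_xy, dtype=float)
    actor_xy = np.asarray(actor_xy, dtype=float)
    distance = np.linalg.norm(actor_xy - ego_xy, axis=1)
    # Closing speed is positive while the gap to the actor is shrinking.
    closing_speed = -np.gradient(distance, dt)
    with np.errstate(divide="ignore", invalid="ignore"):
        ttc = np.where(closing_speed > 0, distance / closing_speed, np.inf)
    return {"distance": distance, "closing_speed": closing_speed, "ttc": ttc}
```

An ego vehicle approaching a stationary object 50 m ahead at 10 m/s would show a closing speed of about 10 m/s and an initial TTC of about 5 s.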

Scenario Query Definition

Scenario mining requires a structured hierarchy to define which situations count as relevant scenarios. Teams use scenario templates that range from abstract logical definitions to concrete tests. Formal languages like ASAM OpenSCENARIO, M-SDL, and Scenic encode these definitions. These languages capture both static elements (road geometry, signals) and dynamic maneuvers.

Here is a three-level hierarchy to manage the complexity of scenario definitions for perception testing:

- Abstract Scenarios: High-level descriptions using qualitative terms (ego vehicle approaching an intersection with crossing traffic).

- Logical Scenarios: Descriptions using parameter ranges (ego speed between 30–50 km/h, crossing vehicle speed between 20–40 km/h).

- Concrete Scenarios: Precise, executable tests with fixed values (ego speed 40 km/h, crossing speed 30 km/h, gap 15 meters).
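The relationship between the three levels can be sketched with two small data classes. This is plain Python for illustration only and is not OpenSCENARIO, M-SDL, or Scenic syntax. The speed ranges come from the examples above, while the gap range of 10–20 m is a made-up value.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class ConcreteScenario:
    ego_speed_kmh: float
    crossing_speed_kmh: float
    gap_m: float

@dataclass(frozen=True)
class LogicalScenario:
    abstract_description: str   # the qualitative, abstract-level text
    ego_speed_kmh: tuple        # (min, max)
    crossing_speed_kmh: tuple   # (min, max)
    gap_m: tuple                # (min, max), hypothetical range

    def contains(self, c):
        """True if a concrete scenario falls inside every parameter range."""
        pairs = [
            (self.ego_speed_kmh, c.ego_speed_kmh),
            (self.crossing_speed_kmh, c.crossing_speed_kmh),
            (self.gap_m, c.gap_m),
        ]
        return all(lo <= value <= hi for (lo, hi), value in pairs)

    def sample(self, rng):
        """Draw one concrete scenario uniformly from the parameter ranges."""
        return ConcreteScenario(
            rng.uniform(*self.ego_speed_kmh),
            rng.uniform(*self.crossing_speed_kmh),
            rng.uniform(*self.gap_m),
        )

logical = LogicalScenario(
    "ego vehicle approaching an intersection with crossing traffic",
    (30.0, 50.0), (20.0, 40.0), (10.0, 20.0),
)
concrete = ConcreteScenario(ego_speed_kmh=40.0, crossing_speed_kmh=30.0, gap_m=15.0)
```

The same `contains` check works in the mining direction too: a logged interaction whose measured parameters fall inside the ranges is a candidate instance of the logical scenario.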

Scenario Search and Detection

Once queries are defined, the search engine scans the enriched logs to detect instances that satisfy the scenario criteria using a combination of filtering techniques:

- Logical Filtering: First, use the attributes calculated during feature extraction (like velocity and distance thresholds) to filter out irrelevant clips.

- Spatial Filtering using HD Maps: Then use the semantic map layers to segment data by region type, such as intersections vs. highways. For example, if a query is intersection-centric, the system only scans segments tagged with “intersection”.

- Automated Detectors: Apply scenario-specific detectors to the remaining candidate clips. Scenario mining algorithms could be simple rule checks or modern methods like vision-language models (VLMs) that read a scenario description and find matches. Any clip that satisfies a scenario’s conditions is marked as a candidate instance.

For example, consider a query for “Unprotected Left Turns in Occluded Intersections.” The HD map lets the system instantly ignore all highway data and focus only on segments tagged with “Left-Turn Lane” and “Unprotected Signal.” Further, using the map’s geometric layer (which identifies permanent blind spots like bridge pillars or steep hills), the system finds high-risk scenarios where the vehicle must make a turn without a clear line of sight. These scenarios are otherwise invisible in unstructured logs.
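The filtering cascade described above can be sketched as a short loop that applies the cheapest checks first. The `Clip` fields, the tag strings, and the `detector` callback are all hypothetical. In practice the detector could be a rule check or a call into a vision-language model.

```python
from dataclasses import dataclass

@dataclass
class Clip:
    clip_id: str
    map_tags: set        # context tags attached during preprocessing
    min_gap_m: float     # smallest ego-to-actor distance in the clip
    occluded: bool       # stand-in for a geometric-layer occlusion check

def mine(clips, required_tags, max_gap_m, detector):
    """Return the ids of clips that pass the logical, spatial, and detector stages."""
    candidates = []
    for clip in clips:
        if clip.min_gap_m > max_gap_m:          # logical filter on extracted features
            continue
        if not required_tags <= clip.map_tags:  # spatial filter on HD map tags
            continue
        if detector(clip):                      # scenario-specific detector
            candidates.append(clip.clip_id)
    return candidates

clips = [
    Clip("c1", {"intersection", "left_turn_lane"}, 8.0, True),
    Clip("c2", {"highway"}, 5.0, True),
    Clip("c3", {"intersection", "left_turn_lane"}, 40.0, True),
    Clip("c4", {"intersection", "left_turn_lane"}, 8.0, False),
]
hits = mine(clips, {"intersection", "left_turn_lane"}, 15.0, lambda c: c.occluded)
```

Ordering the stages this way keeps the expensive detector off the bulk of the data, since most clips are rejected by the feature thresholds or the map tags.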

Scenario Localization and Extraction

Each detected scenario occurrence must be precisely localized both temporally and spatially. Temporal localization means identifying the exact start and end times of the interaction, often including buffer periods before and after the event to provide context for perception training. Similarly, define the spatial boundaries in map coordinates: extract the map region involved and record the positions of all actors in the HD map frame.

Then, match each tracked object in the extracted clip to its corresponding role as defined in the scenario description, such as whether it is the pedestrian or the lead car. The final step is to extract these specific tracks and their associated attributes for use in the scenario.
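Temporal localization with buffers can be sketched as follows. The code assumes a per-timestep boolean flag that says whether the scenario condition holds, and the 2-second buffers are placeholder values rather than a recommendation.

```python
def localize_events(timestamps, active, pre_buffer=2.0, post_buffer=2.0):
    """Turn runs of active timesteps into (start, end) intervals padded with buffers."""
    if not timestamps:
        return []
    intervals, start, prev_t = [], None, timestamps[0]
    for t, flag in zip(timestamps, active):
        if flag and start is None:
            start = t                       # a new event begins
        elif not flag and start is not None:
            intervals.append((start, prev_t))  # event ended at the previous sample
            start = None
        prev_t = t
    if start is not None:
        intervals.append((start, prev_t))   # event still active at end of log
    lo, hi = timestamps[0], timestamps[-1]
    # Pad each interval, but never beyond the recorded log.
    return [(max(lo, a - pre_buffer), min(hi, b + post_buffer)) for a, b in intervals]
```

If the condition holds from 4 s to 6 s in a 10-second log, the extracted clip would span 2 s to 8 s.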

Validation and Quality Control

Use a human-in-the-loop review to verify extracted scenarios. Automated mining can misfire, so domain experts review each scenario clip to confirm it matches the definition. Also, conduct consistency checks against map semantics and temporal continuity. Ensure that the scenario obeys the map (for example, that a detected “crosswalk” corresponds to an actual zebra crossing in the map) and that the sequence of events is logically coherent.
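Some of these consistency checks are easy to automate before a clip reaches a reviewer. The sketch below shows two such checks. The 0.2-second gap threshold and the feature names are assumptions, and the functions are illustrative rather than part of any particular toolchain.

```python
def check_temporal_continuity(timestamps, max_gap_s=0.2):
    """Report non-increasing timestamps and gaps larger than max_gap_s."""
    issues = []
    for prev, cur in zip(timestamps, timestamps[1:]):
        if cur <= prev:
            issues.append(f"non-increasing timestamp at {cur}")
        elif cur - prev > max_gap_s:
            issues.append(f"gap of {cur - prev:.2f}s before {cur}")
    return issues

def check_map_semantics(required_features, map_features_in_clip):
    """Return the map features the scenario needs but the clip's map region lacks."""
    return sorted(set(required_features) - set(map_features_in_clip))
```

A clip that claims a crosswalk interaction but whose map region contains no crosswalk feature would come back with `["crosswalk"]` and could be routed to a reviewer with that note attached.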

Incorporate feedback to refine both the data and the process. Update scenario labels if the human review finds misclassifications, and refine the automated query rules to reduce future errors.

Dataset Assembly and Annotation

The final stage is to compile the verified scenarios into a structured, high-quality, labeled dataset. For each scenario clip, the dataset must include synchronized sensor data, the relevant HD map segments, and metadata headers (such as scenario ID, type, location, and weather).

Provide detailed visual perception annotations to support training and testing. Label every object in the scenario clip with precise bounding boxes or point clouds, classes, and trajectories relative to the map. Reference the map layers for things like lane boundaries and traffic lights to give complete contextual labels.

Furthermore, tag each scenario with additional attributes, such as the Operational Design Domain (ODD), road type, and environmental conditions. These tags allow stratifying the dataset and enable separate analysis of perception performance in different contexts (highways vs. intersections).
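To show how such tags support stratified analysis, here is a minimal sketch of a scenario metadata record and a per-tag recall calculation. The field names and the detection counts in the usage lines are invented for illustration and do not reflect any real dataset.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, asdict

@dataclass
class ScenarioRecord:
    scenario_id: str
    scenario_type: str
    location: str
    weather: str
    road_type: str
    detected: int       # objects the perception stack found
    ground_truth: int   # annotated objects in the clip

def recall_by(records, key):
    """Aggregate detection recall per value of a metadata tag such as road_type."""
    totals = defaultdict(lambda: [0, 0])
    for r in records:
        bucket = totals[getattr(r, key)]
        bucket[0] += r.detected
        bucket[1] += r.ground_truth
    return {tag: d / g for tag, (d, g) in totals.items() if g}

records = [
    ScenarioRecord("s1", "cut_in", "site_a", "sunny", "highway", 90, 100),
    ScenarioRecord("s2", "cut_in", "site_a", "rainy", "highway", 80, 100),
    ScenarioRecord("s3", "left_turn", "site_b", "rainy", "intersection", 30, 50),
]
header_json = json.dumps(asdict(records[0]))  # metadata header stored with the clip
per_road_type = recall_by(records, "road_type")
```

The same function can be called with `"weather"` or any other tag to see where perception performance drops.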

Simulation and Replay Loop

Import the extracted scenario into a simulator or digital twin of the HD map that provides controlled, repeatable testing conditions. Use the scenario as a seed to create synthetic variations. For example, change weather (sunny vs. rainy), adjust initial speeds, or spawn additional actors to see how robust the perception is.
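Generating variations from a seed scenario can be as simple as taking the Cartesian product of the parameters to vary. The sketch below assumes the seed is a plain dictionary with hypothetical keys. A real simulator would take these values through its own scenario format.

```python
from itertools import product

def make_variations(seed, weather_options, speed_deltas_kmh, extra_actor_counts):
    """Create one variation per combination of weather, speed change, and added actors."""
    variations = []
    for weather, delta, extra in product(weather_options, speed_deltas_kmh, extra_actor_counts):
        variant = dict(seed)  # copy so the seed scenario stays untouched
        variant["weather"] = weather
        variant["ego_speed_kmh"] = seed["ego_speed_kmh"] + delta
        variant["num_actors"] = seed["num_actors"] + extra
        variations.append(variant)
    return variations

seed = {"scenario_id": "s1", "weather": "sunny", "ego_speed_kmh": 40.0, "num_actors": 3}
variants = make_variations(seed, ["sunny", "rainy"], [-10.0, 0.0, 10.0], [0, 2])
```

Two weather options, three speed changes, and two actor counts yield twelve variations from a single mined clip, one of which reproduces the original conditions as a baseline.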

Feed simulation results back into the mining process. If a perception failure is observed in the simulator, define new scenario queries to capture that pattern more broadly in the logs. Also, refine the map annotation if the simulation reveals missing map semantics.

How iMerit Supports HD Mapping and Scenario Mining

Implementing scenario mining at scale requires expertise in HD mapping, sensor data processing, and quality validation. We at iMerit provide human-assisted data services that improve the scenario mining workflow. Our teams of 1,100 data annotators bring domain expertise in mapping and perception to ensure high-precision data and robust coverage of edge cases.

Key iMerit capabilities include:

High-Precision HD Mapping and Semantic Curation

iMerit helps process static environment and real-world information into highly accurate map layers. This includes:

- 2D Lane Level Annotations: Mapping lane geometry, markings, and attributes with centimeter-level precision.

- 3D Localization: Labeling semantic relationships and object segmentations in 3D space.

- Multi-Sensor Fusion: Synchronizing 2D imagery and 3D LiDAR point clouds within a common coordinate system.

Scenario Hunting and Edge Case Management

iMerit experts help curate the long tail of driving data through scenario hunting. They find and document every unusual incident they come across so that teams can prepare for similar situations in the future. This human-in-the-loop (HITL) approach ensures that cases such as unexpected construction obstacles or pedestrians appearing out of nowhere are accurately labeled and integrated into the training pipeline.

A notable example is a global robotaxi company’s collaboration with iMerit to improve the quality of its ground truth data. Previously, the company used large-scale auto-labeling but found the quality insufficient for safe deployment.

iMerit implemented a collaborative, human-in-the-loop workflow. The iMerit team worked on full-scene semantic segmentation and mapping scenarios to find any anomalies in the data. This process included two rounds of quality checks and weekly calibrations with the robotaxi company’s engineering team to align on taxonomy and attribute definitions. As a result, the robotaxi company achieved 95% annotation accuracy on the mined scenarios and a 250% efficiency improvement over its previous labeling process.

Conclusion

Safety-critical perception testing for AVs requires smart data mining. HD maps enable precise, semantic filters that let developers pinpoint rare events in vast logs. While autonomous vehicles are at the forefront, HD-map–based scenario mining is equally crucial for stacks that operate in mapped environments, such as ADAS, warehouse robotics, and autonomous logistics.

With the right process and tools, emergency situations can be systematically captured and tested without waiting for them to recur in live operations.

Building such a pipeline involves both advanced software (for map matching and scenario detection) and meticulous data annotation. But experts like iMerit can speed up the progress and deliver high-precision HD map layers, maintain up-to-date road rules, and apply human expertise to cover all of the safety-critical scenarios.

Are you looking for data experts to advance your HD mapping project? Here is how iMerit can help.