LiDAR & 3D Point Cloud Annotation for a Leading Autonomous Vehicle Company

A leading autonomous vehicle (AV) company was looking to build a highly precise, secure, and reliable system for autonomous vehicle movement that could accurately interpret information from multiple sensors, such as navigation systems, vision modules, LiDAR, and radar. Successfully developing and maintaining a robust 3D perception stack would give the company accurate depth estimation, object detection, and scene representation.

Challenge

However, the performance of a 3D perception system depends on the quality of the available data and its associated labels and annotations. The company partnered with iMerit for LiDAR annotation across 2D image space and 3D point clouds to support its growing training data needs. Here is what the AV company was looking for:

- Targets of interest in 2D/3D space

- Lane markings and road boundaries in 2D/3D space

- Annotation of 2D traffic signs in camera frames

- Annotation of lanes and boundaries in camera frames

- Annotation of targets in LiDAR frames

Solution

RAMPING UP THE TEAM FOR 2D/3D SENSOR FUSION

As with our other client engagements, this project began with requirement gathering, followed by a skill and system assessment. Our 3D sensor fusion and LiDAR experts selected annotators who scored at least 80% on our internal assessment tests of annotation expertise. Our annotators undergo a rigorous training program before they can start working on any client project:

- Level 1 covers basic road rules and in-house tool training in a 5-day program.

- Level 2 covers GIS, mapping, and semantic segmentation in another week of training.

- Level 3 covers LiDAR annotation and simulations, which takes another week to complete.

After completing this training program with a score of at least 80%, annotators take a Client Assessment Test/Fitment Assessment for the project.

For this engagement, the annotators also completed client tool training, since they would be using the AV company's proprietary annotation tool.

Result

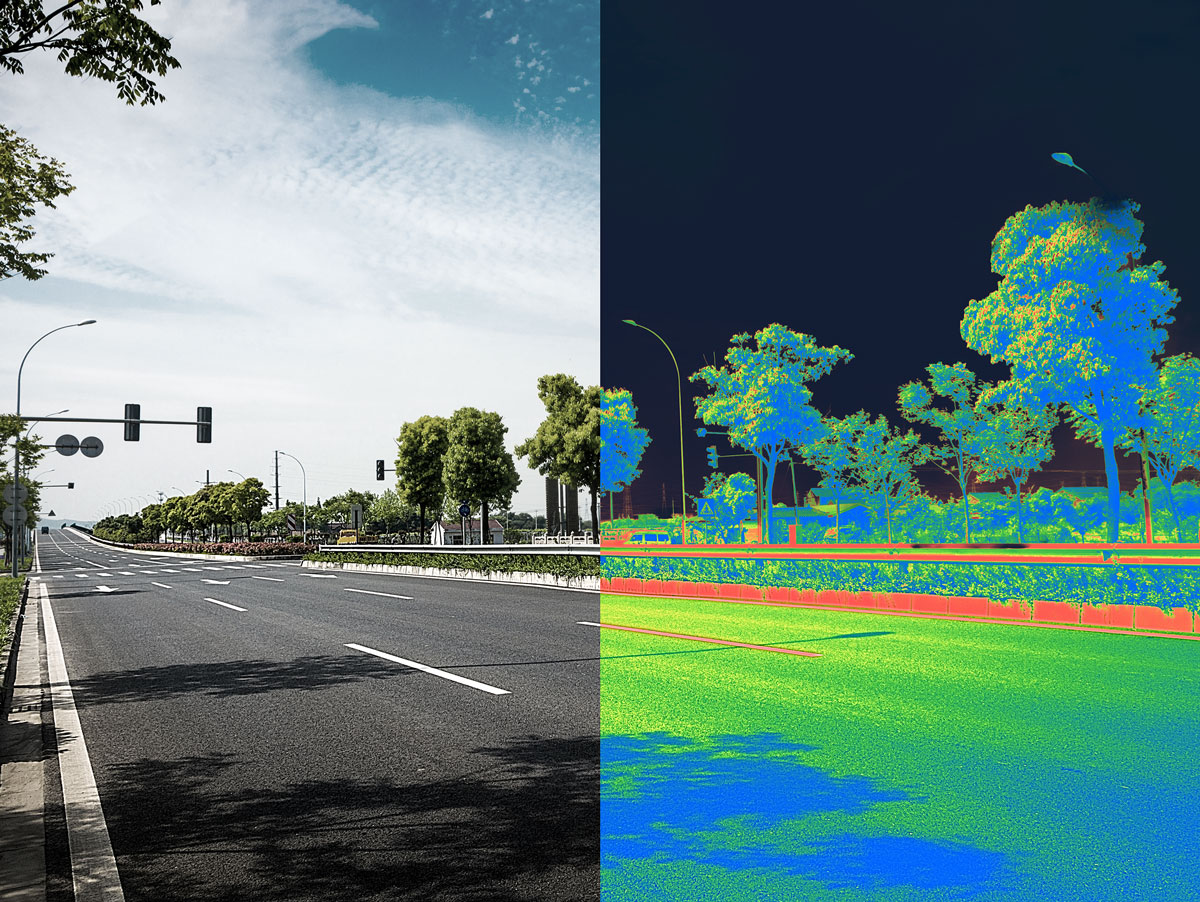

GROUND TRUTH FROM LiDAR ANNOTATION

iMerit adopted a two-stage approach to address the AV company's data challenges.

Data Pre-selection and Curation

iMerit's solution architects and expert-in-the-loop team selected the best available data to ensure the highest data quality, richness, and diversity. Data pre-selection and curation ensured:

- Image quality (limited occlusions, no water droplets, etc.)

- Diverse road types, weather, lighting conditions, reflections, and road geometry

Annotation and Attribution

Our annotators reviewed the annotation guidelines in detail and refined them as necessary.

With 2-5 years of experience in annotation and attribution on 2D images, the team could accurately demarcate:

- Raised boundaries (barriers, speed bumps)

- Crosswalks + attribution (occlusions, lane visibility)

- Road surface + attribution (asphalt, gravel, dirt, dry, wet, snow, icy)

With iMerit's human-in-the-loop workflows, the team completed labeling and attribution of cars, pedestrians, poles, signs, and barriers in 3D LiDAR scenes.