Considering that 80% of the work required for an AI project is collecting and preparing data, determining how much data you will need is a critical first step to correctly estimate the effort and cost for the whole project. Rather than rushing to the common conclusion that ‘it depends’, we have put together a list of general recommendations from industry experts to kickstart your project.

Making Educated Guesses

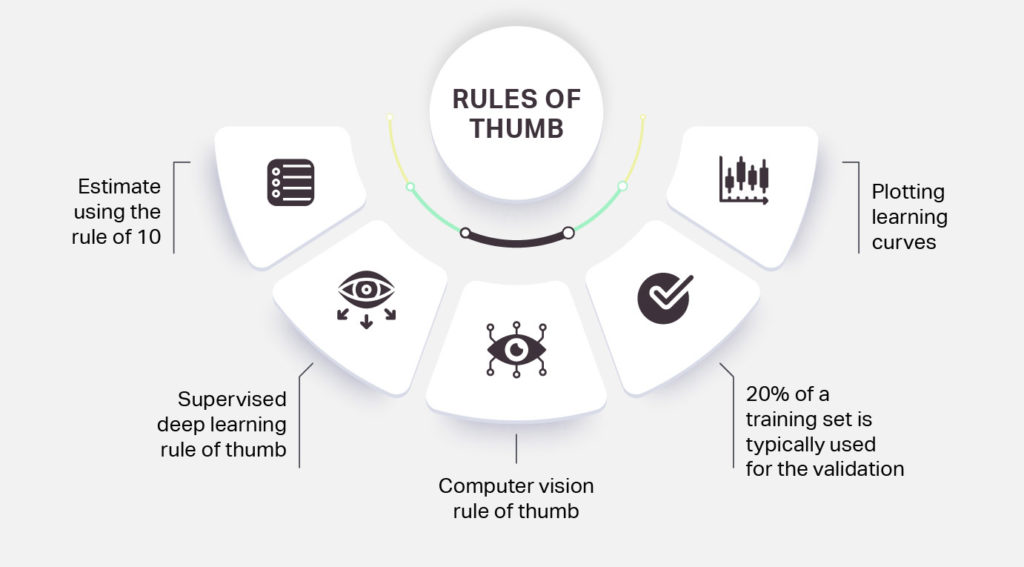

While there are no hard and fast rules for a minimum or recommended amount of data, you can arrive at a workable starting estimate by applying the following rules of thumb:

1. Estimate using the rule of 10: For an initial estimate of the amount of data required, you can apply the rule of 10, which recommends that the number of training examples be 10 times the number of parameters – or degrees of freedom – in the model. The rule is meant to ensure there are enough examples to cover the range of outputs the model can produce when its parameters are combined.

2. Supervised deep learning rule of thumb: In their book Deep Learning, Goodfellow, Bengio and Courville suggest that around 5,000 labeled examples per category is generally enough for a supervised deep learning algorithm to achieve acceptable performance. To match or exceed human performance, they recommend a dataset of at least 10 million labeled examples.

3. Computer vision rule of thumb: When using deep learning for image classification, a good baseline to start from is 1,000 images per class. Pete Warden analyzed entries in the ImageNet classification challenge, where the dataset had 1,000 categories, each with slightly fewer than 1,000 images. The dataset was large enough to train early generations of image classifiers like AlexNet, so he concluded that roughly 1,000 images per class is a good baseline for computer vision algorithms.

4. Use about 20% of the training data for validation: Another recommendation from the Deep Learning book is to use about 80% of the data for training and 20% for validation. The validation set is the subset of data used to guide the selection of hyperparameters. Applied to our context: if you have carried out a successful validation or proof of concept of your algorithm, we suggest quadrupling the amount of data you used there when you develop your final product.

5. Plotting learning curves: To judge whether your machine learning algorithm would benefit from more data, plot a learning curve of the success rate against the sample size. If the algorithm was trained adequately, the graph will resemble a log function. If the last two points plotted at your current sample size still show a positive slope, increasing the dataset should improve the success rate. As the slope approaches zero, adding more data is unlikely to help.
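The arithmetic behind rules 1, 4 and 5 can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive method: the function names, the `min_gain_per_1000` threshold and the example numbers are all assumptions made up for this sketch, not values from the sources cited above.

```python
def rule_of_ten(num_parameters):
    """Rule of 10: roughly ten training examples per model parameter."""
    return 10 * num_parameters


def train_validation_sizes(num_examples, validation_fraction=0.2):
    """Split a dataset size into (training, validation) counts, 80/20 by default."""
    num_validation = round(num_examples * validation_fraction)
    return num_examples - num_validation, num_validation


def last_slope(sample_sizes, success_rates):
    """Slope between the last two points of a learning curve."""
    dx = sample_sizes[-1] - sample_sizes[-2]
    dy = success_rates[-1] - success_rates[-2]
    return dy / dx


def more_data_likely_helps(sample_sizes, success_rates, min_gain_per_1000=0.001):
    """True if the last curve segment still gains at least
    min_gain_per_1000 in success rate per 1,000 extra examples.
    The threshold is a hypothetical cut-off; pick one that suits your project."""
    return last_slope(sample_sizes, success_rates) * 1000 >= min_gain_per_1000


# Hypothetical learning curve: success rate measured at four sample sizes.
sizes = [1000, 2000, 4000, 8000]
still_rising = [0.70, 0.78, 0.83, 0.85]
flattened = [0.70, 0.78, 0.83, 0.8302]

print(rule_of_ten(1500))                 # data estimate for a 1,500-parameter model
print(train_validation_sizes(10000))     # training and validation counts
print(more_data_likely_helps(sizes, still_rising))
print(more_data_likely_helps(sizes, flattened))
```

In practice the success rates would come from retraining the model on progressively larger subsets of your data. The slope check only formalizes the visual test described in rule 5.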

The dangers of too little training data

While it may seem obvious to collect enough diverse, high-quality data to train your model, even some of the largest players in the industry fail to do so. Perhaps one of the most expensive and well-covered AI failures is the IBM Watson and University of Texas MD Anderson Cancer Center’s Oncology Expert Advisor system. The system was designed with the ambitious scope to ‘eradicate cancer’, with the first step being to ‘uncover valuable information for the cancer centre’s rich patient and research database’.

Unfortunately, the product ended up costing $62 million, only to be cancelled after the AI algorithm recommended unsafe and dangerous treatments. The main cause was that Watson was trained on a small and narrow dataset, which led it to overlook other significant manifestations in cancer patients. On natural language processing tasks, the system scored 90 to 96 percent accuracy when dealing with clear concepts like diagnosis, but only 63 to 65 percent for time-dependent information like therapy timelines.

Amazon’s Rekognition had a similar failure. This computer vision system is used by multiple government agencies, including US Immigration, to detect and analyze faces. When Rekognition was tested against a database of 25,000 publicly available arrest photos, the software incorrectly matched 28 members of Congress with people who had been arrested for a crime. Of these false matches, 40% were people of color, even though people of color make up only 20% of Congress.

What to do if you need more datasets

To prevent these types of failures, we recommend the following methods for enlarging datasets:

1. Open Datasets: These sources are maintained by reputable institutions and can be incorporated into your project wherever you find relevant data:

- Google Public Data Explorer – Aggregates data from multiple reputable sources and provides visualization tools with a time dimension.

- Registry of Open Data on AWS (RODA) – This repository contains public datasets hosted on AWS from contributors such as Allen Institute for Artificial Intelligence (AI2), Digital Earth Africa, Facebook Data for Good, NASA Space Act Agreement, NIH STRIDES, NOAA Big Data Program, Space Telescope Science Institute, and Amazon Sustainability Data Initiative.

- DBpedia – DBpedia is a crowd-sourced community effort to extract structured content from the information created in various Wikimedia projects. There are around 4.58 million entities in the DBpedia dataset. Of these, 4.22 million are classified in its ontology, including 1,445,000 persons, 735,000 places, 123,000 music albums, 87,000 films, 19,000 video games, 241,000 organizations, 251,000 species and 6,000 diseases.

- European Union Open Data Portal – Contains EU-related data for domains such as economy, employment, science, environment, and education. It is extensively used by European agencies, including EuroStat.

- Data.gov – The US government provides open data on topics such as agriculture, climate, energy, local government, maritime, ocean and elderly health.

2. Synthetic Data – Synthetic data is generated data that has the same characteristics and schema as ‘real’ data. It is particularly useful in the context of transfer learning, where a model can be trained on synthetic data and then re-trained on real-world data. A good example is computer vision for self-driving algorithms: a self-driving AI system can be taught to recognize objects and navigate a simulated environment built with a video game engine. Advantages of synthetic data include efficient production once the synthetic environment is defined, perfectly accurate labels on the generated data, and the absence of sensitive information such as personal data. Synthetic Minority Over-sampling Technique (SMOTE) is another technique for extending an existing dataset. SMOTE randomly selects a data point from the minority class, finds its nearest neighbours, and randomly selects one of them. The new data point is then synthesised at a random position on the straight line between the two selected points.

3. Augmented Data – Data augmentation applies transformations to an existing dataset to produce new training examples. The clearest example is in computer vision applications, where images can be transformed with a variety of operations, including rotating, cropping, flipping, color shifting, and more.
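The SMOTE interpolation step and two of the image transformations mentioned above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not a production implementation: the function names, the default `k=3` and the toy data are hypothetical, points are plain lists of floats, and images are nested lists of pixel values. Real projects would more likely rely on an established library.

```python
import math
import random


def smote_sample(minority, k=3, rng=random):
    """Create one synthetic point on the segment between a random
    minority-class point and one of its k nearest neighbours."""
    base = rng.choice(minority)
    # Candidate neighbours: every other minority point, nearest first.
    others = [p for p in minority if p is not base]
    neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
    neighbour = rng.choice(neighbours)
    t = rng.random()  # random position along the segment, in [0, 1)
    return [b + t * (n - b) for b, n in zip(base, neighbour)]


def flip_horizontal(image):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate an image (list of pixel rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


# Hypothetical minority class with four 2-D points.
minority_points = [[1.0, 1.0], [1.0, 2.0], [2.0, 1.0], [2.0, 2.0]]
print(smote_sample(minority_points, rng=random.Random(0)))

# Hypothetical 2x2 grayscale image.
tiny_image = [[1, 2], [3, 4]]
print(flip_horizontal(tiny_image))
print(rotate_90_clockwise(tiny_image))
```

Because the synthetic point always lies between two existing minority points, it stays inside the region the minority class already occupies. The flipped and rotated images keep their original labels, which is what makes augmentation a cheap way to enlarge a labeled dataset.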

Conclusion

Every AI project begins with a guess. How well you educate that guess can be the difference between a successful outcome and a costly one. If you’re still struggling to get results from your model after applying some of these methods, speak with an iMerit expert today to learn more about how we’ve solved problems like this in the past.