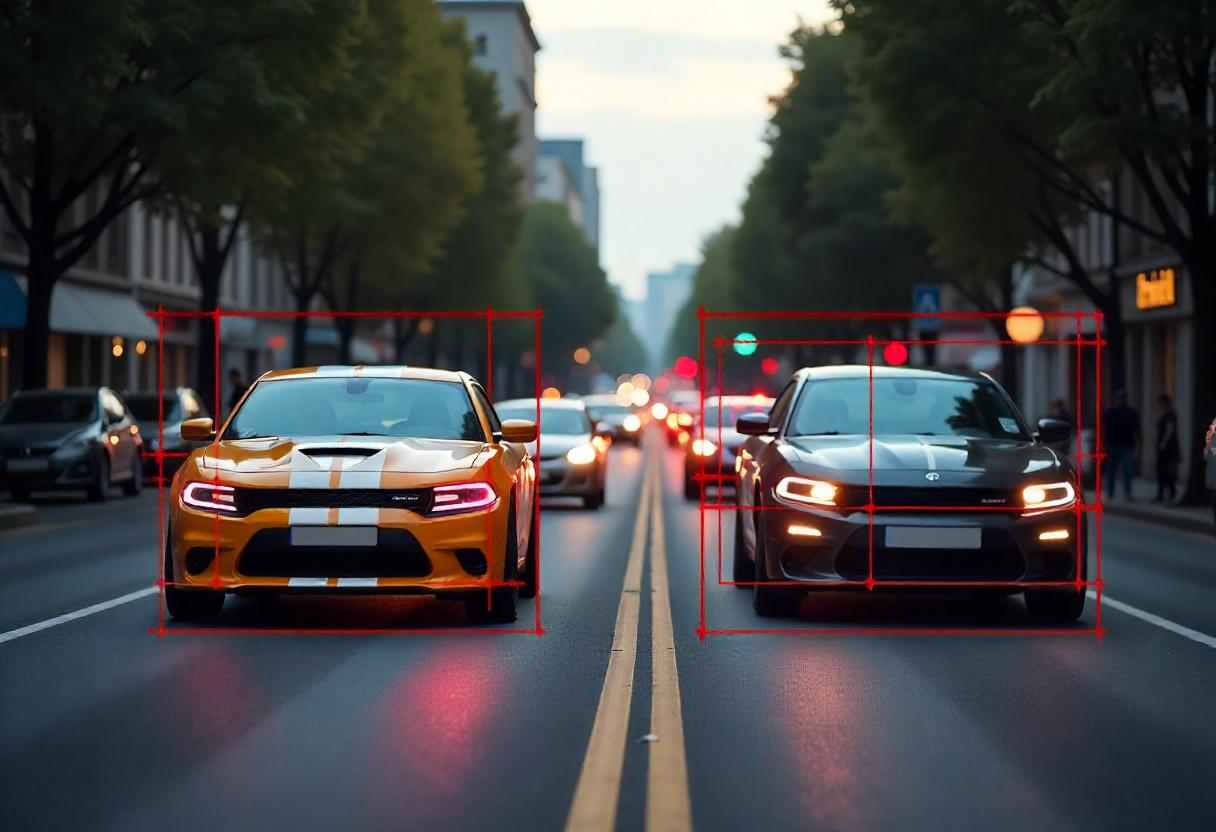

Autonomous vehicles might make up 25% of all cars by 2035. These vehicles could produce up to 4 terabytes of data every day. This highlights why sensor fusion is so important. Autonomous vehicles combine data from LiDAR, cameras, and other sensors through multi-sensor fusion to improve navigation accuracy and scene perception.

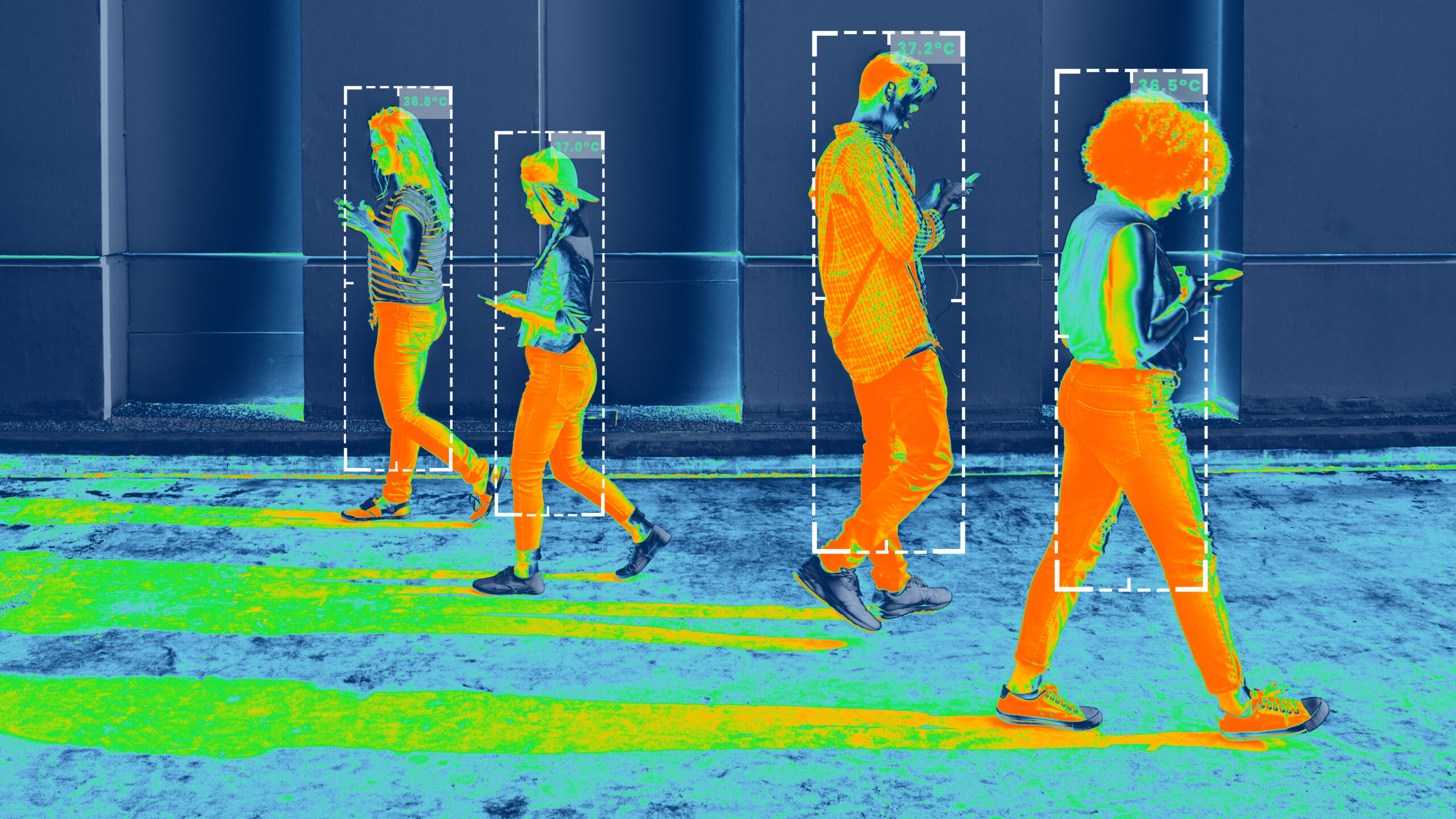

Each sensor input comes with noise and errors. For example, glare can blind a camera, or radar may pick up false reflections. These sensor uncertainties can cause unreliable object detection and positioning. Managing this uncertainty is essential for safety and performance.

Bayesian approaches provide a solid way of dealing with this uncertainty. Sensor data fusion can be seen as a probability-based inference problem. This blog will discuss how Bayesian methods help create stronger object detection and localization in a multi-sensor configuration.

We will also discuss case studies that show how good data labeling helps to power these advanced Bayesian sensor fusion systems. iMerit’s work on precise sensor data annotation plays a key role in developing uncertainty-aware, high-performing multi-sensor fusion systems.

Understanding Uncertainty in Multi-Sensor Systems

Uncertainty arises whenever a system works with noisy or incomplete information. No sensor produces perfectly accurate data, and no model is perfect either.

Types of Uncertainty

Identifying different types of uncertainty helps make systems safer. Aleatoric Uncertainty and Epistemic Uncertainty are the main types in sensor fusion. Let’s take a closer look at them:

| Aspect | Aleatoric Uncertainty | Epistemic Uncertainty |
|---|---|---|
| Definition | Uncertainty caused by random variation in sensor readings. | Uncertainty caused by limited information, missing data, or imperfect models. |
| Cause | Sensors or the environment produce random effects such as sensor noise, measurement errors, or motion blur. | The system has not seen specific situations, objects, or areas before. |
| Examples | Blur from camera movement, signal noise in radar, and image sensor quantization errors. | A drone encountering a new type of object or a self-driving car driving in unfamiliar weather conditions. |
| Can it be reduced? | No, since it is inherent to the measurement process. Collecting more training data won’t remove it. | Yes. Data collection, better model development, and training in other environments can help. |
| In Sensor Fusion | Even if sensor fusion is perfect, a small amount of noise comes from every sensor. | Fusion systems can be enhanced by adding new data or adjusting their models when introducing different sensors or environments. |

Understanding aleatoric and epistemic uncertainty allows the design of sensor fusion systems to manage unpredictable noise and missing data. This makes machines safer and more reliable.

Sources of Uncertainty in Sensor Fusion

Even the most advanced systems face uncertainties that can degrade object detection, tracking, and decision-making. Let’s look at the main reasons why uncertainty occurs in sensor fusion systems.

Sensor Noise and Bias

Every sensor, including cameras, radars, and LiDARs, introduces measurement noise. The noise is unpredictable and random, similar to a photo blurred by motion or a radar signal that keeps changing due to interference.

Bias, on the other hand, shifts all measurements in the same direction. Random noise is inherent to the sensor, so even the best models can’t eliminate it. A stable bias can often be reduced through calibration, but it is rarely removed entirely.
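
The difference between noise and bias can be illustrated with a small simulation. This is only a sketch with made-up numbers: the function `simulate_readings`, the 0.5 m bias, and the 0.3 m noise level are assumptions chosen for illustration, not values from any real sensor. Averaging many readings shrinks the effect of random noise on the mean, but a constant bias survives the averaging.

```python
import random
import statistics

def simulate_readings(true_value, bias, noise_std, n, seed=0):
    """Return n simulated readings: true value + constant bias + zero-mean Gaussian noise."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0.0, noise_std) for _ in range(n)]

true_range_m = 20.0  # hypothetical true distance to an object
readings = simulate_readings(true_range_m, bias=0.5, noise_std=0.3, n=5000)

# The average error stays close to the bias, no matter how many readings we take.
mean_error = statistics.fmean(readings) - true_range_m
# The spread of individual readings stays close to the noise level.
spread = statistics.stdev(readings)

print(f"mean error: {mean_error:.3f} m, spread: {spread:.3f} m")
```

In a filter, the spread is what typically goes into the measurement noise variance, while the mean error is something a calibration step would try to estimate and subtract.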

Temporal and Spatial Misalignment

Temporal and spatial mismatch is also a significant source of uncertainty. Different types of sensors can take measurements at various times and may not be situated together. A camera may capture a picture at one time, while a radar scan takes place just a few milliseconds later.

The data won’t be properly aligned when these differences are not considered. As a result, the object’s position may vary slightly between sensors, making it tough for the fusion system to accurately locate the object.
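
One simple way to reduce temporal misalignment is to interpolate one sensor's track to the other sensor's timestamp before fusing. The sketch below assumes the object moves roughly linearly between two radar scans; the helper `interpolate_position` and all timestamps and positions are hypothetical.

```python
def interpolate_position(t_query, t0, p0, t1, p1):
    """Linearly interpolate a position to time t_query between two timestamped samples."""
    if t1 <= t0:
        raise ValueError("t1 must be later than t0")
    if not (t0 <= t_query <= t1):
        raise ValueError("t_query must lie between t0 and t1")
    alpha = (t_query - t0) / (t1 - t0)  # 0 at t0, 1 at t1
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))

# Hypothetical radar samples: time in seconds, (x, y) position in metres.
radar_t0, radar_p0 = 0.000, (10.0, 2.0)
radar_t1, radar_p1 = 0.050, (11.0, 2.0)
camera_time = 0.020  # camera frame captured between the two radar scans

radar_at_camera_time = interpolate_position(
    camera_time, radar_t0, radar_p0, radar_t1, radar_p1
)
print(radar_at_camera_time)  # roughly (10.4, 2.0)
```

Spatial misalignment is handled separately, by transforming every measurement into a shared coordinate frame using the calibrated sensor poses.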

Data Association Errors

When several objects move through a scene at once, the system must decide which measurement belongs to which object. If measurements are matched to the wrong object, tracking and decision-making both suffer. The problem is hardest when sensors have different resolutions or when objects are close together in the same field of view.
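
A minimal sketch of data association is greedy nearest-neighbour matching with a distance gate. The function `associate`, the 2 m gate, and the coordinates are assumptions for illustration; practical trackers commonly use a statistical distance that accounts for covariance and a globally optimal assignment instead of a greedy one.

```python
import math

def associate(tracks, detections, gate_m=2.0):
    """Greedy nearest-neighbour association with a distance gate.

    Returns a dict mapping track index -> detection index. Pairs farther
    apart than gate_m are never matched, so distant clutter is ignored.
    """
    candidates = []
    for ti, track in enumerate(tracks):
        for di, det in enumerate(detections):
            dist = math.dist(track, det)
            if dist <= gate_m:
                candidates.append((dist, ti, di))
    candidates.sort()  # closest pairs are matched first

    matches, used_detections = {}, set()
    for _, ti, di in candidates:
        if ti not in matches and di not in used_detections:
            matches[ti] = di
            used_detections.add(di)
    return matches

tracks = [(0.0, 0.0), (5.0, 5.0)]                       # predicted object positions
detections = [(5.2, 4.9), (0.3, -0.1), (30.0, 30.0)]    # last one is far-away clutter
matches = associate(tracks, detections)
print(matches)
```

Here track 0 is matched to detection 1 and track 1 to detection 0, while the detection at (30, 30) falls outside every gate and stays unassigned.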

To build sensor fusion systems that work well, it is necessary to understand these sources of uncertainty.

Fundamentals of Bayesian Inference in Sensor Fusion

Bayesian inference gives us a mathematical way to update predictions when new information becomes available.

Bayesian Framework

The main elements of this framework include:

- Prior: The prior represents an initial guess of an object’s state along with some uncertainty. For example, a drone may start from an estimate of its location and the wind speed, based on GPS information, before its other sensors report.

- Likelihood: Each sensor has its own measurement model that describes how likely a reading is for a given state. Multi-sensor fusion commonly assumes that sensor errors are independent given the true state, which allows the likelihoods from each source to be multiplied together.

- Posterior Estimation: According to Bayes’ rule, the posterior is proportional to the product of the prior and the likelihood. Normalizing this product so the probabilities sum to one results in a more accurate estimate of the state, along with updated uncertainty.
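
The prior, likelihood, and posterior steps can be sketched for the simplest case: a one-dimensional position with Gaussian beliefs. Multiplying two Gaussians and renormalizing gives another Gaussian whose precision (inverse variance) is the sum of the two precisions. The function `fuse_gaussian` and every number below are illustrative assumptions, and the sensor errors are assumed independent.

```python
def fuse_gaussian(mean_a, var_a, mean_b, var_b):
    """Multiply two 1D Gaussians and renormalize; returns (mean, variance)."""
    precision = 1.0 / var_a + 1.0 / var_b
    var = 1.0 / precision
    mean = var * (mean_a / var_a + mean_b / var_b)  # precision-weighted average
    return mean, var

prior = (10.0, 4.0)   # mean in metres, variance in m^2 (hypothetical)
lidar = (12.0, 1.0)   # LiDAR measurement and its noise variance
radar = (11.0, 2.0)   # radar measurement and its noise variance

# Apply the two likelihoods one after the other; the order does not matter.
posterior = fuse_gaussian(*prior, *lidar)
posterior = fuse_gaussian(*posterior, *radar)

post_mean, post_var = posterior
print(f"posterior mean: {post_mean:.3f} m, variance: {post_var:.3f} m^2")
```

The fused variance is smaller than that of the prior or either sensor alone, and the fused mean sits closest to the most precise source.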

Advantages

Bayesian inference is highly effective for sensor fusion, especially when detecting and locating objects. Let’s look at these benefits:

- Quantitative Uncertainty Representation: Every estimate is accompanied by a confidence level. This helps the system avoid being too sure, which can cause errors if the sensor data is unreliable or unavailable.

- Flexibility in Integrating Diverse Sensor Modalities: Bayesian inference is adaptable. It can merge information from various sensors, even if the sensor type differs. This fusion makes the system more precise and robust.

- Dynamic Model Updating: With each new piece of data, the system adjusts its worldview. This is known as dynamic model updating, and it ensures the system remains accurate even when things change.

- Robust Decision-Making Support: Bayesian inference allows machines to make more accurate decisions by integrating past data, sensor information, and confidence levels. This helps systems to reason under uncertainty, especially in dynamic environments.

Bayesian Techniques for Object Detection and Localization

Bayesian techniques help machines detect objects, estimate movement, and predict outcomes even with missing information. They combine probability, math, and sensor data to make well-informed guesses.

Kalman Filters

Kalman Filters combine a motion model’s predictions with sensor data to estimate an object’s location and motion. These filters update their estimates each time new data is introduced into the system, which is helpful for cars, planes, or robots. Common variants include:

- Standard Kalman Filter: The Standard Kalman Filter is designed for simple, linear movement that combines information from the model and sensor. It functions poorly when the path is non-linear or unpredictable.

- Extended Kalman Filter (EKF): The EKF handles non-linear systems by linearizing them. At each step, it approximates the non-linear motion and measurement models with straight-line (first-order) approximations around the current estimate. This helps track a car turning, though it may struggle with sudden or highly non-linear movements.

- Unscented Kalman Filter (UKF): This algorithm relies on deterministic sampling. It passes a small set of carefully chosen points (called sigma points) through the non-linear model and rebuilds the mean and covariance from the results. As a result, it can often follow objects that speed up or change direction quickly more accurately than an EKF.
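
To make the predict and update cycle concrete, here is a minimal sketch of a standard (linear) Kalman filter that tracks position and velocity from noisy position measurements. It assumes a constant-velocity model in one dimension, writes out the 2x2 matrix algebra by hand to avoid dependencies, and uses made-up noise values; `kf_predict` and `kf_update` are hypothetical helper names.

```python
import random

def kf_predict(state, cov, dt, q_pos, q_vel):
    """Predict step for a constant-velocity model. cov holds (P_pp, P_pv, P_vv)."""
    p, v = state
    ppp, ppv, pvv = cov
    new_state = (p + dt * v, v)
    new_cov = (
        ppp + 2 * dt * ppv + dt * dt * pvv + q_pos,  # position variance grows
        ppv + dt * pvv,
        pvv + q_vel,
    )
    return new_state, new_cov

def kf_update(state, cov, z, r):
    """Update step for a position-only measurement z with noise variance r."""
    p, v = state
    ppp, ppv, pvv = cov
    s = ppp + r                       # innovation variance
    k_pos, k_vel = ppp / s, ppv / s   # Kalman gain
    residual = z - p
    new_state = (p + k_pos * residual, v + k_vel * residual)
    new_cov = ((1 - k_pos) * ppp, (1 - k_pos) * ppv, pvv - k_vel * ppv)
    return new_state, new_cov

rng = random.Random(1)
dt, true_vel, meas_std = 0.1, 2.0, 0.5   # hypothetical values
state, cov = (0.0, 0.0), (10.0, 0.0, 10.0)  # vague initial belief

for step in range(1, 201):
    true_pos = true_vel * step * dt
    z = true_pos + rng.gauss(0.0, meas_std)          # noisy position reading
    state, cov = kf_predict(state, cov, dt, q_pos=1e-4, q_vel=1e-4)
    state, cov = kf_update(state, cov, z, r=meas_std ** 2)

print(f"position: {state[0]:.2f} m, velocity: {state[1]:.2f} m/s")
```

The filter never measures velocity directly, yet it recovers it from the sequence of positions. An EKF or UKF keeps this same two-step structure and only changes how the non-linear models are propagated.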

Human-in-the-Loop (HITL) in Bayesian Sensor Fusion

HITL uses a combination of human feedback and Bayesian models to make better predictions in uncertain conditions. Experts provide feedback, annotate the data, and monitor in real-time to direct the probabilistic models. This allows systems to manage uncertain situations, respond to changes, and make accurate predictions.

Uses of HITL in Bayesian Sensor Fusion

- Robotics: Bayesian models combine EMG signals, motion information, and human input to improve control settings in real-time. Human input improves assumptions when sensor data is noisy, enhancing prediction accuracy.

- Fault Diagnostics: Bayesian networks connect sensor data with expert-annotated failure patterns to diagnose and predict faults under uncertainty. This allows the system to learn from human insights, such as unexpected correlations or model failures.

- Experimentation: Bayesian optimization relies on probabilistic models to explore large experimental spaces, balancing exploration and exploitation. HITL adds human feedback to guide the optimization process, refining the search.

Particle Filters

Particle Filters, also known as Sequential Monte Carlo Methods, are advanced techniques used to estimate the state of systems that are non-linear and have non-Gaussian noise. Instead of using a mean and variance to show uncertainty, Particle Filters use a set of particles with their weights.

They estimate the system’s state by moving and resampling particles using sensor data and models. As soon as new data arrives, particles are updated, making them suitable for live applications in changing circumstances.
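
The move, weight, and resample loop can be sketched as a bootstrap particle filter. The setup is hypothetical: an object moves along a line while a sensor mounted 5 m to the side reports its range, which is a non-linear function of position. The function name, noise levels, and particle count are assumptions for illustration.

```python
import math
import random

def particle_filter_step(particles, control, z, rng,
                         motion_std=0.2, meas_std=0.3, offset=5.0):
    """One predict / weight / resample cycle of a bootstrap particle filter."""
    # 1. Predict: move every particle with the motion model plus random noise.
    moved = [x + control + rng.gauss(0.0, motion_std) for x in particles]

    # 2. Weight: score each particle by the likelihood of the range reading z.
    weights = []
    for x in moved:
        expected = math.hypot(x, offset)  # non-linear measurement model
        weights.append(math.exp(-0.5 * ((z - expected) / meas_std) ** 2))
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # all particles implausible: fall back to uniform

    # 3. Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))

rng = random.Random(42)
particles = [rng.uniform(0.0, 20.0) for _ in range(2000)]  # vague initial belief
true_x = 2.0

for _ in range(15):
    true_x += 1.0                                        # object moves 1 m per step
    z = math.hypot(true_x, 5.0) + rng.gauss(0.0, 0.3)    # noisy range reading
    particles = particle_filter_step(particles, 1.0, z, rng)

estimate = sum(particles) / len(particles)
print(f"true position: {true_x:.1f} m, estimate: {estimate:.2f} m")
```

Because the belief is a cloud of samples and not a single mean and variance, the same code would also cope with multi-modal beliefs, at the cost of more computation than a Kalman filter.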

Bayesian Networks

Bayesian Networks are probabilistic graphical models that represent conditional dependencies between variables. These networks act as guides that explain the connections between different items, including sensor readings and the environment.

Autonomous vehicles interpret sensor data using Bayesian networks. This allows them to anticipate moving obstacles quickly and adjust their routes for safer, more efficient travel.
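
A toy Bayesian network shows how such dependencies change the conclusion drawn from the same sensor readings. In this sketch, an obstacle influences both camera and radar detections, and fog additionally degrades the camera. The network structure and every probability are invented for illustration.

```python
# Hypothetical conditional probability tables.
P_OBSTACLE = 0.1
# P(camera detects | obstacle present, fog present)
P_CAMERA = {
    (True, False): 0.95, (True, True): 0.40,
    (False, False): 0.05, (False, True): 0.05,
}
# P(radar detects | obstacle present)
P_RADAR = {True: 0.90, False: 0.10}

def bernoulli(p_true, value):
    """Probability of a boolean value given the probability that it is True."""
    return p_true if value else 1.0 - p_true

def posterior_obstacle(camera, radar, fog):
    """P(obstacle | camera, radar, fog) by enumerating both obstacle states."""
    joint = {}
    for obstacle in (True, False):
        joint[obstacle] = (
            bernoulli(P_OBSTACLE, obstacle)
            * bernoulli(P_CAMERA[(obstacle, fog)], camera)
            * bernoulli(P_RADAR[obstacle], radar)
        )
    return joint[True] / (joint[True] + joint[False])

# Same readings (camera sees nothing, radar reports a detection), different weather.
p_clear = posterior_obstacle(camera=False, radar=True, fog=False)
p_fog = posterior_obstacle(camera=False, radar=True, fog=True)
print(f"clear: {p_clear:.3f}, fog: {p_fog:.3f}")
```

With these numbers, a missed camera detection is strong evidence against an obstacle in clear weather, but much weaker evidence in fog, so the radar detection carries more weight there.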

Gaussian Processes

Gaussian Processes are a type of non-parametric Bayesian model. These processes model relationships between inputs and outputs, capturing uncertainty in spatial or temporal patterns. Each prediction comes with a variance, so the model shows where it is confident and where data is sparse.

For instance, a robot can estimate an object’s location in all three dimensions, even if some data is not available. These Bayesian methods allow machines to detect and localize objects more accurately, even if the data is uncertain or noisy.
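
The way a Gaussian Process reports higher uncertainty where data is missing can be sketched in one dimension. This example assumes a zero-mean prior with a squared-exponential (RBF) kernel, uses a small hand-written linear solver to stay dependency-free, and fits hypothetical samples of a sine curve that has a gap between x = 2 and x = 5.

```python
import math

def rbf(a, b, length=1.0, signal_var=1.0):
    """Squared-exponential kernel between two scalar inputs."""
    return signal_var * math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x

def gp_predict(xs, ys, x_star, noise_var=1e-2):
    """Posterior mean and variance of a zero-mean GP at x_star."""
    k = [[rbf(a, b) + (noise_var if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    k_star = [rbf(x_star, a) for a in xs]
    alpha = solve(k, ys)       # K^-1 y
    v = solve(k, k_star)       # K^-1 k_star
    mean = sum(ks * al for ks, al in zip(k_star, alpha))
    var = rbf(x_star, x_star) - sum(ks * vi for ks, vi in zip(k_star, v))
    return mean, var

xs = [0.0, 1.0, 2.0, 5.0, 6.0]          # note the gap between 2 and 5
ys = [math.sin(x) for x in xs]

mean_near, var_near = gp_predict(xs, ys, 1.5)  # surrounded by data
mean_gap, var_gap = gp_predict(xs, ys, 3.5)    # inside the gap
print(f"near data: var={var_near:.3f}, in gap: var={var_gap:.3f}")
```

The predicted variance inside the gap is far larger than the variance between nearby samples, which is the signal a fusion system can use to trust such an estimate less.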

The Role of High-Quality Annotation in Uncertainty-Aware Models

High-quality annotations are necessary for uncertainty-aware models to learn the right patterns and probabilities. These models provide predictions and confidence levels. However, if annotations are incorrect, the model may misinterpret label noise as inherent uncertainty.

As a result, the model can make predictions that are too confident or not confident enough. Precise and consistent annotations reduce the risk of false correlations, allowing the model to focus on the authentic sources of uncertainty.

High-quality annotations allow models to make reliable predictions in cases like sensor fusion, object detection, or anomaly detection.

Integrating Bayesian Methods with Deep Learning

Bayesian methods are useful for modeling uncertainty, but may have trouble with large and complex data sets. Deep learning is particularly good at finding patterns in complex data. Combining these approaches helps design systems that are accurate and can also flag when their own predictions are likely to be wrong.

Bayesian Deep Learning

Bayesian techniques enhance deep learning models by incorporating uncertainty estimates into their predictions. Two common methods used in Bayesian deep learning include:

- Monte Carlo Dropout: This method applies dropout during inference and runs the model multiple times to estimate prediction uncertainty. It is simple to implement and does not require significant modifications to existing pre-trained deep networks.

- Bayesian Neural Networks: These networks assign probability distributions to their weights instead of fixed values. This approach allows the model to explicitly represent uncertainty about its own parameters, making it more reliable for critical applications.
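
Monte Carlo Dropout can be sketched without a deep learning framework. The tiny network below uses invented weights standing in for a pre-trained model. Dropout stays switched on at inference, the same input is passed through many times, and the spread of the outputs serves as the uncertainty estimate. All names and values are assumptions for illustration.

```python
import random
import statistics

# Hypothetical "pre-trained" weights: 1 input, 4 hidden ReLU units, 1 output.
W1 = [0.8, -0.5, 1.2, 0.3]
B1 = [0.1, 0.4, -0.2, 0.0]
W2 = [0.6, -0.9, 0.5, 1.1]

def forward(x, rng, drop_prob=0.5):
    """One stochastic forward pass with dropout applied to the hidden layer."""
    out = 0.0
    for w1, b1, w2 in zip(W1, B1, W2):
        hidden = max(0.0, w1 * x + b1)   # ReLU activation
        if rng.random() >= drop_prob:    # keep this unit for this pass
            out += w2 * hidden / (1.0 - drop_prob)  # inverted-dropout scaling
    return out

def mc_dropout_predict(x, passes=200, seed=0):
    """Mean and standard deviation of the output over many dropout passes."""
    rng = random.Random(seed)
    samples = [forward(x, rng) for _ in range(passes)]
    return statistics.fmean(samples), statistics.stdev(samples)

mean, std = mc_dropout_predict(1.0)
print(f"prediction: {mean:.2f} +/- {std:.2f}")
```

A downstream filter could, for example, turn a large spread into a larger measurement noise value for that detection.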

Hybrid Models

Hybrid models use both deep learning models and Bayesian filters. For example, CNNs analyze raw data from sensors, while Bayesian filters such as Kalman or particle filters refine the predictions and track their uncertainty in dynamic environments.

With this integration, systems can handle tasks such as tracking objects, combining different types of sensors, and making decisions when uncertain.

Real-World Applications and Case Studies

To understand its impact, it is important to review the use of Bayesian sensor fusion in industries such as autonomous driving, robotics, and UAVs. The following case studies show how Bayesian methods are used in practice.

Autonomous Vehicle Perception

A leading autonomous vehicle company partnered with iMerit to enhance its 3D perception system through detailed annotation of LiDAR data in both 2D images and 3D point clouds. The project involved labeling key features such as lane markings, road edges, traffic lights, poles, and barriers using a structured human-in-the-loop process.

The iMerit team underwent comprehensive training covering basic road rules, annotation tools, mapping, semantic segmentation, LiDAR annotation, and simulation. Solution architects curated diverse datasets representing various road types, weather conditions, and lighting scenarios to ensure data quality and richness.

The outcome was a high-quality annotated dataset that significantly contributed to the client’s 3D perception system, improving object detection and environmental mapping to support safer and more reliable autonomous driving.

Multi-Modal Sensor Fusion and Object Tracking in Autonomous Racing

Researchers developed a multi-modal sensor fusion and tracking method for high-speed autonomous racing. The system uses an Extended Kalman Filter (EKF) to combine different detection inputs and keep track of nearby objects.

A novel delay compensation approach reduces the influence of perception software latency, so the system can output an up-to-date object list. This approach was deployed in events like the Indy Autonomous Challenge 2021 and the Autonomous Challenge at CES 2022.

The fusion and tracking approach resulted in residuals of less than 0.1 meters, showing its reliability and efficiency on embedded systems.

Multi-Sensor Data Fusion for Autonomous Flight of UAVs

A research project was conducted to integrate data from multiple sensors for unmanned aerial vehicles (UAVs) operating in challenging environments. The approach combined information from various sensors to enable UAVs to avoid obstacles and fly autonomously.

The system fused data from global positioning systems (GPS), inertial measurement units (IMUs), and 3D light detection and ranging (LiDAR) using a weighted average method.

Linear Kalman filtering was applied to the combined velocity data to support smooth navigation and obstacle avoidance in difficult flight conditions.

Best Practices for Reliable Multi-Sensor Fusion

Bayesian models are good at addressing uncertainty, but a multi-sensor system still has to be designed carefully to work reliably and safely. Here are some critical steps to ensure your multi-sensor fusion is reliable.

- Calibrate and Synchronize Sensors: For accurate spatial perception, all sensors need to be calibrated into the same coordinate frame and time-synchronized. When time and position are in sync, measurements from several sensors can be combined without introducing alignment errors.

- Model Sensor Uncertainties: Check whether each sensor’s noise is approximately Gaussian, handle outliers, and set the covariance values in your filters from measured data. This ensures the model represents how sensors behave in real-life situations.

- Use High-Quality Training and Validation Data: Check that training data is labeled correctly, all sensors use the same labels, and objects are accurately located in 3D space. This is important for creating reliable models.

- Select the Right Fusion Algorithm: Choose an algorithm that matches the type of sensors and data you are using. Kalman filters are good for basic, linear situations, and particle filters are useful for more complex, non-linear data. If you are processing large and complex data, try using deep learning models.

- Plan for Edge Cases and Redundancy: Edge cases and failures in sensors should be part of the testing process for fusion systems. Using more than one sensor for the same task helps catch any errors.

- Continuous Monitoring and Update: A smart approach is to regularly update your models and priors with fresh data. Bayesian methods make it easy to add new information. When you retrain the model, you can use the old posteriors as the new priors.
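
The idea of reusing an old posterior as the new prior can be sketched with a Beta-Bernoulli model of a sensor's detection rate. The scenario and counts are hypothetical: each week a validation run records how often the sensor detected a known target. Updating week by week gives exactly the same belief as processing all the data at once.

```python
def update_beta(alpha, beta, hits, misses):
    """Bayesian update of a Beta(alpha, beta) belief about a detection rate."""
    return alpha + hits, beta + misses

prior = (1.0, 1.0)  # uniform prior: no opinion about the detection rate yet

# Week 1: the posterior after this batch becomes the prior for week 2.
after_week1 = update_beta(*prior, hits=45, misses=5)
after_week2 = update_beta(*after_week1, hits=38, misses=12)

# Processing both weeks in a single batch gives the same result.
batch = update_beta(*prior, hits=83, misses=17)

detection_rate = after_week2[0] / sum(after_week2)  # posterior mean
print(after_week2, batch, round(detection_rate, 3))
```

This is why a Bayesian system does not need to store all of its history: the current posterior already summarizes it.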

Conclusion

Real-world systems are always uncertain because of noisy sensors, missing data, or environmental changes. Bayesian approaches allow you to model that uncertainty, combine prior knowledge with sensor information, and produce predictions that come with a measure of confidence.

Key Takeaways

- Multi-sensor systems always have uncertainty because no sensor gives 100% accurate information.

- Aleatoric uncertainty comes from inherent randomness in measurements, while epistemic uncertainty is caused by not knowing enough.

- Sensor noise and bias, temporal and spatial misalignment, and data association errors cause uncertainty in sensor fusion.

- Bayesian methods update beliefs by combining what is already known with what is learned from new data.

Reliable, uncertainty-aware models are built on high-quality and accurate annotations. With iMerit’s Multi-Sensor Data Labeling Tool, you can easily integrate data from LiDAR, radar, and camera sensors to get accurate annotations for both 2D and 3D modalities.

It supports merged point clouds, 2D/3D drawables, and built-in validation tools to ensure consistency. With accurate and efficient data labeling, you can create AI models capable of Bayesian fusion, which makes them suitable for various applications.