When human annotators disagree, a critical question follows: how can we trust an AI model trained on their labels? This highlights a major challenge in AI development. AI systems depend on human-labeled data to learn and improve, so when the labels are inconsistent, the data becomes unreliable, and so do the benchmarks we use to judge model performance.

Benchmarks play a crucial role in fields such as natural language processing (NLP), computer vision, and speech recognition. They serve as the standard for comparing model quality.

Inter-rater consistency (IRC) measures how much human annotators agree on a task. When IRC is high, we have good reason to trust the dataset. When it’s low, even a well-performing model can appear to fail. This directly affects the reliability of AI benchmarks: if the benchmark is flawed, we can’t accurately assess how well a model performs.

Let’s explore how IRC affects the reliability of benchmarks and how it shapes the way we evaluate models.

The Foundation: Understanding Benchmarks and Human Annotation

AI and machine learning benchmarks are standard tests used to measure how well a model performs. They help researchers and developers compare different models using the same tasks and data. This makes it easier to track progress and understand what’s working.

For example:

- In image classification, a benchmark might test how well a model can identify objects in photos.

- In NLP, a benchmark could check if a model understands text or answers questions correctly.

- In speech recognition, benchmarks test how well a model turns speech into text.

These benchmarks use labeled data as a reference, and that is where human annotation comes in.

Many AI tasks do not have clear, automatic answers. For tasks like emotion detection, sentiment analysis, or summarizing text, we rely on human judgment. Humans review data and provide what we call the ground truth, the answer that models will learn from or be compared against.

There are different types of annotation tasks:

- Labeling objects in images or words in text

- Rating quality, tone, or correctness

- Transcribing audio into written words

- Segmenting parts of images, text, or video

But human annotation is not always easy. People may see things differently, especially in complex or subjective tasks. Some tasks can be tiring or unclear, which affects quality. This can lead to disagreement, and that’s why inter-rater consistency is so important.

Inter-Rater Consistency (IRC) Explained

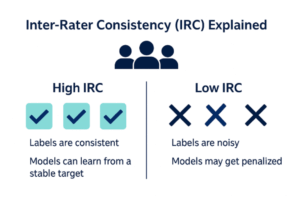

Inter-Rater Consistency (IRC) measures the degree of agreement among different human annotators when labeling or judging the same data. It tells us how reliable the labels are. If annotators give the same answer, the task is likely well defined and the labels are dependable. If they give different answers, the data becomes less trustworthy.

IRC is also known as inter-rater reliability (IRR), annotator agreement, or intercoder reliability. These terms are common in data labeling and social science research. IRC matters because human labels are often used as the “ground truth” in AI benchmarks. When those labels diverge, models are trained and scored against a noisy, shifting target.

- If there is high IRC, the labels are consistent, and models can learn from a stable target.

- If there is low IRC, the labels are noisy. Models may get penalized even when their answers are reasonable.

This can lead to misleading conclusions. A model might seem to perform poorly, not because it’s wrong, but because the benchmark itself is unclear. That’s why good benchmarks must start with high inter-rater consistency.

For example, in a sarcasm detection experiment using Twitter data, researchers investigated the impact of context on annotation. Annotators labeled tweets for sarcasm under varying levels of contextual detail, and agreement was consistently low. Krippendorff’s alpha scores were often below 0.35, and in some cases even negative, indicating less-than-chance agreement among certain annotator pairs.

The findings suggest that sarcasm identification is highly subjective and difficult, and low IRC can severely constrain the reliability and utility of such NLP benchmarks.
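To see how an alpha score can fall below zero, here is a minimal pure-Python sketch of Krippendorff’s alpha for nominal (categorical) labels, built on the standard coincidence-matrix formulation. The function name and the toy ratings are hypothetical illustrations, not data from the sarcasm study; `None` marks a missing rating.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    `units` is a list of items; each item lists the labels given by the
    annotators who rated it, with None marking a missing rating.
    """
    # Coincidence counts o_ck: every ordered pair of labels from two
    # different annotators on the same item, weighted by 1 / (m_u - 1).
    coincidence = Counter()
    for ratings in units:
        values = [r for r in ratings if r is not None]
        m = len(values)
        if m < 2:
            continue  # an item rated by fewer than two people cannot be paired
        for c, k in permutations(values, 2):
            coincidence[(c, k)] += 1.0 / (m - 1)

    # Marginal totals n_c and the grand total n.
    totals = Counter()
    for (c, _), weight in coincidence.items():
        totals[c] += weight
    n = sum(totals.values())

    # Nominal distance: any two different labels count as full disagreement.
    observed = sum(w for (c, k), w in coincidence.items() if c != k)
    expected = sum(totals[c] * totals[k] for c in totals for k in totals if c != k) / (n - 1)
    if expected == 0:
        raise ValueError("alpha is undefined when only one label is ever used")
    return 1.0 - observed / expected


# Two annotators who disagree on every tweet (S = sarcastic, N = not).
disagree = [["S", "N"], ["N", "S"], ["S", "N"], ["N", "S"]]
print(round(krippendorff_alpha_nominal(disagree), 3))  # -0.75

# Three annotators with one missing rating and one split item.
mixed = [["S", "S", "S"], ["N", "N", "N"], ["S", "N", "S"], ["N", "N", None]]
print(round(krippendorff_alpha_nominal(mixed), 3))  # 0.667
```

A negative value means the annotators disagree more often than their overall label frequencies would predict by chance.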

Common Ways to Measure IRC

There are several metrics used to measure agreement between annotators. Here are a few common ones:

- Cohen’s Kappa: Cohen’s Kappa measures agreement between two annotators on categorical labels. It adjusts for the level of agreement that could happen by chance, making it more reliable than simple percentage agreement.

- Fleiss’ Kappa: Fleiss’ Kappa extends chance-corrected agreement to more than two annotators. It is often described as an extension of Cohen’s Kappa, though formally it generalizes Scott’s pi. It evaluates how consistently multiple people label the same set of items.

- Krippendorff’s Alpha: Krippendorff’s Alpha is a flexible metric that works with different types of data, including nominal, ordinal, interval, and ratio. It can also handle missing values, making it useful for complex annotation projects.

- Percentage Agreement: Percentage agreement is the simplest measure. It shows the proportion of times annotators gave the same answer. However, it does not account for agreement that could occur by chance.

- Correlation Coefficients (e.g., Pearson, Spearman): These are used when annotators give numerical ratings. Correlation coefficients show how closely the ratings follow the same pattern, rather than exact matches.
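The gap between percentage agreement and a chance-corrected metric is easiest to see on imbalanced labels. The sketch below implements both for two annotators in plain Python; the function names and the toy sentiment labels are hypothetical. In the example, the annotators agree on 90% of items, yet Cohen’s Kappa is slightly negative, because two annotators who each say “neg” 95% of the time would agree that often by chance alone.

```python
from collections import Counter


def percent_agreement(a, b):
    """Share of items on which two annotators chose the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    p_o = percent_agreement(a, b)
    n = len(a)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: the probability that both annotators pick the same
    # label if each labels independently at their own observed rates.
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# 100 items: both annotators say "neg" on 90, and they never agree on "pos".
annotator_1 = ["neg"] * 90 + ["pos"] * 5 + ["neg"] * 5
annotator_2 = ["neg"] * 90 + ["neg"] * 5 + ["pos"] * 5

print(percent_agreement(annotator_1, annotator_2))       # 0.9
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # -0.053
```

With a balanced label distribution the two numbers sit much closer together, which is why chance correction matters most for rare classes. For production use, scikit-learn’s `cohen_kappa_score` computes the same statistic.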

The Impact of Low Inter-Rater Consistency on Benchmark Reliability

Low inter-rater consistency (IRC) creates problems across the entire AI pipeline, from training to evaluation. When human annotators disagree, the quality of the dataset suffers. This affects the ground truth, the model, and the benchmark itself.

1. Direct Impact on Ground Truth Quality

Low IRC adds noise and confusion to the dataset. When human labels are inconsistent, it’s hard to know what the model should learn. The model might be trained on labels that don’t reflect a clear or agreed-upon answer. This weakens the ground truth and makes it harder to trust the results.

Even when a model makes a reasonable prediction, it may be marked wrong simply because it does not match a confusing or inconsistent label.
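To see how a noisy gold standard caps measured performance, the hypothetical sketch below scores a model that always predicts the true label against gold labels in which a fraction of items has been mislabeled at random. Uniform random flips are a simplifying assumption; real disagreement tends to cluster on ambiguous items.

```python
import random


def noisy_gold(true_labels, labels, flip_rate, rng):
    """Copy the true labels, replacing each with a different label at `flip_rate`."""
    gold = []
    for y in true_labels:
        if rng.random() < flip_rate:
            gold.append(rng.choice([other for other in labels if other != y]))
        else:
            gold.append(y)
    return gold


def accuracy(predictions, gold):
    """Fraction of predictions that match the gold labels."""
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


rng = random.Random(42)
labels = ["positive", "negative", "neutral"]
truth = [rng.choice(labels) for _ in range(10_000)]
perfect_model = list(truth)  # a model that is always right about the true label

for flip_rate in (0.0, 0.1, 0.3):
    gold = noisy_gold(truth, labels, flip_rate, rng)
    # The measured score of a perfect model drops to roughly 1 - flip_rate.
    print(flip_rate, round(accuracy(perfect_model, gold), 3))
```

Every point the “perfect” model loses here is an item where the gold label, not the model, is wrong.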

2. Implications for Model Development

With low IRC, performance metrics like accuracy, F1, or BLEU scores are compromised:

- Model Misjudgment: A high-performing model might be penalized for diverging from noisy annotations, even when its outputs are closer to human intuition.

- Overfitting to Noise: In some cases, models learn to mimic labeling artifacts instead of task-relevant features.

- Unstable Leaderboard Rankings: Benchmark competitions often suffer from IRC noise, which can cause inconsistent rankings and create false performance gaps between models.
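The leaderboard effect can be simulated as well. In the hypothetical sketch below, two fixed models with nearly identical true accuracy are scored against many independent re-annotations of the same binary-labeled test set, and we count how often model A ranks above model B. All parameters (item count, accuracies, flip rate) are illustrative assumptions.

```python
import random


def rank_stability(n_items=500, acc_a=0.86, acc_b=0.85, flip_rate=0.2,
                   n_relabels=200, seed=0):
    """Fraction of simulated re-annotations in which model A outscores model B."""
    rng = random.Random(seed)
    truth = [rng.randint(0, 1) for _ in range(n_items)]
    # Fixed predictions: each model is right on about acc_* of the items.
    pred_a = [y if rng.random() < acc_a else 1 - y for y in truth]
    pred_b = [y if rng.random() < acc_b else 1 - y for y in truth]

    a_wins = 0
    for _ in range(n_relabels):
        # Each pass stands in for a fresh annotation of the same items,
        # with every gold label flipped independently at `flip_rate`.
        gold = [1 - y if rng.random() < flip_rate else y for y in truth]
        score_a = sum(p == g for p, g in zip(pred_a, gold))
        score_b = sum(p == g for p, g in zip(pred_b, gold))
        a_wins += score_a > score_b  # ties count as a win for B
    return a_wins / n_relabels


for flip_rate in (0.0, 0.2, 0.4):
    print(flip_rate, rank_stability(flip_rate=flip_rate))
```

With clean labels the ranking is fixed (the result is 0.0 or 1.0). As the flip rate rises, the result typically drifts toward 0.5, meaning the “winner” depends on which annotation pass was used.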

Furthermore, error analysis becomes ambiguous when annotators disagree with each other as well as with the model. This makes it difficult to pinpoint the source of failure: the data, the model, or the task definition itself. Such uncertainty slows iterative improvement and hampers model debugging.

3. Dataset Integrity and Reproducibility

Benchmarks with low IRC are less stable. Different teams might get different results using the same dataset, which reduces trust in the benchmark and in the models tested on it. The consequences include:

- Low cross-benchmark transferability: A model trained on one version of a dataset may not perform similarly on another, even if the task is identical.

- Poor reproducibility: Different research groups using the same dataset but with different sampling or aggregation strategies may report inconsistent findings.

- Diminished longitudinal value: Benchmarks with low IRC degrade faster over time as expectations shift or annotator baselines evolve.

This is particularly harmful in high-stakes domains like medical diagnosis, legal classification, or safety-critical applications, where reproducibility and label confidence are non-negotiable.
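Aggregation choices alone can move scores. The hypothetical sketch below takes one set of raw three-annotator labels (1 = flagged as toxic, 0 = not) and builds two gold standards from it: a majority vote and a stricter “positive if any annotator flags it” rule. The same model predictions then earn very different accuracies. The labels and both rules are illustrative assumptions.

```python
from collections import Counter


def majority_vote(ratings):
    """Most common label; ties go to the label encountered first."""
    return Counter(ratings).most_common(1)[0][0]


def any_flag(ratings):
    """Stricter rule: the item is positive if any annotator marked it positive."""
    return 1 if 1 in ratings else 0


def accuracy(predictions, gold):
    """Fraction of predictions that match the gold labels."""
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


# Rows are items; columns are three annotators (1 = toxic, 0 = not toxic).
raw = [[1, 1, 0], [0, 0, 1], [1, 0, 0], [0, 0, 0], [1, 1, 1], [0, 1, 0]]
model_predictions = [1, 0, 0, 0, 1, 0]

gold_majority = [majority_vote(r) for r in raw]
gold_any = [any_flag(r) for r in raw]

print(accuracy(model_predictions, gold_majority))  # 1.0
print(accuracy(model_predictions, gold_any))       # 0.5
```

Reporting which aggregation rule was used, alongside the agreement scores, is what allows another team to reproduce the number.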

When Models “Outperform” Humans

Sometimes, an AI model seems to perform better than human annotators. On paper, the model may show higher accuracy or match the benchmark more closely. But this can be misleading.

If the benchmark was built on low inter-rater consistency, the model may only be learning to match one version of the label, not the full range of human judgment. In these cases, the model is not truly smarter. It is just better at copying a narrow or noisy signal.

This becomes a problem in tasks that involve subjectivity, like emotion detection, content moderation, or summarization. If people do not fully agree on what is correct, a model trained on that data may reflect those same differences or even amplify them.

So when we say a model is “better” than humans, we need to ask: better compared to what? If the benchmark itself is flawed, the results cannot be trusted. That is why improving inter-rater consistency is key before claiming that a model has reached or surpassed human-level performance.

For example, OpenAI has reported strong results for its models on high-profile coding evaluations such as Codeforces and SWE-bench. Yet the grading behind such evaluations is not always clean. SWE-bench Verified, a human-validated subset of SWE-bench released by OpenAI, was created because some tasks in the original dataset had underspecified problem descriptions or unit tests that could reject valid solutions.

By building a human-verified subset, OpenAI acknowledged that benchmark reliability depends on careful human review. Even with strong model performance, gold standards for coding tasks can still suffer from ambiguity and annotation inconsistencies. This complicates direct model-versus-human comparisons and weakens claims of superiority based on benchmark scores alone.
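The “better compared to what?” question can be made concrete with a toy calculation. In the hypothetical sketch below, gold labels are the majority vote of five annotators, and a model that has simply learned to reproduce that majority is compared with the average individual annotator. The ratings are invented for illustration.

```python
from collections import Counter


def majority_vote(ratings):
    """Most common label; ties go to the label encountered first."""
    return Counter(ratings).most_common(1)[0][0]


def agreement(labels, gold):
    """Fraction of labels that match the gold standard."""
    return sum(x == g for x, g in zip(labels, gold)) / len(gold)


# Rows are items; columns are five annotators (binary labels).
ratings = [
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [1, 0, 1, 1, 0],
    [0, 0, 0, 0, 1],
]
gold = [majority_vote(r) for r in ratings]

# Each annotator's agreement with the majority gold standard.
per_annotator = [agreement([r[i] for r in ratings], gold) for i in range(5)]
human_baseline = sum(per_annotator) / len(per_annotator)

# A model that reproduces the majority label exactly.
model_score = agreement(gold, gold)

# Items decided by a 3-2 split, where two of five annotators disagree with gold.
contested = sum(1 for r in ratings if max(Counter(r).values()) == 3)

print(round(human_baseline, 3), model_score, contested)  # 0.767 1.0 3
```

The model “beats” the average annotator (1.0 versus about 0.77) only because gold is defined as the majority, and on three of the six items two of five people would call its answer wrong. Leave-one-out baselines, which score each annotator against the majority of the others, are one common way to make the human comparison fairer.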

iMerit improves IRC by using trained annotators, clear instructions, and multi-step quality checks. Its teams are experienced in managing complex tasks and reducing disagreement. This helps AI teams work with cleaner data, stronger benchmarks, and more dependable model evaluations.

Improving Annotator Agreement: Strategies and Contributing Factors

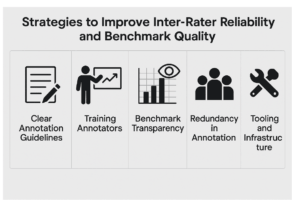

Improving inter-rater consistency makes datasets more dependable and useful. When annotators agree more often, models learn from a cleaner signal, and evaluation becomes more trustworthy. Here are some ways to improve annotator agreement metrics, along with the factors that directly affect IRC:

- Clear Annotation Guidelines: Ambiguity in task instructions often leads to disagreement. One of the best ways to boost IRC is to provide clear instructions. Annotators need to understand the task, what to look for, and how to make decisions. Good examples and edge cases can help reduce confusion and lead to better annotator agreement metrics.

- Training Annotators: Lack of experience can result in varied interpretations by the annotators. Regular training can help annotators understand the task better. It’s even more important in domains that need expert knowledge, like medical or legal data. Ongoing support and feedback also help maintain high quality.

- Benchmark Transparency: Benchmarks should always report inter-rater consistency. This shows how reliable the evaluation is. If IRC is low, users know the results may not reflect true model performance.

- Redundancy in Annotation: Assign multiple annotators per item, for instance three to five people labeling the same piece of data. If they disagree, a senior annotator or an expert panel can step in to make the final decision. iMerit, for example, uses a layered QA process with human-in-the-loop checks and expert review to ensure high accuracy and consistency.

- Tooling and Infrastructure: Having the right tools and infrastructure makes a big difference in improving AI benchmarking quality. Annotation platforms should be easy to use and have built-in ways to check quality. iMerit uses advanced platforms and even creates custom tools to make the annotation process smooth and reliable.

Platforms like iMerit’s Ango Hub offer workflow automation, quality checks, and role-based task routing to streamline labeling and reduce errors. Features like real-time feedback, audit trails, and adjudication support help teams stay aligned and consistent. This is particularly useful for challenging tasks like multi-modal data labeling or spotting rare edge cases.
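The redundancy and transparency practices above can be sketched in a few lines. The hypothetical example below accepts a majority label only when a minimum share of annotators agree, routes the remaining items to adjudication, and computes Fleiss’ Kappa so the pool’s overall agreement can be reported with the benchmark. The `min_share` threshold and the sentiment labels are illustrative assumptions, not a description of any particular vendor’s QA workflow.

```python
from collections import Counter


def fleiss_kappa(items):
    """Fleiss' kappa; every item must carry the same number of ratings."""
    n = len(items[0])
    if n < 2 or any(len(r) != n for r in items):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    N = len(items)
    counts = [Counter(r) for r in items]
    # Mean per-item agreement: the share of annotator pairs that agree.
    p_bar = sum(
        (sum(c * c for c in cnt.values()) - n) / (n * (n - 1)) for cnt in counts
    ) / N
    # Chance agreement from the overall label proportions.
    totals = Counter()
    for cnt in counts:
        totals.update(cnt)
    p_e = sum((t / (N * n)) ** 2 for t in totals.values())
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating uses one label")
    return (p_bar - p_e) / (1 - p_e)


def route_items(items, min_share=0.6):
    """Accept well-supported majority labels; flag the rest for adjudication."""
    accepted, needs_review = {}, []
    for idx, ratings in enumerate(items):
        label, votes = Counter(ratings).most_common(1)[0]
        if votes / len(ratings) >= min_share:
            accepted[idx] = label
        else:
            needs_review.append(idx)
    return accepted, needs_review


# Rows are items; columns are three annotators.
items = [
    ["pos", "pos", "pos"],
    ["neg", "neg", "pos"],
    ["neu", "pos", "neg"],
    ["neg", "neg", "neg"],
]
accepted, needs_review = route_items(items)
print(accepted)                       # {0: 'pos', 1: 'neg', 3: 'neg'}
print(needs_review)                   # [2]
print(round(fleiss_kappa(items), 3))  # 0.268
```

Items in `needs_review` would go to a senior annotator or expert panel; raising `min_share` trades more adjudication work for cleaner accepted labels.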

Conclusion

Inter-rater consistency is essential for fair and reliable AI model evaluation. Without it, benchmarks become noisy, misleading, and unreliable.

Here are the key takeaways:

- IRC shapes ground truth quality and impacts both model training and evaluation accuracy.

- Low IRC weakens benchmarks and leads to unreliable, inconsistent performance scores.

- Clear guidelines, proper annotator training, redundancy, and transparent reporting help improve IRC.

- Modern tools and human-in-the-loop systems like iMerit’s multi-layered QA boost label consistency.

- Subjective and complex tasks are more prone to inconsistency, so careful workflow design is crucial.

As AI systems are deployed in more sensitive and high-impact areas, the industry must invest in stronger inter-rater consistency practices. Future developments like AI-assisted annotation, more robust agreement metrics, and meta-benchmarking analyses could further strengthen benchmark integrity.

If you need high-quality data labeling with a focus on consistency and accuracy, iMerit provides scalable annotation solutions. Human-in-the-loop workflows and AI-powered tools ensure your datasets are reliable and ready for real-world use.