Audio transcription and AI speech-to-text are practically bursting with new use cases and applications. With the rise of artificial intelligence (AI), new possibilities for speech-to-text conversion are emerging daily. Software algorithms trained using advanced machine learning (ML) and natural language processing techniques bring us ever closer to a world where instead of humans performing transcription, fully-digital transcribers will conduct the task.

However, AI is struggling to compete with humans when it comes to accuracy. While much of the industry is focused on full-scale automation, the human component of most speech-to-text use cases will remain mandatory for the foreseeable future to ensure adequate performance outputs.

In this post, we will outline the current state of speech-to-text AI and assess the future trajectory of machine learning and natural language processing in this exciting field.

What is AI Speech-to-Text?

AI speech-to-text is a field in computer science that specializes in enabling computers to recognize and transcribe spoken language into text. It is also called speech recognition, computer speech recognition, or automatic speech recognition (ASR).

Speech-to-text is different from voice recognition as the software is trained to understand and recognize the words being spoken. In contrast, voice recognition software focuses on identifying the voice patterns of individuals.

Speech Recognition – How Does it Work?

Speech recognition requires a combination of specially trained algorithms, computer processors, and audio capture hardware (microphones) to work. The algorithms parse the continuous, complex acoustic signal into discrete linguistic units called phonemes.

A phoneme is the smallest distinct unit of sound that human language can be broken down into. Phonemes are the minimal units of sound that speakers of a language perceive as different enough to create meaningful differences between words; for example, English speakers recognize that “though” and “go” are two different words, because their first consonant sound is different, even though their vowel sounds are the same. A language may have more–or fewer–phonemes than it has letters or graphemes. For example, even though English has only 26 letters, some dialects contain 44 different phonemes.

To make things even more complex, a given phoneme’s acoustic properties differ depending on the speaker and the context the sound is in. For example, the “l” sound at the end of the word “ball” is acoustically closer to the vowel sound “o” than it is to the “l” sound at the beginning of the word “loud”, in many dialects of English. The algorithms mapping acoustic signals to phonemes need to take context into consideration.

The AI speech-to-text workflow consists of the following key steps:

- The sounds coming from a person’s mouth are captured by the mic. The sounds are converted from analog signals to digital files.

- The software then analyzes the audio files bit-by-bit, down to the hundredth/thousandths of seconds, searching for known phonemes.

- The identified phonemes are then run through a database of common words, phrases, and sentences.

- The software uses complex mathematical models to zero in on the most likely words/phrases that match the audio to create the final text output.

A Quick History of Speech Recognition

The first-ever speech recognition system was built in 1952 by Bell Laboratories. Called “Audrey,” it could recognize the sound of a spoken digit – zero to nine – with more than 90% accuracy when uttered by its developer HK David.

In 1962, IBM created the “Shoebox,” a machine capable of recognizing 16 spoken English words. In the same decade, the Soviets created an algorithm capable of recognizing over 200 words. All these were based on pre-recorded speech.

The next big breakthrough came in the 1970s, as part of a US Department of Defense-funded program at Carnegie Mellon University. The device they developed, called the “Harpy,” could recognize entire sentences with a vocabulary of 1000 words.

By the 1980s, the vocabulary of speech recognition software had increased to 20,000. IBM created a voice-activated typewriter called Tangora, which used a statistical prediction model for word identification.

The first consumer-grade speech-to-text product was launched in 1990 – the Dragon Dictate. A successor, launched in 1997 called the Dragon Naturally Speaking, is still in use on many desktops to this date.

Speech-to-text technology has improved in leaps and bounds since then, especially after the evolution of high-speed internet and cloud computing. Google is a market leader, with its voice search and speech-to-text product.

Current Uses of Speech-to-Text

In the past, speech-to-text was generally a specialized service. Businesses and government agencies/courts were the main users, for data recording purposes. Professionals like doctors also found the service quite useful.

These days, anyone with a smartphone and internet connection has access to some form of speech-to-text software. The need for its features has also exploded across enterprise and consumer markets. We can broadly divide the major demand for AI speech-to-text into the following sources:

- Customer Service

- Many enterprises rely on chatbots or AI assistants in customer service, at least as a first layer to reduce costs and improve customer experience. With many users preferring voice chat, efficient and accurate speech-to-text software can drastically improve the online customer service experience.

- For starters, AI chatbots with advanced speech recognition capabilities can reduce the load on the executives at call centers. Acting as the first line of service, they can identify the intent/need of the speaker and redirect them to the appropriate service or resource.

- Content Search

- Again, the explosion in mobile usage is fueling an increased demand for AI speech recognition algorithms. The number of potential users has increased drastically, thanks to public access to speech-to-text services available free on both iOS and Android platforms.

- There is a dizzying array of diversity among humans in voice quality, speech patterns, accents, dialects, and other personal quirks. A competent speech-to-text AI needs to be able to recognize words and whole sentences with reasonable accuracy to provide satisfactory results.

- Enterprises with smarter speech recognition tools will be able to stand out among the crowd. Modern users are notoriously demanding, with a very low tolerance of delays and substandard service. Digital marketing has emerged as a major driver for the evolution of AI speech-to-text, particularly on mobile devices.

- Electronic Documentation

- There are many services and fields where live transcription is vital for documentation purposes. Doctors need it for faster, more efficient management of patient medical records and diagnosis notes.

- Court systems and government agencies can use the technology to reduce costs and improve efficiency in record keeping. Businesses can also use it during important meetings and conferences for the keeping of minutes and other special needs.

- The 2020 COVID-19 pandemic also brought to light a new use case for speech-to-text. Due to the sheer number of remote meetings and video conferences, seamless speech-to-text functionality allows companies to extract intelligence, summarize meetings, and derive analytics by recording conversations.

- Content Consumption

- Global accessibility to content is a huge proponent of speech-to-text adoption. With online streaming replacing traditional forms of entertainment, there is an ever-increasing demand for digital subtitles. Real-time captioning has a massive market, as content is streamed across the globe to viewers from different linguistic backgrounds.

- There is a huge potential for the use of AI speech-to-text in live entertainment like sports streaming as well. Commentary with instant captions would prove to be a game-changer, improving accessibility and overall user engagement.

Role of AI/ML/NLP in Speech Recognition

Three buzzwords are closely associated with modern speech recognition technologies – artificial intelligence (AI), machine learning (ML), and natural language processing (NLP). These terms are often used interchangeably, but are in fact very distinct from one another.

- Artificial intelligence (AI) is the vast field in computer science dedicated to developing “smarter” software that can solve problems similar to how a human would. One of the main roles intended for AI is primarily to assist humans, especially in repetitive tasks. Computers with speech-to-text software do not get tired and can work a lot faster than humans.

- Machine learning is often used interchangeably with AI, which simply isn’t correct. Machine learning is a subfield within AI research that focuses on using statistical modeling and vast amounts of relevant data to teach computers/software to perform complex tasks like transcription and speech-to-text.

- Natural language processing is a branch of computer science and AI that focuses on training computers to understand human speech and text just as we humans do. NLP focuses on helping machines understand text, its meaning, sentiment, and context. The goal is to subsequently interact with humans using this knowledge.

Basic speech-to-text AI converts speech data into text. But when the speech recognition is for advanced tasks like voice-based search, virtual assistants like Apple’s Siri, for instance, NLP is vital for empowering the AI to analyze the data and deliver accurate results that match the user’s needs.

Other Important AI Techniques

Other advanced AI models and techniques are also commonly employed by developers working on speech recognition algorithms. The earliest and most popular of these are the Hidden Markov Models. It is a system for teaching AI to deal with random input data that contains unknown or hidden parameters.

In speech recognition, a major unknown would be the thought process or intention of the speaker – the AI has no way of predicting it. The Markov Models have been used to empower speech recognition AI to deal with this randomness since the early 1970s.These days, you often see the Hidden Markov Model combined with other techniques like N-grams and deep learning neural networks – complex systems that mimic the human brain using multiple layers of AI nodes and are capable of handling heavy workloads.

Challenges to Speech Recognition

Even the best automated speech recognition algorithms cannot achieve a 100% accuracy rate. The current going rate is 95%, first achieved by Google Cloud Speech in 2017. Numerous factors are responsible for creating this 5% error rate in the best speech-to-text AI in the world, including accents, context, input quality, visual cues, low-resource languages, and code-switching.

At the same time, the field is evolving rapidly. Modern ASR systems are integrating large language models (LLMs) to provide context-aware transcription, summarization, and semantic understanding. Multilingual and domain-specific models are now more common, and edge-based transcription is being adopted for privacy-preserving, low-latency applications. These trends help address some of the limitations inherent in traditional ASR systems.

Despite the advances in ASR, several factors still challenge accuracy. Some of the most common ones include:

- Accents and Dialects – even regular humans often have trouble understanding what someone is saying in their shared language, due to local differences in dialects and accents. Programming the AI to detect all these nuances takes time and is very challenging indeed.

- Context – homophones are words that have the same or similar sounds, but different meanings. A simple example is “right” and “write.” AI can often have trouble identifying these homophones in a sentence, without a robust language model and training on these words in relevant contexts.

- Input Quality – background noises can severely affect the ability of an AI to render an accurate text conversion of speech. If the speaker is suffering from a common ailment like the common cold or sore throat, the changes it brings to the speech can often throw off the software.

- Visual Cues – we humans rely not just on voice to send a message, our words are often complemented by expressions and gestures that enhance or sometimes drastically change the meaning of what is being said. AI has no way to decipher these cues, unless it is an advanced image and audio processing algorithm capable of analyzing both data sets in video files.

- Low-Resource Languages – developing ASR for languages that simply have a lower volume of recorded data, be they audio or video files containing sound, or recordings over a very limited domain or speech style.

- Code-Switching/Language-Mixing – in multilingual speech communities, people draw on a repertoire of multiple languages in a single conversation. This creates complexities for the language and acoustic models, as they need to be able to handle lexical and grammatical patterns in switching between languages and a larger overall set of possible patterns.

- Security – Security is another common challenge with speech-to-text software, especially in the enterprise sector. Current AI relies on cloud-based support – the servers and computational resources are often located remotely. Transmitting sensitive business or government data into the cloud poses significant risks. In defense uses, they overcome this by relying on on-site servers.

Speech-to-Text with iMerit

iMerit provides high-quality speech-to-text annotation across accents, dialects, and specialized vocabularies, enabling ASR models to perform reliably in diverse real-world environments. Our workforce includes iMerit Scholars, a team of highly trained subject-matter experts, who ensure accuracy and contextual understanding in complex speech datasets. This strong foundation also supports advanced speech-to-text use cases, where well-labeled speech data is critical for natural and context-aware synthesis.

For organizations comparing platforms, we’ve also covered some of the Top Speech-to-Text Annotation Tools.

Audio Transcriptions and Speech-to-Text AI Development

Machine learning requires vast amounts of processed data. Raw audio cannot be fed into an algorithm without risking poor accuracy. This creates a paradox: to reduce human labor in the future, significant labor is needed today.

To address this, many organizations now combine human-in-the-loop (HITL) workflows with AI transcription. Humans validate, correct, and annotate transcriptions, feeding them back into the system for continuous learning. This approach is especially valuable for specialized domains like healthcare, legal, or technical industries, where precision is critical.

Once audio data is transcribed and validated through HITL workflows, it can be further enriched with detailed annotations to train AI for more advanced understanding. Key annotation tasks include the following:

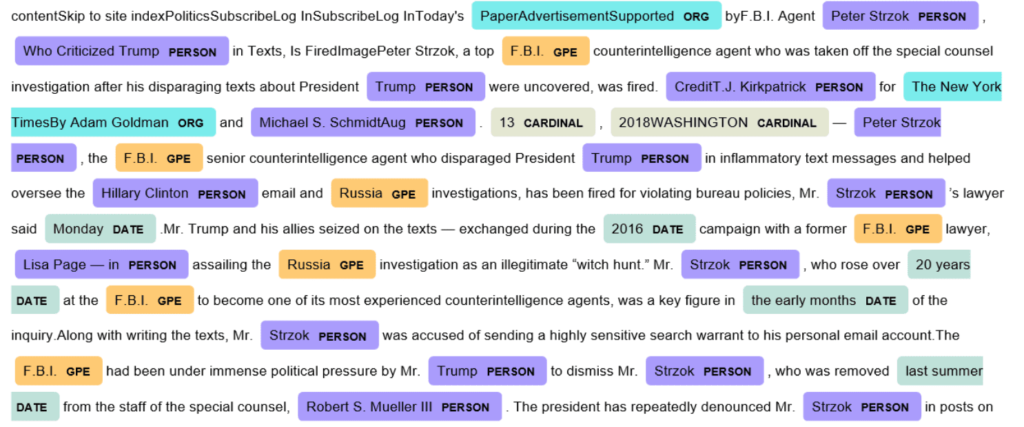

- Named Entity Recognition (NER)

- The transcribed text will often contain some words or phrases that are more important than others. These strings can be grouped into specific categories, like place names, organizations, people, processions, products, etc.

- NER is the process of identifying and classifying these entities into their specific categories. NER is also referred to as entity extraction or identification. A more complex semantic task involves interpreting relationships between entities in a text; for example, organization-employer – person-employee. Once the AI understands the relationships between the entities, it is better equipped to perform higher-level reasoning and execute tasks related to these entities.

- Sentiment & Topic Analysis

- Speech and text will often contain a lot of subjective data – user sentiments, positive or negative thoughts towards a particular product/topic, etc. Sentiment analysis is the process of mining text data to understand and identify such subjective information, often for marketing and customer service.

- Transcribed data with sentiment analysis annotations can be valuable for speech-to-text solutions that involve product marketing, customer feedback, troubleshooting, and other forms of social interactions. The algorithms can be trained to look for relevant patterns of subjective entities.

- Topic analysis further enables algorithms to classify texts, segment long conference calls into bite-sized units, and–powered by massive natural language models like GPT-3–even compose answers to questions and succinct summaries of long documents.

- Intent & Conversation Analysis

- Conversations initiated by speech-to-text users have a definite purpose. If the AI can recognize it instantly, it is in a better position to deliver a satisfactory service. Intent analysis is a vital task that enables this feature.

- Intent detection trains the AI to recognize the intention of the speaker, and in many cases, to interpret the action the user wants the AI to perform. This kind of text processing is essential in the creation of personalized chatbots. An AI chatbot in finance will have a different vocabulary to deal with when compared to one in retail.

The Future of AI Transcriptions

As deep learning neural networks improve, we are gradually approaching smarter “hard AI.” Today, algorithms still lag behind humans in deciphering nuances in speech, especially when specialized jargon, accents, or unique speech styles are involved. You can see this in public examples like YouTube captions, which perform well for native English speakers but struggle in more complex contexts.

At the same time, ASR systems are becoming increasingly context-aware and multimodal, combining audio and visual cues to interpret speech more accurately. Self-learning algorithms allow AI to adapt to new accents, dialects, and domain-specific language without constant human intervention. These innovations suggest that while humans will remain involved for high-precision tasks, AI will increasingly handle real-time transcription more efficiently.

Conclusion

AI speech-to-text is in an exciting phase, with voice assistants, search, and voice controls becoming part of everyday life. While state-of-the-art AI delivers 95% accuracy, human expertise remains crucial in high-stakes applications.

The integration of HITL workflows, adaptive ASR models, and multimodal contextual understanding represents the cutting-edge approach in 2025. These methods allow AI to continuously learn, adapt, and deliver higher accuracy while preserving privacy and supporting domain-specific needs.