Automating processes that require human judgment presents a paradox: How can we automate systems that need human involvement? The advancement of sophisticated AI systems across high-stakes domains, including healthcare and finance, makes human-in-the-loop (HITL) interactions more essential than ever.

Organizations strive to reduce human involvement while improving operational efficiency and scalability. Developing autonomous systems presents a dual challenge of maintaining safety standards alongside fairness and accountability requirements.

Let’s go over the evolution of HITL systems and the path toward more autonomous, accountable, and intelligent systems. We will discuss reciprocal learning, self-aware AI, design principles, and ethical frameworks to ensure responsible, transparent transitions.

Why We Still Need Humans in Automation

Automation continues to gain strength, but human participation remains vital for several reasons. Machines process data faster than people and excel at pattern-based decision-making. However, today’s systems still cannot match humans at understanding context or exercising emotional intelligence.

Automated systems that run without human supervision often turn small mistakes into major issues, particularly in fields like healthcare, finance, and transportation. For example, a machine might wrongly reject a loan application or give medical advice without understanding the full context. In these cases, human judgment acts as an important safeguard.

The Human-in-the-Loop (HITL) approach is a fundamental tool for controlling AI behavior in challenging environments with unpredictable conditions. It lets systems build real-world expertise by involving humans in their learning and adaptation processes.

Bridging the Gap in Traditional HITL Models

Traditional Human-in-the-Loop (HITL) systems are designed to embed human oversight within automated decision-making processes. These systems place human operators in supervisory roles to validate machine output and implement override functions to achieve responsible and secure results.

In these implementations, humans are responsible for validating decisions made by the systems, rather than being involved in constant, interactive decision-making. The static nature of these systems creates problems because they lack transparency in how decisions are made and can’t learn from human feedback.

At the same time, these systems cannot adjust to changing goals or environments. These limitations become dangerous as AI systems gain deeper integration into critical operations. Systems must incorporate human insight as an active component that functions within the dynamic learning process.

Reciprocal Human-Machine Learning (RHML) establishes a real-time system that allows both humans and machines to adapt simultaneously. RHML transforms HITL from static supervision into a collaborative, evolving partnership that improves safety and performance.

Reciprocal Human-Machine Learning (RHML)

Reciprocal Human-Machine Learning (RHML) offers an iterative framework for human experts and AI systems to learn from each other. Rather than relying only on historical data or static rules, RHML allows AI to observe human decision-making in real time.

The co-learning process between humans and AI systems improves system responsiveness to evolving human needs and operational settings in high-stakes, fast-moving environments.

Additionally, the AI system continuously incorporates feedback into its decision-making through online learning algorithms or human-supervised reinforcement learning. This adaptability allows for personalized, context-aware decisions in dynamic environments.
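To make the idea of learning from feedback concrete, here is a minimal sketch of an online update loop. It assumes a tiny logistic-regression model and a hypothetical stream of reviewer-supplied labels; the class name, feature layout, and learning rate are illustrative choices, not part of any specific RHML implementation.

```python
import math


class OnlineFeedbackModel:
    """Tiny logistic-regression model updated one human correction at a time."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, human_label):
        """One stochastic-gradient step on the log loss for a single labeled case."""
        error = human_label - self.predict_proba(features)
        self.weights = [w + self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias += self.learning_rate * error


model = OnlineFeedbackModel(n_features=2)

# Hypothetical stream of (features, label supplied by a human reviewer).
feedback_stream = [([1.0, 0.0], 1), ([0.0, 1.0], 0)] * 50
for features, human_label in feedback_stream:
    model.update(features, human_label)
```

Each reviewer decision nudges the model immediately, so it adapts without waiting for a full retraining cycle. A production system would likely add safeguards such as validating feedback before applying it.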

Building Self-Aware AI Systems

The automation of HITL systems requires AI to develop practical self-awareness: the ability to quantify its own confidence and uncertainty. A model must recognize when it may be wrong so that human supervision is applied where it is needed and decisions remain well informed.

In fields like medical imaging, where accuracy is crucial, AI models can use techniques like Bayesian neural networks or model ensembles to estimate uncertainty and flag predictions whose confidence falls below a set threshold. This helps human experts see when a model may be wrong or unsure, so they know when to intervene.

These uncertainty estimates also give developers insight into the AI’s decision boundaries. This is particularly crucial in areas like medical diagnoses, legal document classification, and autonomous navigation, where expert review can be vital to ensure accuracy and safety.
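As a rough illustration of ensemble-based uncertainty, the sketch below routes a prediction to human review when ensemble members disagree or when the averaged confidence is low. The function name and both thresholds (`max_std`, `min_confidence`) are assumptions chosen for the example, not recommended clinical values.

```python
from statistics import mean, pstdev


def route_prediction(member_probs, max_std=0.1, min_confidence=0.8):
    """Decide whether an ensemble's prediction can be accepted automatically.

    member_probs: positive-class probabilities from each ensemble member.
    """
    avg = mean(member_probs)
    spread = pstdev(member_probs)        # disagreement between members
    confidence = max(avg, 1.0 - avg)     # confidence in the predicted class
    needs_review = spread > max_std or confidence < min_confidence
    return {
        "label": int(avg >= 0.5),
        "confidence": confidence,
        "spread": spread,
        "route": "human_review" if needs_review else "auto_accept",
    }


agreeing = route_prediction([0.95, 0.93, 0.97])
disagreeing = route_prediction([0.95, 0.40, 0.70])
print(agreeing["route"], disagreeing["route"])
```

The first case is accepted automatically because the members agree and are confident. The second is sent to a reviewer because the members disagree widely, even though one of them is highly confident.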

Design Principles of Human-Centered Automation (HCA)

Intelligent and independent systems require both advanced technology and thoughtful design to achieve effective automation. Human-Centered Automation (HCA) provides essential principles to ensure that these systems effectively support users. Let’s discuss the core principles of HCA:

- Focus on the User: Automation technologies must adopt a person-centered approach by considering user requirements and preferences during the design process. Systems should be designed to support learning and development, enabling users to take on more complex and meaningful tasks.

- Promote Human-Machine Collaboration: Machines and humans should work together to make decisions, while human operators retain ultimate responsibility for all final decisions. This collaboration produces more reliable results than either could achieve alone.

- Transparent and Flexible: Automated systems must explain their functions and decisions clearly. Users should be able to customize and adjust automated solutions to meet different needs and circumstances as organizational demands change.

Utilizing Synthetic Data for Preemptive Learning

Synthetic data generation gives AI systems a broader set of training inputs, which boosts their ability to handle diverse situations. This makes them less prone to failure when they encounter new problems during actual use.

Models can also be stress-tested in controlled environments using synthetic data. This helps teams anticipate and prevent failures before they occur in real-world scenarios, improving system robustness.
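A stress test with synthetic data can be sketched as follows. The example assumes a made-up sensor scenario in which the true rule is "alarm when the value exceeds 50", and `model_under_test` is a stand-in for a trained model; all names and noise levels are hypothetical.

```python
import random


def make_synthetic_cases(n, noise, seed=0):
    """Generate labeled sensor readings; the true rule is 'alarm when value > 50'."""
    rng = random.Random(seed)
    cases = []
    for _ in range(n):
        true_value = rng.uniform(0, 100)
        observed = true_value + rng.gauss(0, noise)  # simulated sensor noise
        cases.append((observed, true_value > 50))
    return cases


def model_under_test(observed):
    """Stand-in for a trained model: a fixed threshold on the observed value."""
    return observed > 50


def failure_rate(cases):
    errors = sum(model_under_test(obs) != label for obs, label in cases)
    return errors / len(cases)


for noise in (0.0, 5.0, 20.0):
    rate = failure_rate(make_synthetic_cases(2000, noise))
    print(f"noise={noise:>4}: failure rate {rate:.3f}")
```

Sweeping the noise level shows how quickly the stand-in model degrades under conditions that would be costly or unsafe to reproduce in the field.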

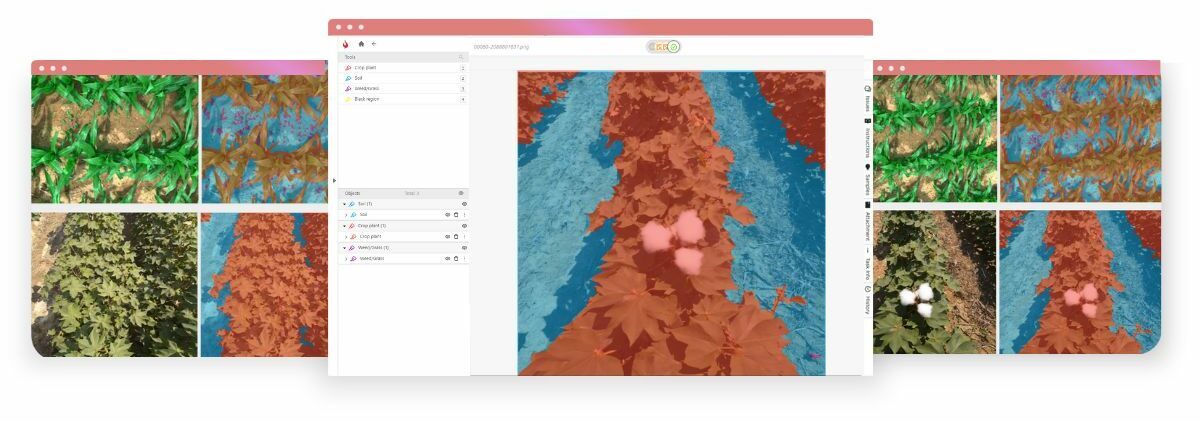

Agricultural synthetic data helps AI models achieve higher precision by allowing them to train across multiple farming environments. Self-driving vehicles also benefit from synthetic data because real-world field testing carries substantial safety hazards and financial costs.

Source: Enhancing AI Precision in Agriculture with Synthetic Data

Transitioning Toward Human-on-the-Loop (HOTL) Models

As automation technology advances, the need for human involvement in fast-paced decision-making and data-intensive systems decreases. Human-on-the-Loop (HOTL) models enable machines to operate autonomously while allowing human operators to supervise and intervene when necessary.

HOTL is particularly valuable for time-sensitive applications, where waiting for human input can cause operational delays or safety risks. These models allow AI systems to function autonomously while ensuring human oversight remains available as a safeguard.

Robust protective measures should be integrated into HOTL systems to guarantee transparency and accountability. The system design must achieve three key attributes:

- Humans need to see how decisions are processed.

- Every system action and outcome must be trackable through audit trails.

- Clear escalation policies must specify the scenarios that require human intervention.

Without these protective measures, HOTL risks losing its oversight function, because automated systems could end up running without proper human supervision.
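The three attributes listed above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `HotlSupervisor` class, made-up escalation rules, and an in-memory audit log; a real deployment would use durable, tamper-resistant storage.

```python
import json
import time


class HotlSupervisor:
    """Lets a system act autonomously, logs every action, and escalates by rule."""

    def __init__(self, escalation_rules):
        self.escalation_rules = escalation_rules  # list of (name, predicate)
        self.audit_log = []

    def handle(self, decision):
        triggered = [name for name, rule in self.escalation_rules if rule(decision)]
        status = "escalated_to_human" if triggered else "executed_autonomously"
        record = {
            "timestamp": time.time(),
            "decision": decision,            # inputs and rationale stay visible
            "triggered_rules": triggered,
            "status": status,
        }
        self.audit_log.append(record)        # every action is traceable
        return status


# Hypothetical escalation policy: low confidence or high financial impact.
rules = [
    ("low_confidence", lambda d: d["confidence"] < 0.9),
    ("high_impact", lambda d: d["amount"] > 10_000),
]
supervisor = HotlSupervisor(rules)
routine = supervisor.handle({"action": "approve", "confidence": 0.97,
                             "amount": 500, "rationale": "matches policy A"})
risky = supervisor.handle({"action": "approve", "confidence": 0.97,
                           "amount": 50_000, "rationale": "matches policy A"})
print(json.dumps(supervisor.audit_log, indent=2))
```

Keeping the escalation rules as named, explicit predicates means the audit log records not just that a human was called in, but why.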

Ethics and Governance in HITL Systems

The increasing capabilities of automation systems necessitate stronger ethical frameworks and more robust governance structures. To be considered acceptable, systems must combine high performance with responsible conduct, transparency, and fairness.

The following principles guide the maintenance of trustworthy Human-in-the-Loop systems that respect human values:

- Accountability Frameworks: In HITL systems, accountability requires clearly assigning responsibility for every decision. This means identifying everyone who develops the AI system and everyone who uses its outputs. A defined framework prevents responsibility from being lost during automation and preserves trust.

- Inverse Accountability Mapping: Individuals or groups can influence AI outcomes without formally accepting responsibility for their effects. The process of inverse accountability mapping uncovers hidden decision-makers to establish transparent decision-making processes.

- Meta-Governance Auditors: Independent meta-governance auditors use automated systems to monitor the ethical behavior of HITL systems. These systems track human-machine interactions to identify violations or abnormal patterns, providing ongoing oversight beyond routine compliance assessments.

- Fairness and Bias Mitigation: AI systems trained on unbalanced or unrepresentative data can unintentionally reinforce social biases. Fairness mechanisms aim to detect and correct these biases so that users are treated equitably.

- Data Privacy and Security: Effective user data protection necessitates robust management practices, transparent consent protocols, and adherence to relevant security regulations. Automation fosters long-term trust by implementing privacy measures that safeguard user data.
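One simple way to make the fairness principle above measurable is to compare outcome rates across groups. The sketch below computes a demographic parity gap on hypothetical loan decisions; the group labels, sample data, and the 0.2 tolerance are illustrative assumptions, not a regulatory standard, and parity is only one of several fairness definitions.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {g: approved[g] / totals[g] for g in totals}


def parity_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions).values()
    return max(rates) - min(rates)


# Hypothetical loan decisions for two illustrative groups.
sample = ([("A", True)] * 8 + [("A", False)] * 2
          + [("B", True)] * 5 + [("B", False)] * 5)
gap = parity_gap(sample)
needs_review = gap > 0.2  # illustrative tolerance only
print(approval_rates(sample), round(gap, 2), needs_review)
```

A gap above the chosen tolerance would be a signal to send the model and its training data to human reviewers, rather than proof of bias on its own.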

Use-Cases of Human-in-the-Loop (HITL) Automation

Let’s go over how HITL principles are applied through real-world case studies.

Case Study 1: iMerit’s Integration of Human Oversight in AI Processes

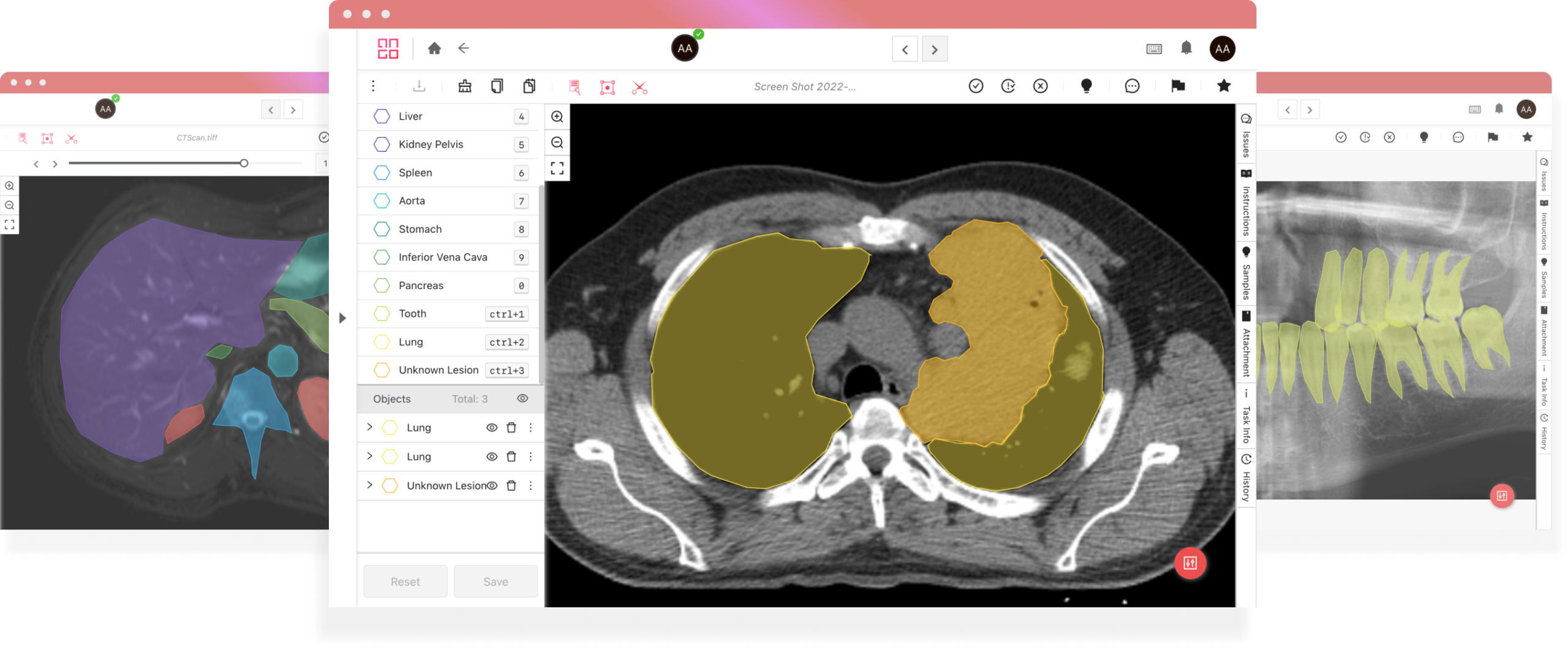

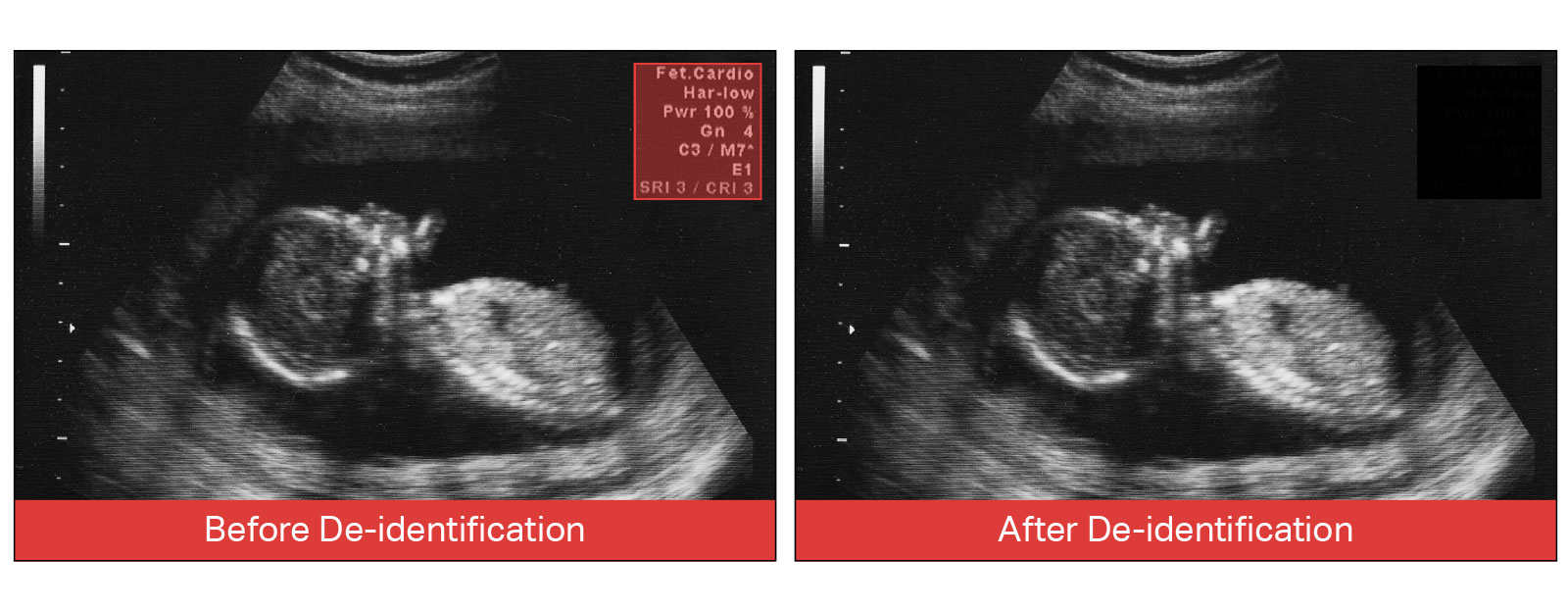

A top-three healthcare provider network wanted to use 20,000 ultrasound video recordings for AI model development. However, these videos contained burned-in patient details, hindering their accessibility. To address this, iMerit customized a de-identification process tailored to the healthcare provider’s unique ultrasound video collection.

The solution integrated Ango Hub’s automation capabilities with human-in-the-loop (HITL) verification to ensure accurate and complete de-identification. It removed all 18 HIPAA identifiers embedded in the scans.

After automated processing, iMerit’s healthcare data specialists conducted a detailed manual review of every video to verify the removal of all 18 HIPAA-protected health identifiers. The de-identification process was backed by strict data security protocols and compliance guidelines, safeguarding sensitive information throughout the workflow.

This combination produced a HIPAA-compliant dataset, which became a valuable resource for developing AI/ML models for disease diagnosis, treatment planning, and improving patient care. iMerit’s broader platform approach also incorporates Reinforcement Learning from Human Feedback (RLHF) methodologies, enabling continuous enhancement of AI systems through expert human evaluations.

Source: De-Identifying Ultrasound Footage

Case Study 2: Enhancing an LLM-Powered Chatbot for a Leading Tech Company

A leading technology company in Asia collaborated with iMerit to enhance the safety and cultural adaptability of its LLM-powered chatbot. iMerit’s team created multilingual prompts by role-playing diverse personas, and the model was trained using Reinforcement Learning from Human Feedback (RLHF).

Prompt-response pairs were ranked based on harmlessness, honesty, and helpfulness. An advanced prompt-generation UI was introduced, streamlining the annotation process with features like search integration and sensitivity coding. As a result, the chatbot now handles complex conversations more responsibly, aligning with human evaluation standards.

Wrapping Up

Automating Human-in-the-Loop systems doesn’t mean eliminating human involvement. Instead, it’s about reshaping the role humans play to better align with technological requirements. The objective is to develop more intelligent, transparent, and flexible systems.

Key Takeaways:

- Human oversight remains important to maintain the safety and fairness of context-aware AI decisions.

- Traditional HITL models are evolving into adaptive, feedback-driven systems through approaches such as RHML.

- Self-aware AI systems possess the ability to detect uncertain situations, which helps determine the need for human supervision.

- Ethical automation requires strong governance to control bias and protect privacy.

- Real-world HITL implementations demonstrate that uniting human expertise with automated systems enhances AI performance.

Organizations working toward this balance often collaborate with experts who contribute human insight to AI development. iMerit supports this work across critical areas.

The iMerit Ango Hub platform implements RLHF by allowing annotators to evaluate model outputs against multiple criteria, including relevance and coherence. These evaluations help train reward models that guide reinforcement learning toward outputs that match human values and expectations.
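To show how annotator evaluations can become a reward signal, here is a minimal sketch that fits one scalar reward per response from pairwise preferences using the Bradley-Terry model. This is a generic illustration, not a description of how Ango Hub works; the function name, sample preferences, and hyperparameters are all assumptions, and real reward models are neural networks that score unseen responses.

```python
import math


def fit_rewards(preferences, n_items, learning_rate=0.1, epochs=500):
    """Fit one scalar reward per response from (winner, loser) index pairs.

    Uses the Bradley-Terry model: P(winner beats loser) = sigmoid(r_w - r_l).
    """
    rewards = [0.0] * n_items
    for _ in range(epochs):
        for winner, loser in preferences:
            diff = rewards[winner] - rewards[loser]
            p_win = 1.0 / (1.0 + math.exp(-diff))
            step = learning_rate * (1.0 - p_win)  # gradient of the log-likelihood
            rewards[winner] += step
            rewards[loser] -= step
    return rewards


# Hypothetical annotator judgments over three candidate responses (0, 1, 2).
preferences = [(0, 1), (0, 2), (1, 2), (0, 1)]
rewards = fit_rewards(preferences, n_items=3)
ranking = sorted(range(3), key=lambda i: rewards[i], reverse=True)
print(ranking)
```

The fitted rewards recover the ordering implied by the annotators' choices, which is the signal a reinforcement learning step would then optimize against.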