The quality of an AI model depends heavily on the data used to train it. While this principle seems straightforward, preparing training data involves complex decisions that can determine project success. Data annotation, the process of labeling raw data to make it machine-readable, sits at the heart of this challenge. The choice between manual and automated annotation directly affects model accuracy, development timelines, and resource allocation. Each method has distinct advantages and limitations that must be weighed against project requirements, budget constraints, and quality standards.

Data Annotation, Explained

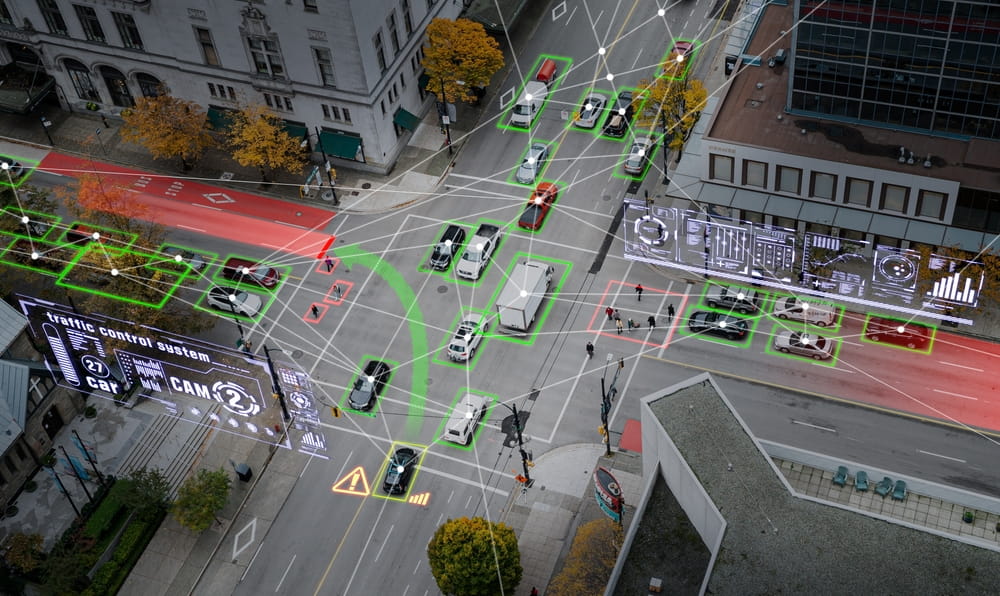

Data annotation transforms raw, unstructured data into structured, labeled datasets that machine learning algorithms can interpret and learn from. It involves human annotators or automated systems identifying and tagging relevant features within data, whether drawing bounding boxes around objects in images, transcribing audio files, or categorizing text documents.

The annotation process varies significantly across different data types. Computer vision projects require annotators to identify objects, segment images, or track movement patterns. Natural language processing tasks involve sentiment analysis, entity recognition, and syntactic parsing. Each annotation type demands specific expertise and quality control measures to ensure the resulting dataset accurately represents the real-world scenarios a model will encounter.

Quality annotation directly correlates with model performance. Inconsistent labeling, missing annotations, or subjective interpretations can introduce bias and reduce model accuracy. The complexity increases when dealing with edge cases, ambiguous data, or domain-specific requirements that demand specialized knowledge.

Pros & Cons of Manual Data Annotation

Superior Accuracy for Complex Data

Human annotators excel at interpreting nuanced, ambiguous, or context-dependent information that automated systems cannot handle. They apply domain expertise to make sophisticated judgments about edge cases, recognize subtle patterns, and provide consistent quality through multiple review layers and consensus mechanisms.

Exceptional Flexibility

Manual annotation adapts instantly to changing requirements, new guidelines, or unexpected data variations. Annotators can creatively handle edge cases, provide valuable feedback on annotation schemas, and adjust to evolving project needs without requiring system retraining or reconfiguration.

Significant Cost and Time Investment

Manual annotation demands substantial human resources, creating higher per-instance costs that can make large-scale projects prohibitively expensive. The sequential nature of human work also creates bottlenecks that extend timelines, which is particularly challenging for datasets requiring millions of annotations.

Limited Scalability

Human annotation capacity faces natural constraints. Recruiting, training, and managing large annotation teams present logistical challenges that can limit project scope. Maintaining consistency across multiple annotators requires extensive coordination and quality assurance processes.

Human Error and Bias Risk

Despite quality controls, human annotators introduce variability through fatigue, subjective interpretations, and cognitive biases. Inter-annotator disagreement can compromise dataset quality, while personal biases may inadvertently skew annotation decisions and model performance.

Pros & Cons of Automated Data Annotation

Rapid Processing and Scalability

Automated systems process massive datasets far faster than human teams can, enabling quick iteration cycles and faster time-to-market. They handle millions of data points in parallel without fatigue, making them well suited to large-scale projects with tight deadlines and continuous data streams.

Cost-Effective at Scale

Once implemented, automated annotation labels additional data at low marginal cost, making large datasets economically viable. The absence of ongoing labor expenses allows resources to be reallocated to other critical development activities and makes it possible to experiment with dataset sizes that were previously cost-prohibitive.

Limited Complexity Handling

Automated annotation struggles with nuanced, context-dependent, or ambiguous data requiring human judgment. Systems may miss subtle patterns, misinterpret edge cases, or fail to adapt to unexpected variations that exceed their programmed capabilities.

Training Data Dependency

The effectiveness of automated annotation depends heavily on the quality of its initial training data. Poor training data propagates errors throughout the entire process, creating a circular problem: producing high-quality annotations automatically requires an existing high-quality annotated dataset.

Reduced Novel Scenario Performance

Automated systems struggle with data significantly different from their training distribution, leading to reduced accuracy in unexpected scenarios. They also lack the adaptability to handle new annotation categories or modified requirements without time-consuming retraining processes.

When to Choose Manual vs Automated Data Annotation

| Factor | Manual Annotation | Automated Annotation |
| --- | --- | --- |
| Data Complexity | High complexity, ambiguous cases, domain-specific requirements | Straightforward, well-defined patterns, standardized formats |
| Quality Requirements | Maximum accuracy needed, tolerance for higher costs | Good accuracy acceptable, cost efficiency prioritized |
| Dataset Size | Small to medium datasets (thousands to hundreds of thousands) | Large datasets (millions of instances) |
| Timeline | Flexible timelines, quality over speed | Tight deadlines, rapid iteration required |
| Budget | Higher budget allocation, quality investment | Cost-constrained projects, scalability focus |
| Domain Expertise | Specialized knowledge required | General annotation tasks, minimal expertise needed |
| Annotation Type | Complex object detection, medical imaging, legal documents | Simple classification, basic object recognition |
| Iteration Frequency | Stable requirements, infrequent changes | Frequent updates, continuous improvement cycles |
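
As a rough illustration of how the table might be applied, the sketch below records, for each factor, which column better describes a project and then tallies the result. The function name `tally_leanings`, the `"tie"` outcome, and the unweighted count are all assumptions made for this example, not a recommended methodology; the sample project is hypothetical.

```python
from collections import Counter

MANUAL = "manual"
AUTOMATED = "automated"


def tally_leanings(answers):
    """Count how many table factors lean toward each approach.

    `answers` maps a factor name (a row of the table) to "manual" or
    "automated", depending on which column better describes the project.
    """
    for factor, choice in answers.items():
        if choice not in (MANUAL, AUTOMATED):
            raise ValueError(
                f"{factor!r}: expected 'manual' or 'automated', got {choice!r}"
            )

    counts = Counter(answers.values())
    manual, automated = counts[MANUAL], counts[AUTOMATED]

    if manual > automated:
        lean = MANUAL
    elif automated > manual:
        lean = AUTOMATED
    else:
        lean = "tie"  # no clear lean; the factors need to be weighed by hand
    return {"manual": manual, "automated": automated, "lean": lean}


# Hypothetical project: a large, simple classification dataset on a tight deadline.
project = {
    "Data Complexity": AUTOMATED,
    "Quality Requirements": MANUAL,
    "Dataset Size": AUTOMATED,
    "Timeline": AUTOMATED,
    "Domain Expertise": AUTOMATED,
}
print(tally_leanings(project))
```

An unweighted count treats every factor as equally important, which is rarely true in practice: a single hard requirement, such as specialized domain expertise, can outweigh several factors that point the other way. The tally is best read as a prompt for discussion rather than a decision.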