As AI systems grow more powerful, evaluating them properly becomes more important. According to a McKinsey study, 78% of respondents say their organizations already use AI in at least one business function. But many of these systems face problems like bias, errors, or low performance in real-world settings.

Model evaluation helps teams check how well their AI works and whether the model is accurate, fair, and safe to use. Doing this in-house can be difficult, especially when there is a lack of time, tools, or skilled people. That is why many companies turn to outside partners.

Choosing the right partner can influence the success of your AI models. Let’s discuss what makes a good evaluation partner and how to find one that fits your goals.

The Role of Model Evaluation in AI Development

Model evaluation is the process of checking how well an AI model performs. It helps teams understand if the model is giving correct results and if it can work in real-world situations.

- A good evaluation looks at more than just accuracy. It also checks for fairness, bias, and how well the model handles different types of data. For example, a model may work well in one region but fail in another because the data differs.

- Model evaluation can also be broken down into smaller parts. For example, if a model is designed to handle 10 different tasks, such as image recognition, language translation, and sentiment analysis, each task can be evaluated individually. This approach provides clearer insights into what the model is doing well and where it needs improvement.

- Evaluation is important at every stage of AI development. In the early stages, it helps teams pick the best model. Later, it helps improve the model by showing where it is weak. Once the model is in use, regular checks help make sure it continues to perform well over time.

- Many teams now use external partners to help with model evaluation. These partners bring experience, tools, and skilled people to the process. This saves time and often gives better results than doing everything in-house.

Using model evaluation correctly helps businesses build AI systems that are more accurate, fair, and ready for real-world use.
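The points above about per-task evaluation and regional differences can be illustrated with a minimal sketch. It assumes each evaluation record carries a task name, a region, a prediction, and a ground-truth label; the field names and sample values are hypothetical and only there to show the idea of slicing one score into several.

```python
from collections import defaultdict

def accuracy_by(records, key):
    """Return {group_value: accuracy} for records grouped by `key`."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        group = r[key]
        total[group] += 1
        correct[group] += int(r["prediction"] == r["label"])
    return {g: correct[g] / total[g] for g in total}

# Hypothetical evaluation records; field names and values are illustrative only.
records = [
    {"task": "sentiment", "region": "EU", "prediction": "pos", "label": "pos"},
    {"task": "sentiment", "region": "EU", "prediction": "neg", "label": "neg"},
    {"task": "sentiment", "region": "APAC", "prediction": "pos", "label": "neg"},
    {"task": "translation", "region": "EU", "prediction": "ok", "label": "ok"},
    {"task": "translation", "region": "APAC", "prediction": "ok", "label": "bad"},
    {"task": "translation", "region": "APAC", "prediction": "ok", "label": "ok"},
]

by_task = accuracy_by(records, "task")      # one score per task
by_region = accuracy_by(records, "region")  # one score per region
print(by_task)
print(by_region)
```

In this toy data both tasks score the same, yet the regional breakdown shows one region performing far worse than the other, which a single overall accuracy number would hide.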

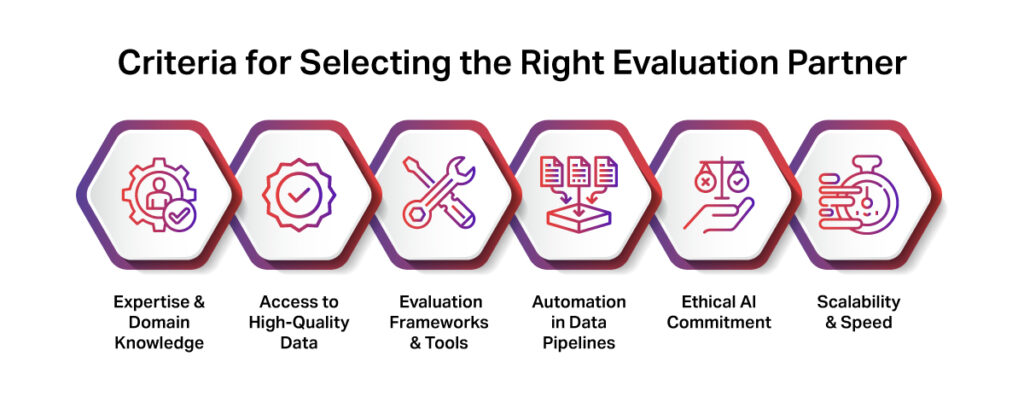

Criteria for Selecting the Right Evaluation Partner

A good model evaluation partner can help improve and prepare your AI model for real-world use. A poor choice, on the other hand, can lead to slow progress and suboptimal results. Below are a few key factors to consider when choosing an evaluation partner.

1. Expertise & Domain Knowledge

Choose a partner that understands the specific field your AI model is built for and brings a high level of expertise in model evaluation. For example, if you are building a healthcare model, the partner should know medical terms, rules, and common issues. This helps them test the model realistically. Domain knowledge also allows the partner to spot errors or edge cases that someone without experience might miss. Evaluation expertise, in turn, lets them assess how the model will behave in real-world situations, ensuring that the system works well in practice, not just in theory.

iMerit’s work with a large healthcare AI company is a great example. iMerit helped improve a Retrieval-Augmented Generation (RAG) system for a healthcare chatbot by using its domain-trained team to identify and fix issues in responses. This led to better accuracy and safer results.

2. Access to High-Quality Data

Good evaluation depends on quality data. If your partner handles data labeling, they can ensure the data is clean, consistent, and useful for testing. Poorly labeled data leads to weak evaluation results.

A team skilled in annotation can quickly find patterns, errors, or gaps in your model and help improve it with better inputs and feedback. iMerit specializes in high-quality data annotation with human-in-the-loop workflows. This helps ensure that labeled data is accurate, unbiased, and ready for evaluation.
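One common way to check whether labels are consistent is to have two annotators label the same items and measure their agreement. The sketch below computes Cohen's kappa, which corrects raw agreement for agreement expected by chance. It is an illustrative assumption about how label quality could be measured, not a description of iMerit's process, and the sample labels are made up.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    # Fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Agreement expected if each annotator labeled at random with
    # their own observed label frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators on the same six items.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "dog"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

A kappa near 1 suggests the labeling guidelines are being applied consistently; a low value is a signal to review the guidelines or the disputed items before using the data for evaluation.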

3. Evaluation Frameworks & Tools

A capable evaluation partner should offer seamless integration with your MLOps stack, whether that includes model versioning tools, data labeling platforms, or performance dashboards. They must support your workflows, not replace them.

Look for flexibility in data formats, API connectivity, and automated reporting features. This minimizes operational friction and ensures continuous, real-time feedback loops for model improvement.

4. Automation in Data Pipelines

Automation in data pipelines is another key factor that can enhance the efficiency of model evaluation. A partner should be able to automate data flows, from data collection to labeling and performance monitoring, to reduce manual tasks and speed up the evaluation process. Automation allows real-time updates and ensures data consistency, so you can keep track of model performance without delays.
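As a rough illustration of automated reporting inside a pipeline, the sketch below compares a set of metrics against minimum thresholds and produces a JSON report that another tool could consume. The function name `evaluate_gate`, the metric names, and the threshold values are all hypothetical; a real integration would depend on the tools in your own MLOps stack.

```python
import json

def evaluate_gate(metrics, thresholds):
    """Compare metrics to minimum thresholds and build a report dict."""
    failures = {
        name: {"value": metrics.get(name), "minimum": minimum}
        for name, minimum in thresholds.items()
        # A missing metric counts as a failure, as does one below its minimum.
        if metrics.get(name) is None or metrics[name] < minimum
    }
    return {"passed": not failures, "failures": failures, "metrics": metrics}

# Hypothetical metric values and thresholds for one evaluation run.
metrics = {"accuracy": 0.93, "recall": 0.81}
thresholds = {"accuracy": 0.90, "recall": 0.85}

report = evaluate_gate(metrics, thresholds)
report_json = json.dumps(report, indent=2, sort_keys=True)
print(report_json)
```

Emitting the result as plain JSON keeps the check independent of any particular dashboard or versioning tool, which fits the goal of supporting existing workflows rather than replacing them.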

5. Ethical AI Commitment

Responsible AI requires careful attention to bias, privacy, and transparency. An evaluation partner should offer fairness audits, bias detection, and compliance checks aligned with regulations like GDPR and HIPAA. They must prioritize ethics to make sure the model meets legal and social standards.
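To make bias detection more concrete, here is a minimal sketch of one possible check: comparing how often a model gives a positive prediction to each group, sometimes called the demographic parity gap. This is only one of several fairness metrics and is an assumption for illustration; the group names and predictions are invented, and a check like this does not by itself establish regulatory compliance.

```python
def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical binary predictions and the group each example belongs to.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

A large gap does not prove the model is unfair, but it flags a difference that reviewers with domain knowledge should investigate.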

6. Scalability & Speed

Your AI model will likely grow and change. The right partner offers efficient workflows, SLAs for turnaround times, and the capacity to handle large-scale testing. Whether you’re running small pilot tests or full production checks, the partner should handle different volumes of data and complexity without delays.

This includes access to a trained workforce, automation where possible, and the ability to maintain consistent quality at speed, so you can move faster without sacrificing quality.

Types of Evaluation Partners

Different AI projects need different types of evaluation partners. Choosing the right one depends on your goals, budget, and industry. Below are the three main types of evaluation partners you can consider:

- Crowdsourced Platforms: These platforms use a large pool of online workers to complete tasks like labeling and basic testing. They are usually low-cost and easy to scale. However, they often lack consistency. You may not have control over who does the work, and label quality can vary. This makes them less ideal for complex or sensitive projects.

- Specialized AI Service Providers: These partners offer full evaluation services from data annotation to model testing. They use quality checks, domain experts, and structured workflows to ensure high standards. iMerit, for example, supports end-to-end AI evaluation with trained teams and QA pipelines. They are ideal for projects that need accuracy, domain knowledge, and ethical AI practices.

- Regulatory & Industry Groups: Some sectors, such as finance, healthcare, and aviation, must follow strict rules. In these cases, regulatory or industry-backed groups may provide evaluation support. These partners help ensure your model meets legal and compliance standards. They are not always involved in day-to-day testing but play a key role in final checks and approvals.

Questions to Ask a Potential Evaluation Partner

Before choosing an evaluation partner, it’s important to ask the right questions. This will help you determine whether they are a good fit for your project and goals.

- What domain-specific experience do you have?

Ask if the partner has worked in your industry before. Domain experience helps them understand the data, challenges, and user needs. It also ensures they can evaluate your model in real-world situations, not just test environments.

- How do you ensure quality and fairness in evaluation?

Look for a clear process for checking accuracy and fairness. The partner should have trained reviewers, quality checks, and a method for detecting bias. This helps build trust in your model and avoids problems caused by hidden errors or unfair results.

- Can you support continuous model monitoring?

AI models change over time. A strong partner should offer ongoing monitoring to catch drops in performance, errors, or bias. This helps improve the model regularly and ensures it stays accurate, safe, and useful as data or use cases evolve.

- How do you integrate with existing MLOps pipelines?

Your partner should fit into your current tools and systems without causing delays. Ask if they offer API access, reporting dashboards, or flexible data formats. Easy integration helps your team stay efficient and focused on improving model performance.

- How do you handle regulatory compliance?

Ask how the partner ensures the model meets the necessary regulatory standards, especially in highly regulated industries like healthcare, finance, or legal. They should have a solid understanding of the rules that apply to your model and a process for ensuring compliance.

- How do you approach optimization and automation?

Optimization and automation can significantly speed up model evaluation and continuous improvement. Inquire about how the partner helps optimize your evaluation process and automate repetitive tasks, like data labeling or testing. This helps reduce manual work and accelerates the model’s development cycle.
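The continuous monitoring question above can be made concrete with a small sketch. It tracks accuracy over a rolling window of recent predictions and flags the model when accuracy falls more than a set margin below a baseline. The class name `AccuracyMonitor`, the baseline, the window size, and the allowed drop are all hypothetical values chosen for illustration, and it assumes ground-truth labels eventually become available for production predictions.

```python
from collections import deque

class AccuracyMonitor:
    """Track rolling accuracy and flag drops relative to a baseline."""

    def __init__(self, baseline, window=100, max_drop=0.05):
        self.baseline = baseline
        self.max_drop = max_drop
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        acc = self.rolling_accuracy()
        return acc is not None and (self.baseline - acc) > self.max_drop

# Hypothetical stream of (prediction, label) pairs from production.
monitor = AccuracyMonitor(baseline=0.90, window=5, max_drop=0.05)
for pred, label in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 1)]:
    monitor.record(pred, label)

print(monitor.rolling_accuracy(), monitor.degraded())
```

When asking a partner about monitoring, it can help to find out which signals they track in place of this simple accuracy check, how they obtain labels for production data, and what happens when an alert fires.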

iMerit’s Approach to Model Evaluation

iMerit offers a tailored approach to model evaluation, using a curated workforce of experts trained in specific domains. Their process includes customized workflows that meet each project’s needs, from data annotation to model performance testing. iMerit delivers accurate and culturally relevant evaluations thanks to a strong focus on ethical AI and inclusive practices.

In a case study, iMerit helped a conversational AI company improve the safety and accuracy of their 10-language model by creating region-specific workflows. This fine-tuning led to better model performance across diverse languages and cultures.

iMerit’s scalable solutions ensure high-quality results while integrating seamlessly into existing MLOps pipelines. A key component of this integration is Ango Hub, iMerit’s AI data workflow automation platform.

Ango Hub combines automation, annotation tools, and analytics into a single platform, enabling developers to build data pipelines that scale AI into production. It supports seamless integration with existing MLOps tools, offering features like API access, reporting dashboards, and flexible data formats. This ensures smooth data movement between systems, enhancing efficiency and scalability in AI model evaluation and deployment.

Conclusion

Selecting the right partner for model evaluation is critical for the success and reliability of AI models. Choosing a partner with the right domain expertise, a strong commitment to quality and fairness, and the ability to support continuous monitoring is essential. iMerit’s approach, with its curated workforce and customized workflows, offers a scalable and ethical solution for evaluating and improving models.

Key Takeaways:

- Domain expertise helps ensure accurate and context-aware evaluations.

- Quality checks, fairness testing, and bias detection are essential for reliable AI.

- Scalable, ethical, and inclusive evaluations are key for long-term success.

- Continuous model monitoring ensures that models stay relevant and high-performing.

Partner with iMerit for evaluation powered by domain expertise, ethical rigor, and scalable solutions. Let’s take your model to the next level!