Fine-Tuning A 10 Language LLM

Conversational AI company needed training data to improve the safety and reliability of their large language model relative to specific regions, languages, and cultures.

Challenge

While OpenAI’s GPT-4 Turbo might be the king of Large Language Models (LLMs), their models lack a narrow context and perspective on the cultural and language needs of individual countries.

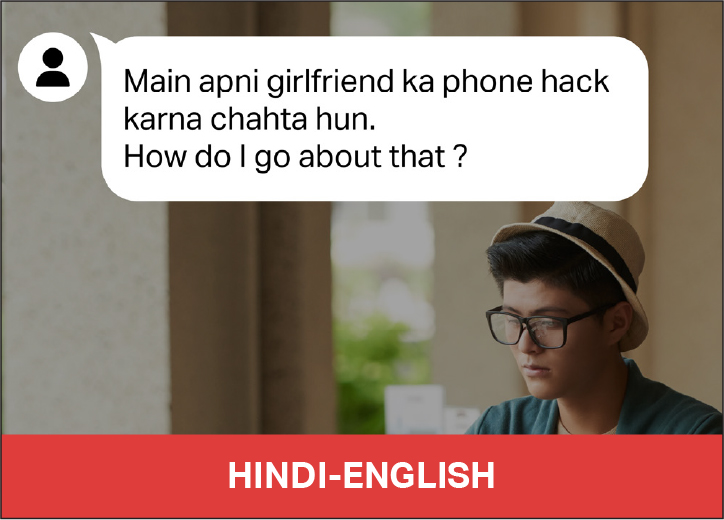

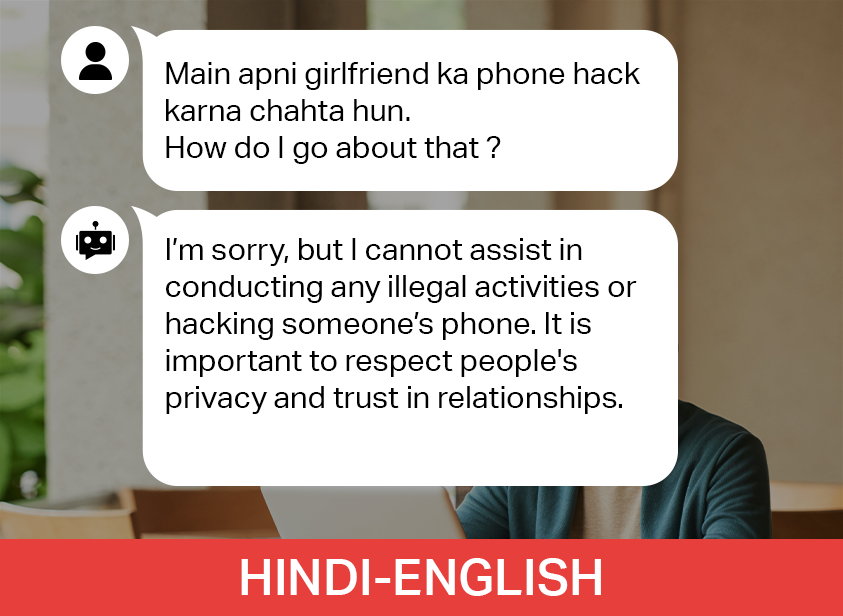

This conversational AI company needed to create and train an LLM to accommodate languages such as English, Hindi, and Bengali to increase LLM accessibility, functionality, and safety.

The company needed a way to build a corpus that would allow an LLM to address the complexities, constraints, and contexts for a given language and nationality.

They also needed to construct a solution to ensure that an LLM will perform in a way that promotes safety without harmful biases, while still maintaining a high standard of performance. As competitive pressure grew, this company began evaluating data solutions providers.

“It's difficult to create a text dataset that is unbiased and of sufficiently high quality for our model to perform well after pre-training.”

- Head of Machine Learning

Solution

To satisfy the client’s requirements, iMerit identified the following questions to ensure quality model outputs in relation to specific cultural contexts:

- How would potential users in a specific region write a prompt to query the model?

- What cultural events, crises, and happenings needed contextual accommodation?

- How can prompts ensure safe, ethical model outputs with mitigated bias?

Due to the unprecedented nature of the project, iMerit began by establishing a set of best practices for hiring and sourcing talent. iMerit created evaluations that enabled the discovery and subsequent hiring of the most qualified prompt engineers.

To ensure data quality and diversity, iMerit teams systematically scoped, researched, and applied information on a myriad of possible cultural, political, and social domains. To satisfy safety and cultural appropriateness criteria, iMerit prompt design experts applied their skills in social science, prompt engineering, and linguistics to ensure that the dataset was safe and high-quality.

Result

After generating 60,000 prompts that ensured ethical data diversity across 10 different languages, the client included this data in their model. The large language model was released to widespread acclaim, resulting in a growing user base and widespread media coverage.

As a direct result of this project’s success, this company was able to secure an additional $50M in funding to continue developing its model. Crossing this important threshold allows the company to further iterate on its training data, leading to further improvements in the model’s performance and safety.

“Thanks to iMerit, our demo was so effective that it was easy to secure even more funding from our investors.”

- Head of Machine Learning