Vision-Language-Action Model for Autonomous Mobility

Improved Vehicular Safety

This major AI company came to iMerit to implement a vision-language-action model to improve model explainability, decision-making transparency, and overall safety.

Challenge

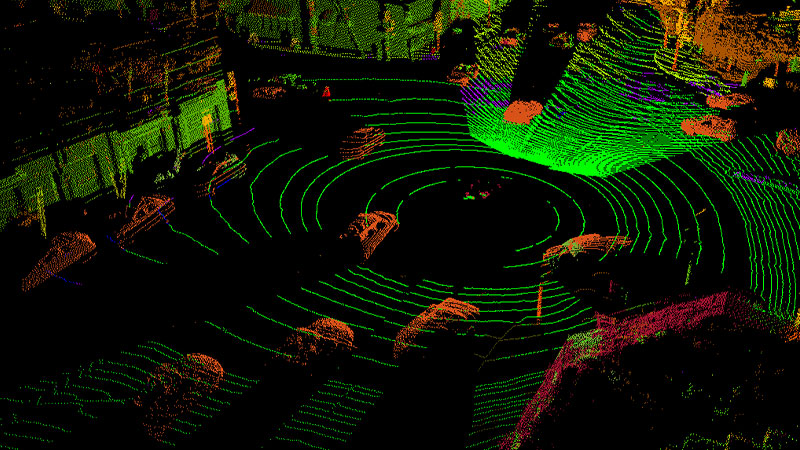

Vision-Language-Action models (VLMs) are bridging the gap between visual and linguistic understanding in artificial intelligence. However, their power hinges on high-quality training data. As an AI-first autonomous vehicle company, this company had invested in state-of-the-art research to apply VLMs across its autonomy stack. To improve model performance on US roads, this company needed a unique dataset with detailed information about human driving styles, rules of the road, and how to interact verbally with passengers.

With a critical demonstration deadline on the horizon, this project was on a tight schedule. To meet this deadline, the company needed annotators who were fluent in English and experienced in annotating data for model training. Its existing data annotation provider had been struggling to maintain the necessary throughput. Realizing it needed a solution quickly, the company began evaluating data service providers.

“We needed curated data from experts who understand the rules of the road as well as what our models need to perform to make our demo a success.”

- Head of Computer Vision

Solution

This company chose to work with iMerit because of its VLM experience and history of providing AI data solutions for autonomous mobility. To kick off the project, this company shared a dataset of both real and synthetic driving scenarios. iMerit autonomous vehicle domain experts were then tasked with assessing the driving conditions in each scenario. Specifically, analysts would assess and classify different features of each scenario, including objects such as traffic signs, brake lights, and crosswalks.

Analysts would also determine whether the scenario contained environmental abnormalities, such as construction zones, collisions, or pedestrians out of place. When such abnormalities were found, the analyst would classify and remove them. This data would then be made available to the VLM so it could communicate its actions more accurately. If confused, the VLM would alert the operator and ask for added guidance.

After analyzing and grading thousands of scenarios, valuable learning instances were distilled into a dataset to further enhance operating performance.

“The data which we received far exceeded our targets for quality and speed, allowing us to further develop our models.”

- Head of Computer Vision

Result

After training the model with iMerit datasets, the client found iMerit’s classifications were 95% accurate. iMerit also successfully improved time-per-task by 50%. The VLM was able to give the vehicle operator more clarity about the vehicle’s actions. These marked improvements in vehicular safety enabled this company to create a demonstration of its vehicle’s performance for investors and customers ahead of schedule.

This collaborative approach advanced the frontier of automotive technology, solidifying this company’s position in shaping a safer and more efficient future on the roads. By combining VLM technology with AV expertise, the team successfully bridged the gap between model decision-making and audio explainability for the operator.