Training perception systems for autonomous vehicles, robotics, and drones takes more than labeling images or point clouds in isolation. Multi-sensor fusion annotation, which combines data from LiDAR, radar, RGB cameras, IMUs, and other sensors, requires platforms that handle time-synced streams, sensor calibration, and 3D visualization.

In this guide, we explore the top tools purpose-built to annotate complex, multi-modal sensor data. These platforms offer everything from fused 3D viewers to ML-assisted workflows, helping you accelerate training data creation while maintaining precision.

Here are the top seven tools for multi-sensor fusion annotation in 2025.

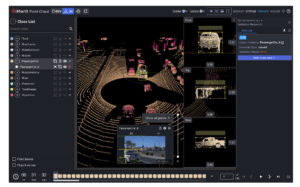

1. Ango Hub by iMerit – Designed for Multimodal Sensor Precision

Ango Hub is one of the few platforms purpose-built for real-world autonomous mobility workflows, where multi-sensor data is the norm. Whether it’s LiDAR-camera fusion, radar overlays, or GPS-aligned trajectory labeling, Ango Hub provides a robust framework for managing and annotating fused datasets.

Key Features:

- Synchronized Multi-Stream Playback: Align LiDAR, RGB camera, radar, and GPS streams with time-coded precision.

- Sensor-Aware Annotation Tools: Label directly in fused views or individually by stream, with real-time updates across modalities.

- Advanced Calibration Support: Visualize and correct sensor offsets, ensuring geometric alignment for accurate labeling.

- Custom Ontologies per Sensor: Define and apply taxonomies specific to each modality or task (e.g., depth objects, motion trails, pedestrian classes).

- QA at Fusion Level: Built-in expert review of fusion accuracy, annotation consistency, and frame continuity.

- Secure, Scalable Deployment: On-premise, hybrid, or cloud deployments tailored to compliance-heavy AV and robotics teams.

- Automation for Sensor Fusion Workflows: Tools for temporal alignment, sensor-aware pre-labeling, and annotation propagation streamline high-complexity pipelines.

- API Integration and Developer Controls: Fusion-aware APIs for pipeline automation, validation workflows, and metadata management.
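To make "time-coded" stream alignment concrete, here is a minimal sketch of nearest-timestamp matching between two sensor streams. It is a generic illustration, not Ango Hub's implementation. The function name `match_nearest`, the millisecond timestamps, and the 20 ms tolerance are all assumptions made for the example.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence


def match_nearest(
    ref_ts: Sequence[int],
    other_ts: Sequence[int],
    tolerance: int,
) -> List[Optional[int]]:
    """For each reference timestamp, return the index of the closest
    timestamp in other_ts, or None if nothing lies within tolerance.

    other_ts must be sorted in ascending order.
    """
    matches: List[Optional[int]] = []
    for t in ref_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbours on either side of the insertion point can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        best = min(candidates, key=lambda j: abs(other_ts[j] - t), default=None)
        if best is not None and abs(other_ts[best] - t) <= tolerance:
            matches.append(best)
        else:
            matches.append(None)
    return matches


# Hypothetical timestamps in milliseconds: a 10 Hz LiDAR and an unevenly timed camera.
lidar_ms = [0, 100, 200]
camera_ms = [10, 40, 110, 330]
pairs = match_nearest(lidar_ms, camera_ms, tolerance=20)
print(pairs)  # [0, 2, None]
```

LiDAR sweeps with no camera frame inside the tolerance come back as `None`, which is usually a signal to skip fused labeling for that frame rather than pair it with stale imagery.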

Why It Stands Out:

Ango Hub combines technical excellence with human-in-the-loop (HITL) services, ensuring that annotations are not only sensor-accurate but also semantically aligned with real-world driving edge cases. It’s an ideal solution for AV companies looking to go beyond object-level labeling and move toward behavioral and contextual understanding across sensors.

2. Deepen AI – Calibration-Aware Labeling for Advanced AV Systems

Deepen AI offers a sensor fusion platform with a strong emphasis on calibration. Its suite includes tools for camera-LiDAR-radar alignment, 3D bounding boxes, segmentation, and time-synced labeling. Designed for automotive-grade projects, it also supports on-prem deployments and regulatory compliance needs.

Key Features:

- LiDAR-camera-radar calibration toolkit

- Frame synchronization and temporal labeling

- Side-by-side and overlay fusion views

- 3D/2D bounding box and segmentation tools

- Time-synced multi-sensor workflows

- On-prem and compliant deployments for AV

- 3D annotation across modalities

Deepen AI is well-suited for AV teams with advanced data pipelines that need a high level of calibration control and quality assurance.
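Calibration matters because fused views depend on projecting LiDAR points into the camera image. The sketch below shows the standard pinhole projection under simplifying assumptions: no lens distortion, a known LiDAR-to-camera rotation and translation, and a camera frame with z pointing forward. The function name `lidar_to_pixel` and all numeric values are hypothetical and are not taken from any vendor's toolkit.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def lidar_to_pixel(
    point: Vec3,
    rotation: Sequence[Sequence[float]],  # 3x3 matrix, LiDAR frame -> camera frame
    translation: Vec3,                    # LiDAR origin expressed in the camera frame
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float]]:
    """Project one LiDAR point to pixel coordinates (u, v).

    Returns None when the point lies behind the camera.
    """
    # Extrinsics: rotate and translate the point into the camera frame.
    xc, yc, zc = (
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if zc <= 0:
        return None  # behind the image plane, not visible
    # Intrinsics: perspective divide, then scale by focal length and shift
    # by the principal point.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


# Hypothetical calibration: sensors aligned, camera 1 m behind the LiDAR along z.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = lidar_to_pixel((1.0, 2.0, 9.0), identity, (0.0, 0.0, 1.0),
                       fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixel)  # (740.0, 560.0)
```

A small error in `rotation` or `translation` shifts every projected point, which is why a 3D cuboid can look correct in the point cloud yet miss the object in the image when calibration is off.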

3. AWS SageMaker Ground Truth – Scalable Labeling in the AWS Ecosystem

SageMaker Ground Truth supports camera and LiDAR data annotation with tools to manage large datasets across distributed teams.

Key Features:

- Native support for 3D cuboid labeling on LiDAR point clouds

- Sensor fusion in 3D point cloud labeling jobs, pairing LiDAR frames with camera images when sensor poses and camera parameters are supplied

- Integration with Amazon S3, SageMaker training jobs, and Ground Truth Plus

- Pre-labeling and active learning workflows

Best for teams already embedded in AWS cloud infrastructure.
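Active learning, in general terms, means sending the frames a model is least sure about to human annotators first. The sketch below ranks frames by prediction entropy, one common uncertainty measure. It is a generic illustration rather than a description of how Ground Truth selects data, and the frame IDs, class probabilities, and function names are made up for the example.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Return the k frame IDs whose predictions are most uncertain."""
    ranked = sorted(
        predictions,
        key=lambda frame_id: entropy(predictions[frame_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical model outputs: class probabilities for one object per frame.
predictions = {
    "frame_001": [0.98, 0.01, 0.01],  # confident, keep the pre-label
    "frame_002": [0.40, 0.35, 0.25],  # very uncertain
    "frame_003": [0.70, 0.20, 0.10],  # somewhat uncertain
}
print(select_for_review(predictions, k=2))  # ['frame_002', 'frame_003']
```

Confident frames keep their machine-generated pre-labels, while uncertain ones go to the human queue, which is where most of the labeling-cost savings come from.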

4. CVAT – Open-Source Support for Fusion Annotation

CVAT (Computer Vision Annotation Tool) is a widely used open-source platform, originally developed at Intel and now maintained by CVAT.ai. While not designed explicitly for sensor fusion, it offers plugins and extensions that support basic fusion workflows.

Key Features:

- Support for 2D/3D annotation via extensions

- Community plugins for LiDAR and radar data

- Custom label schemas and API integration

- Lightweight for academic or early development use

Ideal for smaller teams or research labs looking for flexible, cost-effective tooling.

5. Scale AI – Fusion-First Enterprise Labeling

Scale AI powers fusion pipelines for some of the biggest AV companies. With support for synchronized LiDAR–camera annotation, built-in QA dashboards, and automation, it’s designed for production-scale deployments and developer-centric, multi-sensor workflows.

Key Features:

- Fusion of LiDAR, radar, RGB, and thermal data

- Custom pipelines with QA workflows

- Model-assisted labeling and active learning

- Task types include 3D cuboids, segmentation, and classification

For high-volume AV projects needing programmatic scaling with automation in the loop.

6. Kognic – Safety-Critical Sensor Fusion Workflows

Kognic specializes in sensor fusion annotation for ADAS and autonomous vehicles, offering tools tailored to meet stringent safety, quality, and validation needs. With a focus on precise calibration and high configurability, it’s ideal for enterprise-grade automotive AI pipelines.

Key Features:

- Sensor fusion support with 3D LiDAR, radar, and camera inputs

- Customizable QA pipelines and ontologies

- Tools for ground truth management and scenario coverage

- Built-in compliance features for ISO 26262 and ASIL alignment

- Collaboration features for human-in-the-loop review and feedback

For teams managing cross-functional annotation pipelines with a focus on speed and quality.
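One check a QA pipeline might automate is comparing an annotator's cuboid against a reviewer's reference and flagging low overlap. The sketch below computes 3D intersection-over-union for axis-aligned boxes, a simplification since real cuboids usually carry a yaw angle. The 0.7 threshold and the function names are assumptions for illustration, not Kognic's actual rules.

```python
from typing import Tuple

# Axis-aligned box: (xmin, ymin, zmin, xmax, ymax, zmax) in metres.
Box = Tuple[float, float, float, float, float, float]


def volume(box: Box) -> float:
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned 3D boxes."""
    intersection = 1.0
    for axis in range(3):
        low = max(a[axis], b[axis])
        high = min(a[axis + 3], b[axis + 3])
        if high <= low:
            return 0.0  # no overlap along this axis, so no overlap at all
        intersection *= high - low
    return intersection / (volume(a) + volume(b) - intersection)


def needs_review(annotated: Box, reference: Box, threshold: float = 0.7) -> bool:
    """Flag an annotation whose overlap with the reference falls below threshold."""
    return iou_3d(annotated, reference) < threshold


# Hypothetical pair: the annotator's box is shifted 1 m along x.
reference = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
annotated = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(round(iou_3d(annotated, reference), 3))  # 0.333
print(needs_review(annotated, reference))      # True
```

In practice the threshold would vary by object class and distance, since a 1 m shift matters far more for a pedestrian than for a truck.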

7. SuperAnnotate – Collaborative Annotation for Complex Multi-modal Data

SuperAnnotate is a robust end-to-end data annotation platform designed to handle diverse modalities, including LiDAR, video, and image data. Its emphasis on automation, collaboration, and quality control makes it a solid choice for teams managing multi-sensor fusion projects at scale.

Key Features:

- Multi-modal support for LiDAR, video, and images

- AI-assisted pre-labeling and model integration workflows

- Advanced collaboration tools with role-based access

- Built-in quality management and plugin ecosystem

For teams working on high-stakes, regulation-heavy AI systems requiring rigorous quality control.

Final Thoughts: Fusion Demands Focus

As perception systems evolve, sensor fusion is no longer optional; it’s the backbone of robust AV performance. But annotation platforms must keep up with this complexity, offering precision syncing, multimodal tools, and real-time QA to ensure model reliability.

Ango Hub leads the pack by going beyond basic toolsets. With its fusion-native architecture, human-in-the-loop QA, and real-world deployment flexibility, it’s a trusted partner for teams building the next generation of autonomous systems.