The rapid adoption of Generative Artificial Intelligence (GenAI) is driving companies to accelerate their implementation of AI governance to reduce risk in AI-based workflows. Yet, as AI systems scale, ensuring safety becomes costly due to the complexity of infrastructure and monitoring bottlenecks.

Red-teaming helps address these risks by stress-testing AI models with complex or adversarial prompts to identify weaknesses before they are deployed in production. However, the process produces massive amounts of data, demanding significant human oversight and expert intervention. This need for human expertise makes scaling red-teaming a complicated challenge.

iMerit’s Ango Hub was built to solve this challenge by enabling structured, scalable, and efficient red-teaming workflows. With Ango Hub, organizations can move from ad-hoc testing to systematic evaluations that match the scale of modern generative AI.

The Importance of Red-Teaming in Generative AI

Red-teaming is a method for identifying Gen AI weaknesses before they cause harm. It involves using adversarial prompts, stress tests, and edge cases to see how the model reacts. For example, in 2024, researchers hacked Google’s Gemini and successfully took control of a smart home system. A structured red-teaming process could have flagged this weakness early and shown the need for stronger safeguards.

These failures show that red-teaming for AI systems goes beyond technical checks and plays a key role in ethics and regulation. Governments and regulators now expect organizations to test AI systems for safety and demonstrate compliance.

Effective red-teaming for LLMs and other generative AI systems provides the evidence needed for audits while also helping to build public trust. As generative AI scales, structured and continuous red-teaming becomes an essential safeguard for both enterprises and users.

The Scaling Challenge

As AI models grow larger and more powerful, red-teaming becomes challenging to scale. Here are some of the issues companies face when performing red-teaming at scale.

- Traditional red-teaming for AI is difficult to scale. It relies heavily on human effort, which makes the process slow, costly, and resource-intensive. Teams often work with fragmented tools and lack structured workflows. This makes it hard to reproduce tests or measure results consistently. This creates coverage gaps and limits the reliability of findings.

- The feedback loop is another challenge. When issues are discovered, it can take weeks or months for that feedback to be integrated into model training, slowing down improvements.

- As generative AI models grow larger, their attack surface expands. More parameters, broader training data, and complex interactions mean more potential entry points for misuse.

- The variety of risks also adds to the challenge. A model needs to be tested for bias, misinformation, safety, privacy, and more. Each of these areas requires different prompts, workflows, and expertise. Scaling across all these risk areas without standardized processes quickly becomes unsustainable.

- Tracking and reproducing failures is another barrier. Once a model breaks under a specific prompt, teams need to record it, share it, and test it again after fixes. Without proper systems, these insights can be lost.

- Red-teaming is also resource-intensive. Recruiting skilled testers, annotators, and domain experts requires significant investment that many organizations cannot sustain. The trade-off is that cutting costs often means reduced test coverage or less diverse expertise, which increases the chance of missing critical risks. Additionally, adversarial behavior also evolves rapidly. So tests that were relevant one month may already be outdated the next.

Finally, measuring the impact of red-teaming efforts is challenging, as harmful outputs are often context-specific and may not be revealed during limited testing.

Ango Hub: What It Is and How It Helps

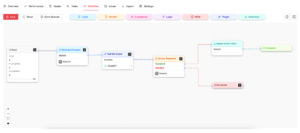

The scaling challenges highlight the need for a structured solution. Ango Hub addresses them by providing a centralized platform where teams can design, run, and track red-teaming campaigns in a consistent and repeatable way.

It is an advanced annotation, labeling, and evaluation platform designed to manage complex AI workflows. For generative AI and large language models (LLMs), Ango Hub provides the structure needed to run large-scale red-teaming programs with precision. Instead of scattered tools and inconsistent methods, teams can work within a unified platform that ensures every test follows defined steps and produces measurable results.

For red-teaming, Ango Hub offers several key features.

- Centralized workflow and pipeline design: Ango allows users to flexibly design workflows, from data ingestion to labeling, QA, and evaluation. So teams follow consistent steps.

- SDK, API, and plugin integration: Ango Hub supports SDKs, APIs, and custom plugins that let teams connect models and external platforms like Snowflake or Databricks directly into their workflows. Models can pre-label data, run automated evaluations, or be accessed through integrations such as the ChatGPT plugin. This setup enables faster inference, real-time evaluation, and continuous feedback within one platform.

- Multimodal support & 3D annotation: Ango handles diverse data formats (text, image, video) and supports 3D point cloud / multi-sensor fusion projects. This is useful when testing generative systems that produce or consume multiple modalities.

- Automation + human-in-the-loop: The platform supports automated accelerators (such as pre-labeling) to reduce manual effort, while human experts and reviewers use the built-in issue mechanism and error codes to flag and track problems consistently across This hybrid approach helps strike a balance between speed and quality.

- Quality control & reproducibility: Ango enables the assignment of multiple annotators per asset, benchmark comparisons, review flows (accept, reject, fix), and guideline uploads to standardize work. All data, results, and revisions are tracked and maintained.

- Deep Reasoning Lab (DRL) for generative AI: Ango Hub includes a Deep Reasoning Lab, which allows experts to interactively test generative AI and LLMs by posing complex, step-by-step scenarios (such as chain-of-thought reasoning), evaluating outputs, and capturing corrections in context, ensuring models are assessed on deeper reasoning tasks rather than just surface-level responses.

Together, these features enable teams to centralize, standardize, and scale their red-teaming workflows, managing adversarial prompt libraries, routing misbehaviors for expert review, and iteratively feed back corrections into model tuning.

Benefits for Organizations

Ango Hub provides organizations with several clear advantages for red-teaming.

- Efficiency and cost reduction: Manual red-teaming is expensive and time-consuming. Ango Hub introduces workflow automation, pre-labeling, and centralized task management. These features speed up evaluations and reduce the need for repeated manual work, cutting both time and cost.

- Quality and consistency: Ango Hub applies standard rules and benchmarks across projects. This reduces variation in results and makes it easier to compare performance over time.

- Scalability across models and domains: It also supports projects involving text, image, video, and multimodal content. This makes it easy to extend red-teaming scenarios without starting from scratch.

- Governance and auditability: Users can also record every test and outcome. This creates a clear trail for internal reviews and external audits. It also helps teams to build trust with stakeholders by showing evidence of systematic testing.

- Faster time to production with fewer risks: It helps companies to refine their models before deployment by catching problems early and incorporating them into workflows. This shortens iteration cycles and makes it safer to bring generative AI systems into production.

Instead of scattered scripts and ad-hoc testing, Ango Hub gives you a disciplined, scalable framework.

How Ango Hub Powers Scalable Red-Teaming

Ango Hub integrates with MLOps and governance pipelines to enable teams to run red-teaming tests more reliably. It supports integrations with platforms like Databricks and Snowflake through its SDK. These integrations enable users to securely transfer data between their core infrastructure and Ango Hub for annotation, evaluation, and feedback.

Ango Hub goes beyond basic automation by letting teams design workflows tailored for red-teaming. Teams can build custom red-teaming workflows or pipelines that define how data is ingested, pre-labeled by models, annotated, reviewed, and then evaluated. For red-teaming, teams can insert adversarial prompts or test cases directly into the pipeline and evaluate model responses in a consistent framework. This turns testing into a repeatable process that can be scaled across different models and scenarios.

It also powers red teaming with multimodal testing. Ango Hub handles not only text but also images, video, audio, document formats, and diverse data types. This is especially important for red-teaming generative models that handle multiple modalities, or when moderation needs to go beyond plain text.

Ango Hub supports feedback loops by enabling evaluation results to flow into dashboards and reporting tools, giving teams visibility into performance trends and error rates. These dashboards display key metrics, including annotator accuracy, edge case volume, reviewer feedback, and error rates. Teams can use these insights to fine-tune models, improve pipelines, and adjust red-team tests.

Here are some use cases showing how red-teaming with Ango Hub can work:

- Prompt injection attack testing: Builds a campaign where adversarial or malicious prompts are fed to the model, produce outputs, and then have experts check where the model is vulnerable.

- Validation of content moderation: Tests how a model responds to hate speech, toxicity, and misinformation. Use multimodal content (text + image) to ensure safeguards work across formats.

- Safety and regulatory compliance: Ensures outputs meet regulatory standards (e.g., for privacy, for non-discrimination), using logged evaluations, human reviews, and metrics for audit trails.

Case Studies: Ango Hub in Action

To see how these principles apply in practice, the following case studies show how red-teaming has been used to test generative AI in high-stakes healthcare settings.

Red-Teaming RAG Healthcare Chatbots

In one project, iMerit used Ango Hub to improve the reliability of retrieval-augmented generation (RAG) chatbots for healthcare. A multi-expert red-teaming initiative was set up to simulate adversarial prompts, biased questions, and misuse of sensitive data. The goal was to identify vulnerabilities in how the chatbot retrieved and generated information.

Using structured workflows, annotators tested the system across multiple risk areas and identified weaknesses that could lead to misinformation or privacy concerns. These findings guided refinements in both retrieval pipelines and safety filters.

Red-Teaming Clinical LLMs

At the 2024 Machine Learning for Healthcare Conference, researchers presented a study showing how red-teaming can expose risks in clinical LLMs. Using adversarial prompts, they uncovered issues such as incorrect medical advice, biased outputs, and unsafe recommendations.

The study highlighted how structured red-teaming AI is critical for stress-testing AI in sensitive domains like healthcare, where even minor errors can have serious consequences.

Practical Red-Teaming with Ango Hub (Hypothetical Workflow)

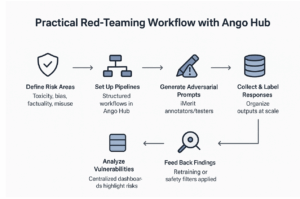

A structured red-teaming workflow in Ango Hub could look like this:

- Define risk categories such as toxicity, bias, factuality, and misuse.

- Deploy pipelines in Ango Hub that guide testing from prompt generation to evaluation.

- Engage distributed iMerit annotators/testers to create adversarial prompts that probe model weaknesses.

- Collect and label responses at scale to ensure outputs are categorized and measurable.

- Analyze vulnerabilities using centralized dashboards that highlight trends and risks, providing a comprehensive view of your security posture.

- Feed findings back into retraining data pipelines or safety filters to strengthen future model performance.

- This approach makes red-teaming systematic, repeatable, and transparent.

The Bigger Picture: Building Trustworthy Generative AI

Red-teaming is an ongoing process that must adapt as models and risks change. Ango Hub makes this easier by combining collaboration, data tracking, and continuous evaluation.

For organizations, this means more than just catching problems. It is about building trust. Customers, partners, and regulators want to know that AI systems are safe and reliable. A platform like Ango Hub helps meet these expectations by making testing structured, transparent, and easy to track.

By embedding red-teaming into the development cycle, companies can release AI models with greater confidence. They can also respond faster when new risks arise. Over time, this creates stronger and more trustworthy AI systems that benefit both businesses and society.

Conclusion

Red-teaming is essential in the generative AI era, where risks like bias, misinformation, and misuse can spread rapidly. iMerit’s Ango Hub addresses this challenge with structured workflows and automation that support large teams. The platform makes red-teaming scalable and repeatable, allowing organizations to test more efficiently, explore broader scenarios, and strengthen their models with confidence.

Key Takeaways:

- Red-teaming ensures AI systems are stress-tested for safety and reliability.

- Scaling these efforts requires structure, automation, and human expertise.

- Ango Hub offers workflow automation, auditability, and multimodal support.

- Enterprises can future-proof their generative AI by adopting platforms like Ango Hub.

Talk to an expert to learn how iMerit Ango Hub can help your team scale red-teaming and ensure safer AI deployments.