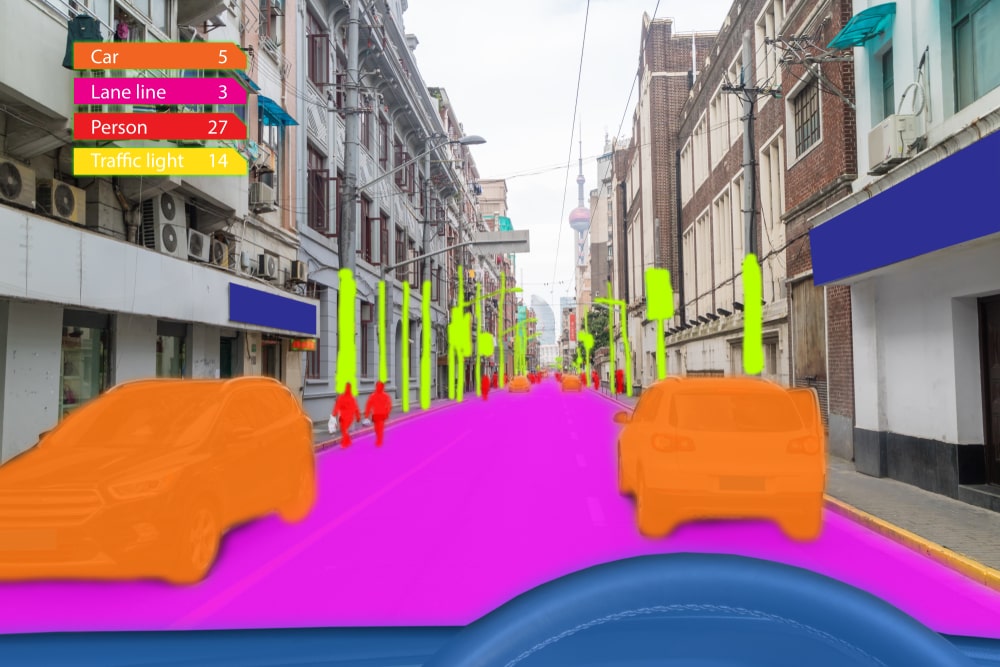

Precision matters when training machines to see the world as we do. Whether teaching autonomous vehicles to navigate traffic or empowering AI to assist radiologists in detecting anomalies, image segmentation plays a foundational role. However, not all segmentation is created equal. The distinction between semantic and instance segmentation isn’t just technical—it directly impacts model performance, scalability, and decision-making.

Understanding the difference between these approaches is important if you’re building or training computer vision systems. Semantic segmentation tells your model what something is; instance segmentation tells it which one. This subtle difference has major implications for how machines process and act on visual data.

Understanding Image Annotation

At the core of computer vision training is image annotation—the process of labeling pixels, objects, or areas within an image to help models learn to identify and distinguish visual elements. Annotation quality directly influences a model’s accuracy and effectiveness. Techniques vary widely depending on the task: bounding boxes, polygons, and segmentation masks each serve different objectives. For image segmentation specifically, annotation gets granular—each pixel is assigned a class or an object label. That level of detail supports nuanced understanding but also requires a smart choice between semantic and instance segmentation.What is Semantic Segmentation?

Semantic segmentation classifies every pixel in an image into a predefined category. In this method, all pixels that belong to a given class—say, “car” or “tree”—are labeled the same, without distinction between separate objects of the same class. So, if there are five cars in an image, semantic segmentation will assign them all the same label: “car.” This is effective for scenarios where the identity of each object doesn’t matter—what’s more important is the presence and coverage of a class within the image. It’s widely used in medical imaging, environmental monitoring, and satellite image analysis, where detailed class-based pixel labeling drives insights.What is Instance Segmentation?

Instance segmentation goes a step further. Like semantic segmentation, it classifies pixels by object class—but it also distinguishes between individual instances of the same class. That means every car in an image isn’t just labeled “car”—they’re labeled “car 1,” “car 2,” and so on. This approach is essential for applications that rely on counting, tracking, or differentiating between objects in close proximity. For example, in autonomous driving, it’s not enough to know that multiple cars are ahead—the vehicle must differentiate between them to avoid collisions and follow traffic dynamics properly. Instance segmentation enables that level of intelligence.Key Differences Between Semantic Segmentation vs Instance Segmentation

| Key Differences | Semantic Segmentation | Instance Segmentation |

| Object Identity Recognition | Labels all objects of the same class identically. Example: All crop fields labeled “agriculture.” | Assigns a unique label to each object. Example: Each field labeled as “field 1,” “field 2,” etc. |

| Use Cases | Best when object count or individual position doesn’t matter. Example: Highlighting total forest area. | Needed when individual object tracking is required. Example: Tracking separate tree clusters or land parcels. |

| Data Complexity | Simpler annotation; less resource-intensive. Example: Labeling all tumor pixels as “tumor.” | Requires detailed labeling of each object. Example: Identifying each tumor separately in medical scans. |

| Model Complexity | Lower computational demands. Example: Suitable for basic classification tasks in controlled settings. | Higher computational load and model sophistication. Example: Differentiating pedestrians in busy traffic scenes. |

| Output Granularity | Classifies pixels without separating instances. Example: All cars in a lot labeled as “car.” | Maintains clear object boundaries. Example: Each car in a lot outlined and counted individually. |