Over 3.6 billion medical imaging procedures are conducted worldwide every year, forming a crucial part of diagnostics. Medical imaging has evolved from X-rays to CT scans. However, certain challenges exist, including longer scan times and low image quality.

Generative AI has the potential to address these challenges in medical imaging. Previous AI models mostly focused on classification and detection. However, generative AI reconstructs images from limited data using Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and diffusion models. This makes generative AI practical for clinical use. This article will discuss how generative AI enhances MRI, CT, and Ultrasound imaging. We will also explore the future of diagnostic imaging and current limitations.

Current Limitations in Medical Imaging

Despite the advances in medical imaging technology, some challenges still need to be addressed. Let’s take a closer look at them:

MRI (Magnetic Resonance Imaging)

MRI provides excellent image quality but has practical limitations that can affect its efficiency and accessibility.

- Long Scan Times: MRI scans typically take 20 to 60 minutes, depending on the protocol and body part. Longer scans can cause patient discomfort and reduce throughput in clinical settings.

- High Costs and Limited Accessibility: MRI systems require substantial financial resources to operate and maintain. This limits their availability in healthcare facilities with limited funding.

- Resolution and Motion Artifacts: The patient’s motion during the MRI process may cause blurring, ringing, and ghosting on the images. These motion-induced artifacts can compromise diagnostic accuracy and may require additional imaging.

CT (Computed Tomography)

CT scans provide rapid, detailed imaging but raise concerns regarding radiation and soft tissue.

- Radiation Exposure: CT uses ionizing radiation, and the dose accumulates when a patient undergoes several scans. According to one projection, radiation exposure from a single year of CT scans could result in more than 100,000 future cancer cases.

- Low-Dose Image Noise: Decreasing the radiation dose can provide safer results, but it produces noisy and grainy images. This makes it difficult to detect small lesions and analyze delicate diagnostic details.

- Poor Soft Tissue Contrast: CT is best at visualizing bone and air-filled spaces but is less able to distinguish between soft tissues. This limits its use for soft tissue evaluation and neurological assessment.
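The link between dose and noise can be illustrated with a small simulation. The sketch below is not taken from any scanner pipeline; it assumes a simplified model in which each X-ray measurement is a Poisson photon count attenuated by the Beer-Lambert law. The function name `simulate_ct_noise`, the photon counts, and the uniform attenuation value are all hypothetical choices made for illustration.

```python
import numpy as np

def simulate_ct_noise(line_integrals, photons_per_ray, rng):
    """Add Poisson counting noise to ideal attenuation line integrals."""
    expected_counts = photons_per_ray * np.exp(-line_integrals)  # Beer-Lambert law
    counts = rng.poisson(expected_counts)
    counts = np.maximum(counts, 1)                               # guard against log(0)
    return -np.log(counts / photons_per_ray)                     # noisy line integrals

rng = np.random.default_rng(0)
ideal = np.full(100_000, 2.0)   # assumed: identical attenuation along every ray

noise = {}
for photons in (100_000, 25_000):   # assumed "full dose" vs. "quarter dose"
    noisy = simulate_ct_noise(ideal, photons, rng)
    noise[photons] = float(np.std(noisy - ideal))

ratio = noise[25_000] / noise[100_000]
print(f"noise at quarter dose is {ratio:.2f}x the noise at full dose")
```

Under this model, cutting the photon count to one quarter roughly doubles the measurement noise, which is why low-dose images look grainy before any enhancement is applied.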

Ultrasound

Ultrasound is readily available, but its diagnostic quality depends on operator skill and is subject to imaging artifacts.

- Operator Dependency: Users with minimal experience tend to create scans that are either incomplete or of poor quality. The inconsistent results between exams diminish both diagnostic reliability and clinical certainty.

- Speckle Noise and Shadowing: Ultrasound images often suffer from two major problems: speckle noise and acoustic shadowing. These artifacts create visual disturbances that can mask important structures such as blood vessels, organs, or tumors and produce false readings.

- Subjective Interpretation: Many ultrasound examination types have no established standard measurement criteria. Because clinicians have differing training levels and experiences, they interpret medical images differently. Subjective interpretation methods increase the likelihood of inconsistent and missed diagnoses.

How Generative AI Addresses These Challenges

Generative AI helps solve these problems by making medical imaging faster, clearer, and less stressful for patients. Let’s see how:

| Challenge Area | Generative AI Solution | Benefits |
|---|---|---|
| Speeding Up Scans | Synthesizes complete medical images from limited input data using anatomical knowledge. | Reduces scan time in MRI and CT, fewer sampling steps or X-ray angles required. |
| Enhancing Image Quality | Uses GANs to denoise ultrasound and improve low-dose CT images. | Enhances clarity, sharpness, and structure visibility. |
| Reducing Invasiveness | Reconstructs high-quality images from low-contrast scans, mimicking the effects of contrast agents. | Reduces radiation and contrast agent use, avoids additional procedures. |
| High-Quality Labeled Data | Utilizes expert-annotated datasets (e.g., via iMerit) to train AI models with precision. | Ensures model accuracy, improves safety, and effectiveness in diagnostics. |

Modality-Specific Innovations of Generative AI in Medical Imaging

Generative AI improves each type of scan in different ways, depending on the imaging technology used. Here are a few examples:

MRI

Acceleration and Reconstruction

AI-based parallel imaging methods learn patterns from fully sampled MRI data to estimate missing k-space information. Compressed sensing techniques further improve reconstruction by exploiting sparsity and prior knowledge to recover images from undersampled inputs.
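To make the idea of "missing k-space information" concrete, here is a minimal sketch of what an undersampled acquisition looks like before any learned reconstruction is applied. It assumes a toy 2D image, a regular 4× sampling pattern with a fully sampled center band, and a plain zero-filled inverse FFT. The helper names `undersample_kspace` and `zero_filled_recon` are illustrative, not part of any MRI library.

```python
import numpy as np

def undersample_kspace(image, acceleration=4, center_lines=8):
    """Keep every `acceleration`-th k-space row plus a fully sampled center band."""
    kspace = np.fft.fftshift(np.fft.fft2(image))   # image -> centered k-space
    n_rows = image.shape[0]
    mask = np.zeros(n_rows, dtype=bool)
    mask[::acceleration] = True                    # regular undersampling
    mid = n_rows // 2
    mask[mid - center_lines // 2 : mid + center_lines // 2] = True  # low frequencies
    return kspace * mask[:, None], mask            # unsampled rows are set to zero

def zero_filled_recon(kspace):
    """Inverse FFT with missing rows left at zero: the aliased baseline."""
    return np.abs(np.fft.ifft2(np.fft.ifftshift(kspace)))

# Toy "anatomy": a bright rectangle on a dark background.
image = np.zeros((64, 64))
image[20:44, 24:40] = 1.0

kspace, mask = undersample_kspace(image)
recon = zero_filled_recon(kspace)
rmse = float(np.sqrt(np.mean((recon - image) ** 2)))
print(f"sampled {mask.sum()} of {mask.size} rows, zero-filled RMSE = {rmse:.3f}")
```

The zero-filled result contains aliasing artifacts. A learned reconstruction model is trained to predict the fully sampled image (or the missing k-space rows) from this kind of input, which is the gap the methods above aim to close.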

Low-Field Image Enhancement

Generative Adversarial Networks (GANs) transform low-field portable MRI scanner images into high-quality results comparable to high-field MRI outputs. This extends MRI availability to locations with limited resources.

CT

Low-Dose Imaging

Generative models such as VAEs, GANs, or diffusion models are trained on high-dose CT image data to learn the statistical distribution of anatomical structures, which they can then use to restore detail in low-dose scans. One notable approach is sparse-view CT reconstruction using learned priors. It uses deep networks such as U-Net (a U-shaped encoder-decoder originally developed for biomedical image segmentation) or Residual Neural Networks (ResNets) to post-process the output of traditional iterative reconstruction.

Artifact Removal

Convolutional Neural Networks (CNNs) and transformer-based models are trained to recognize and remove artifacts in medical images. Metal artifact reduction (MAR) methods train in both the image and sinogram domains to eliminate beam hardening and streak artifacts. Motion artifact correction models use recurrent neural networks (RNNs) to enforce temporal consistency, detecting blur while preserving anatomy.

Ultrasound

Real-Time Enhancement

Denoising and resolution enhancement of ultrasound images are handled by lightweight CNNs designed for real-time inference. Models like Deep Residual Networks (ResNets) are trained to transform low-quality input frames into high-quality frames. Some methods address speckle noise by training with adversarial networks or using frequency-domain losses designed for ultrasound noise.
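The following sketch shows the shape of the denoising problem rather than a learned solution. It assumes a simplified multiplicative Gaussian speckle model (real ultrasound speckle follows different statistics) and swaps in a classical 3×3 mean filter where a trained CNN would sit. All function names are illustrative.

```python
import numpy as np

def add_speckle(image, sigma, rng):
    """Simplified speckle: each pixel is scaled by a random factor around 1."""
    return image * (1.0 + sigma * rng.standard_normal(image.shape))

def box_filter_3x3(image):
    """Classical 3x3 mean filter, used here as a stand-in for a learned denoiser."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    total = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3))
    return total / 9.0

def psnr(reference, estimate):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    mse = np.mean((reference - estimate) ** 2)
    return float(10.0 * np.log10(reference.max() ** 2 / mse))

rng = np.random.default_rng(0)
clean = np.tile(np.linspace(0.2, 1.0, 64), (64, 1))   # toy smooth "tissue" image
noisy = add_speckle(clean, sigma=0.3, rng=rng)
denoised = box_filter_3x3(noisy)

print(f"PSNR noisy: {psnr(clean, noisy):.1f} dB, filtered: {psnr(clean, denoised):.1f} dB")
```

A mean filter only works this well on a smooth toy image because it blurs edges along with the noise. Learned denoisers are attractive precisely because they aim to suppress speckle while keeping anatomical boundaries sharp.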

3D Volume Generation

Generative models, particularly encoder-decoder networks with spatial transformer networks, synthesize 3D volumes from a sequence of 2D images. Based on anatomical knowledge, these models predict spatial correlations between frames and fill in missing volume slices. Methods like GANs have been applied to generate anatomical structures between two sparse 2D scans.
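As a point of reference for "filling in missing volume slices", the simplest possible baseline is linear interpolation between two neighboring 2D slices. The sketch below shows that baseline only; it is not a generative model, and the function name `interpolate_slices` is hypothetical.

```python
import numpy as np

def interpolate_slices(slice_a, slice_b, n_between):
    """Linearly blend two 2D slices, returning both endpoints plus `n_between` slices."""
    weights = np.linspace(0.0, 1.0, n_between + 2)   # 0 -> slice_a, 1 -> slice_b
    return np.stack([(1.0 - w) * slice_a + w * slice_b for w in weights])

slice_a = np.zeros((4, 4))
slice_b = np.ones((4, 4))
volume = interpolate_slices(slice_a, slice_b, n_between=3)

print(volume.shape)      # 5 slices of 4x4 pixels
print(volume[:, 0, 0])   # intensities step evenly from 0 to 1
```

Linear blending cannot account for structures that move or change shape between frames. Generative approaches instead predict plausible anatomy for the missing slices, which is where the learned spatial correlations come in.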

Operator Assistance

Reinforcement learning (RL) agents and supervised CNNs guide the positioning of the probe. Some systems provide real-time feedback by comparing the current view with the “normal” reference view (e.g., the apical four-chamber view of the heart). This helps increase inter-operator consistency and allows for semi-autonomous scanning.

Real-World Applications and Research Projects

Several projects and studies have already shown the influence of generative AI on medical imaging. Let’s discuss a few of them:

- fastMRI: The fastMRI initiative (NYU and Facebook AI) showed that AI can reconstruct knee MRI images from only one-fourth of the usual amount of data, making scans approximately 4× faster. The resulting images were diagnostically equivalent to standard MRI.

- LowGAN: In one study, a GAN called LowGAN converted 64-mT portable MRI scans into synthetic 3T-quality scans. The result was enhanced clarity of brain structures and lesions, approaching that of traditional high-field MRI.

- DiffusionBlend: University of Michigan researchers created DiffusionBlend, a diffusion model that can reconstruct a whole 3D CT image from very few X-ray views. The method yields high-quality CT images at very low radiation doses.

- Virtual Contrast: An Oxford spin-off, AiSentia, developed a generative model that produces virtual contrast-enhanced CT images from non-contrast scans. In trials, the AI’s output highlighted blood vessels and organs without a contrast agent being administered.

- Fetal Imaging: GANs have been applied in prenatal imaging to create realistic fetal ultrasound images to supplement training datasets. For instance, adding GAN-synthesized fetal brain images to a dataset enhanced an AI model’s capacity to classify standard ultrasound planes.

- Ultrasound-to-MRI: Another experimental application is translating ultrasound to another modality. A deep learning model produced MRI-like images from ultrasound scans of the fetal brain to show how AI can generate additional diagnostic views from an initial ultrasound.

Cross-Modality and Future-Looking AI Techniques

Generative AI is improving imaging types and creating new frontiers using cross-modality techniques. Let’s discuss a few of them:

- Modality-to-Modality Translation: Generative models such as CycleGANs can translate images between modalities. For example, they can convert ultrasound images into MRI-like images. This allows clinicians to obtain more comprehensive diagnostic information without putting patients through multiple imaging procedures.

- Multi-Modal Fusion: AI models can integrate information from various scans to create an overall picture. For instance, a system could combine a patient’s CT and MRI data into a single aligned model to ensure nothing is overlooked.

- Anomaly Detection: Cross-modality models may also help with anomaly detection. If the MRI output does not match what the model expects, the system can notify clinicians about a potential problem.

- Synthetic Data for Training Algorithms: Generative AI models, such as GANs and diffusion models, generate synthetic medical images for training. These synthetic datasets address data scarcity and class imbalances in medical imaging. AI-derived synthetic images enrich training data. This helps create more resilient and generalizable diagnostic algorithms.

- Virtual Hybrid Imaging Sensors: Integrating AI with imaging hardware combines data from several imaging modalities in real-time. This provides clinicians with enriched diagnostic information. Such advances improve imaging capabilities without the necessity of new hardware.
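The CycleGAN approach mentioned under modality-to-modality translation rests on a cycle-consistency loss: translating an image to the other modality and back should return the original. The sketch below shows only that loss term, with the adversarial losses omitted. The generators `g`, `f_good`, and `f_bad` are hypothetical arithmetic stand-ins for trained networks, chosen so the result is easy to check by hand.

```python
import numpy as np

def cycle_consistency_loss(x, y, g_xy, f_yx):
    """L1 cycle loss: F(G(x)) should recover x, and G(F(y)) should recover y."""
    forward = np.mean(np.abs(f_yx(g_xy(x)) - x))
    backward = np.mean(np.abs(g_xy(f_yx(y)) - y))
    return float(forward + backward)

# Hypothetical stand-ins for trained generators (e.g. ultrasound -> MRI and back).
g = lambda x: 2.0 * x + 1.0          # "modality X -> modality Y"
f_good = lambda y: (y - 1.0) / 2.0   # exact inverse of g
f_bad = lambda y: y / 2.0            # forgets the offset, so cycles drift

rng = np.random.default_rng(0)
x = rng.random((8, 8))   # toy image from modality X
y = rng.random((8, 8))   # toy image from modality Y

good = cycle_consistency_loss(x, y, g, f_good)
bad = cycle_consistency_loss(x, y, g, f_bad)
print(f"consistent pair: {good:.3f}, inconsistent pair: {bad:.3f}")
```

Because the loss needs no pixel-aligned image pairs, this kind of training can use unpaired scans from each modality, which matters when the same patient was rarely imaged both ways.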

Data Privacy and Ethical Considerations

Several ethical issues must be considered when integrating generative AI into medical imaging.

- Bias and Fairness: Generative models may not work well when exposed to data unlike what they were trained on. According to a report in Nature Medicine, AI algorithms for chest X-ray interpretation perform worse for marginalized groups such as Black female patients. AI models must be trained on diverse and representative datasets to ensure fairness and avoid such biases.

- Privacy: Models can usually create de-identified synthetic images, but it is important to ensure that they do not memorize or reproduce snippets of real images. Rigorous anonymization protocols are required when training and deploying these models.

- Transparency and Safety: Radiologists must know what the AI has done to an image. It is vital to validate that AI processing does not create false findings or remove real ones. Through transparency, patient consent, and validation, we can safely use the benefits of generative AI.

- Using Synthetic vs. Real Imaging Data: AI models can generate synthetic data that augments existing datasets and mitigates data scarcity. This reduces the need to access actual patient records. However, synthetic data carries the risk of producing misleading information that can undermine the reliability of the results.
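One simple way to screen for the memorization risk raised under Privacy is to compare each synthetic image against the training set and flag near-duplicates. The sketch below is a minimal illustration on random toy arrays, assuming raw pixel distance is a meaningful similarity measure. The function name and the 0.05 threshold are arbitrary assumptions, and a real audit would need a more robust similarity metric.

```python
import numpy as np

def nearest_training_distance(synthetic, training):
    """For each synthetic image, RMS pixel distance to its closest training image."""
    syn = synthetic.reshape(len(synthetic), -1)
    trn = training.reshape(len(training), -1)
    diffs = syn[:, None, :] - trn[None, :, :]        # all synthetic/training pairs
    dists = np.sqrt(np.mean(diffs ** 2, axis=2))
    return dists.min(axis=1)

rng = np.random.default_rng(0)
training = rng.random((20, 16, 16))                  # toy "real" images
synthetic = rng.random((5, 16, 16))                  # toy generated images
# Plant a near-copy of a training image to imitate a memorized sample.
synthetic[0] = training[3] + 0.001 * rng.standard_normal((16, 16))

distances = nearest_training_distance(synthetic, training)
flagged = np.flatnonzero(distances < 0.05)           # threshold is an assumption
print("possible memorized samples:", flagged.tolist())
```

A check like this only catches near-exact copies. It would not detect a synthetic image that reproduces a distinctive local region of a real scan, so it complements anonymization protocols rather than replacing them.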

Future Outlook

The future holds even more integrations of generative AI into healthcare and medical imaging. A few examples include:

Real-time AI in Clinics

Imagine an MRI scanner that instantly provides AI-enhanced images or an ultrasound machine that gives the operator AI-driven recommendations as they work. Such instant feedback can make scanning more efficient and reduce repeat scans.

New Imaging Workflows

Generative AI could introduce new workflows, like ultra-fast, low-dose scanning. It may also help enhance images to a diagnostic level. Multi-modal AI could become the new standard. This means a single scan could generate multiple types of reconstructions. For example, an ultrasound might also create a CT-like view.

AI Failure Scenarios and Building Trust

Confidence in clinical practices depends on the reliability of AI tools in medical imaging. Various approaches, including fail-safes, constant monitoring, and user-friendly interfaces, can accomplish this. Another aspect of earning trust is transparency in explaining the system’s strengths and limits.

Role of Interdisciplinary Collaboration in Scaling Adoption

Building an effective AI system requires close collaboration across disciplines. Radiologists and technicians need to be trained to work with AI and to recognize when to trust it and when to verify it. Continuous cooperation among radiologists, engineers, and ethicists will create standards and best practices.

Conclusion

Generative AI will not replace radiologists but will enhance their work. To strengthen healthcare delivery, the aim is to create machines that can understand, explain, and augment human decisions. With proper design, governance, and integration, generative AI can become an effective clinical asset.

Key Takeaways

- Generative AI models like GANs, VAEs, and diffusion networks improve imaging by creating detailed images from low-dose inputs or partial data.

- AI can combine information from MRI, CT, and other imaging to support diagnosis and reduce the number of scans needed.

- AI-generated synthetic data can improve model training by addressing data availability, class imbalance, and privacy concerns.

- Generative AI models should be trained on diverse data and validated before clinical use.

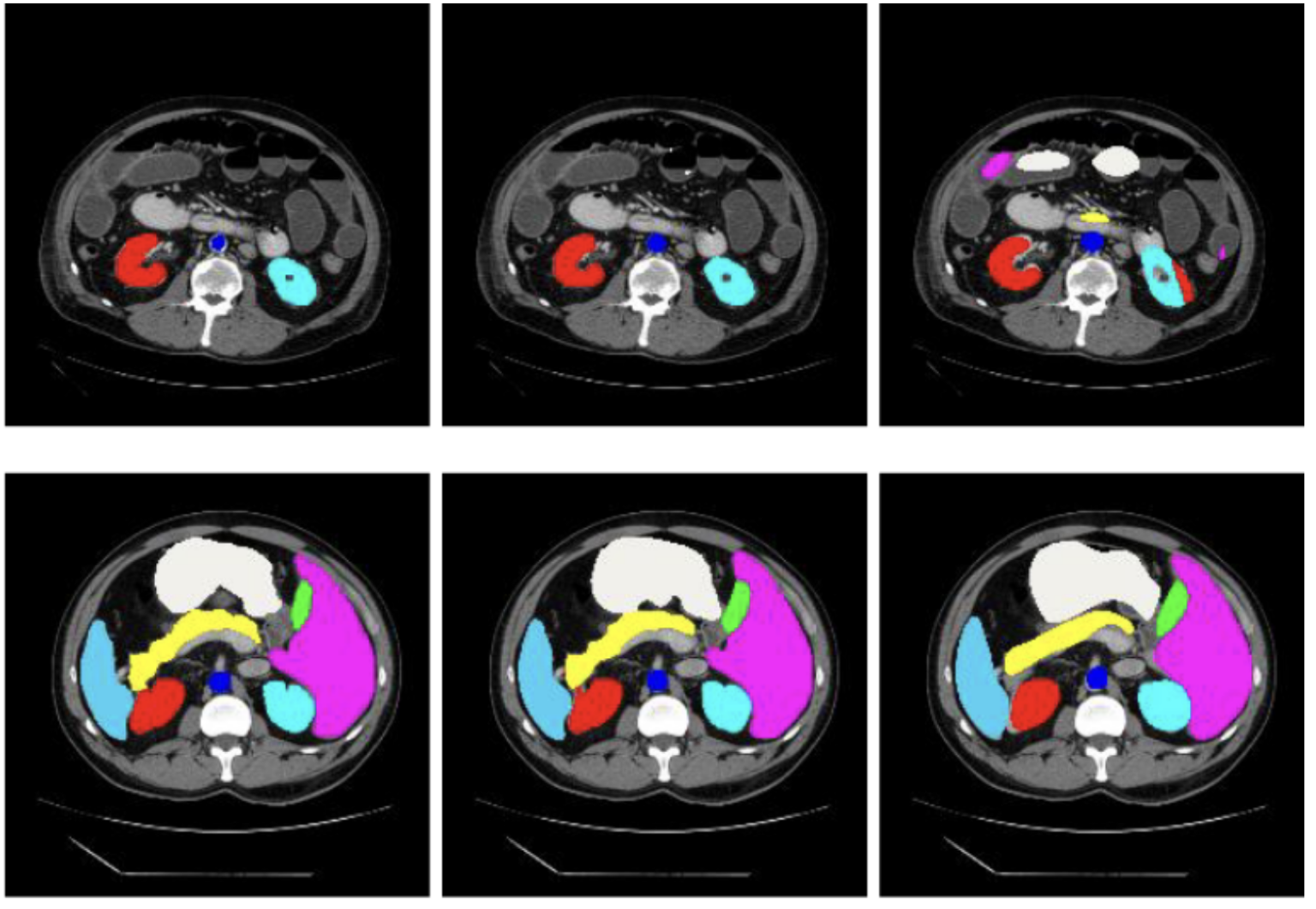

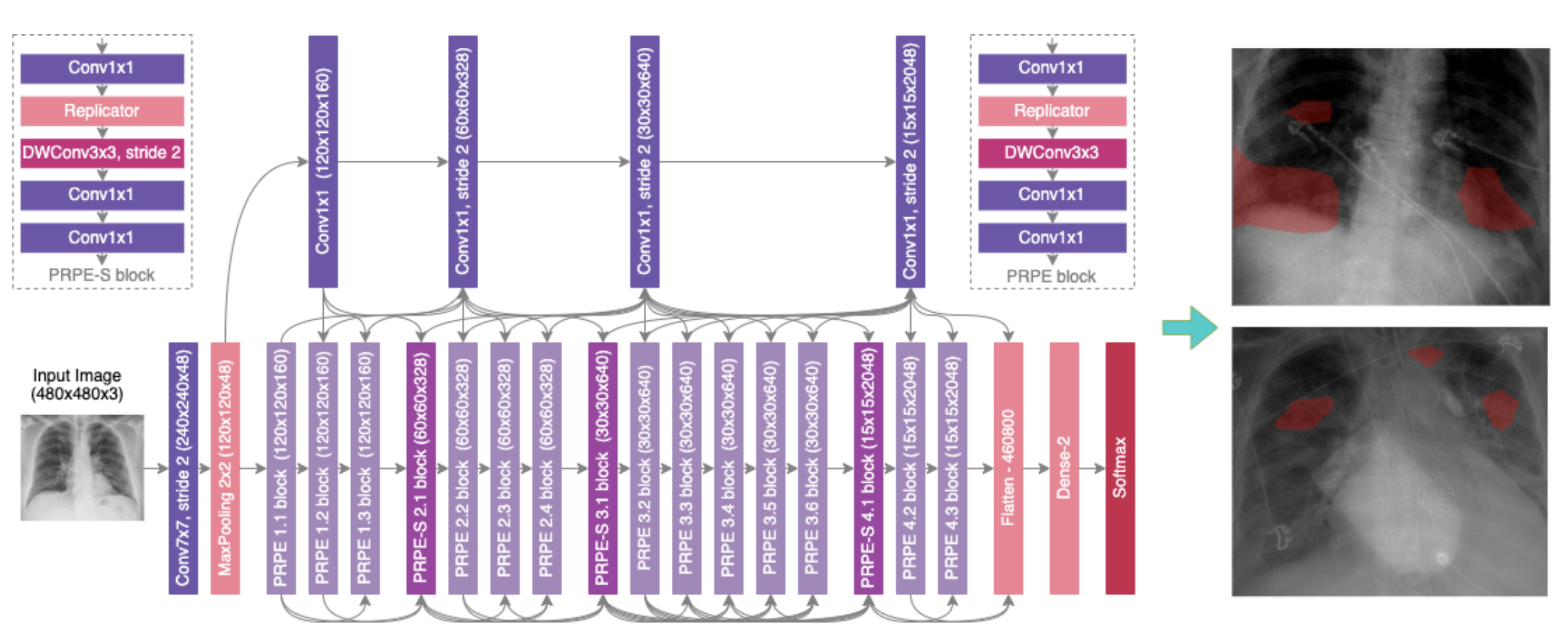

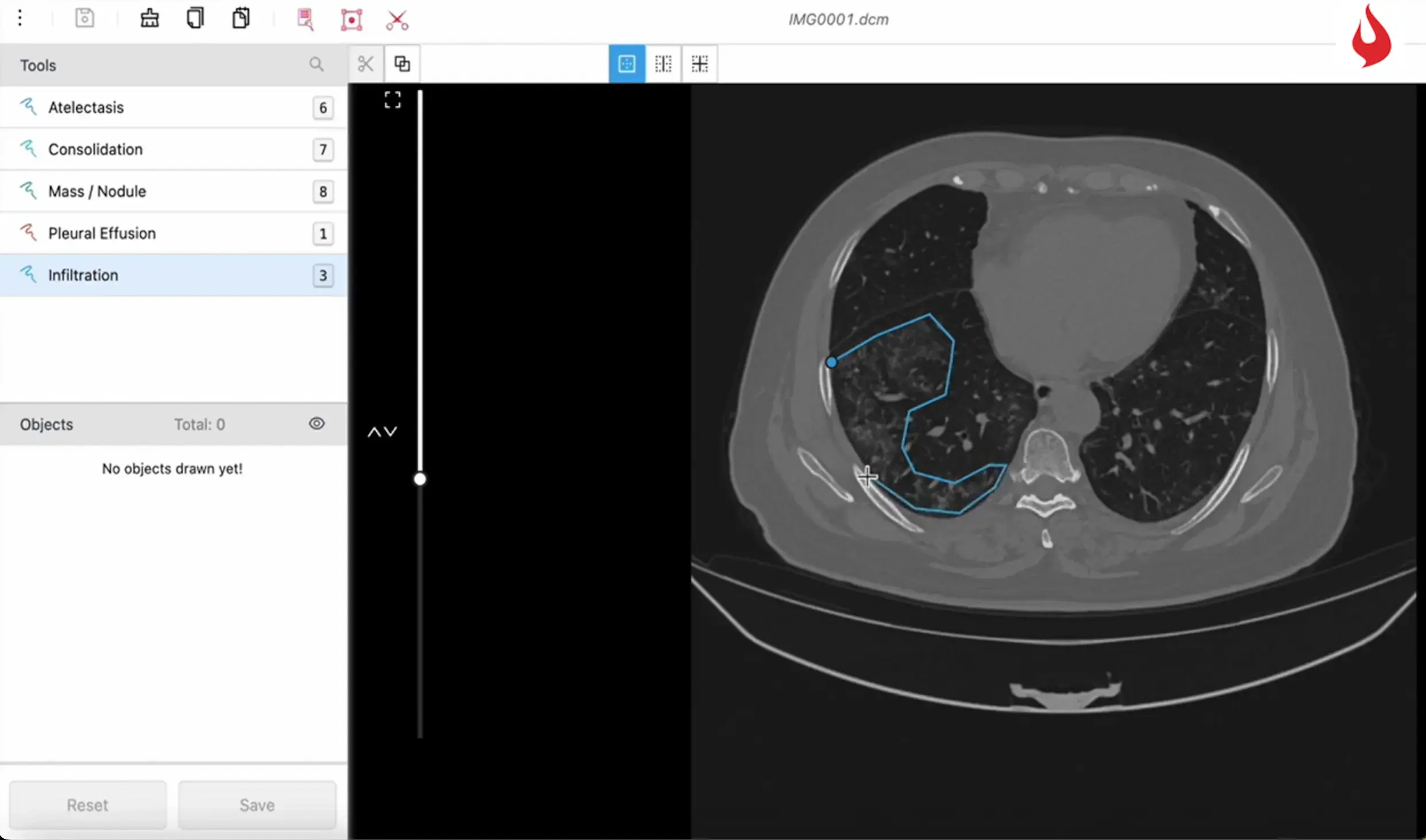

However, generative AI relies on high-quality labeled data to work safely and accurately. iMerit offers the expert-annotated data required for training and testing generative models. iMerit’s Radiology Annotation Product Suite is built on the Ango Hub platform, which includes advanced features such as 3D multiplanar views, magnetic lasso, and level tracing, making annotation easier and more precise.

The platform can read and process DICOM, NIfTI, and TIFF files, making it suitable for handling various types of medical images. iMerit is also designed to align with FDA submission guidelines and follows HIPAA-compliant procedures.

iMerit’s team of medical annotators provides accurate labeling in the formats needed to build reliable AI applications in radiology.