Leading Autonomous Mobility Company partners with iMerit for LiDAR Annotation to build a 3D Perception System

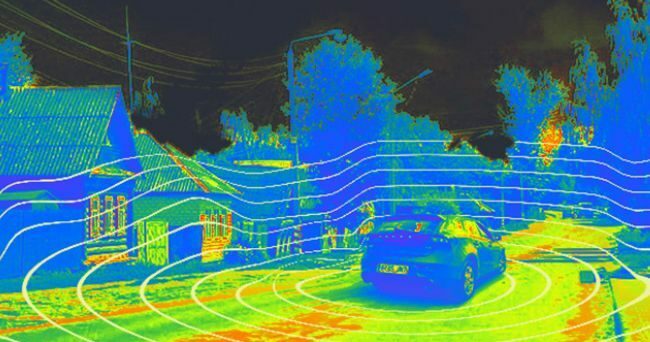

We partnered with this company to support data annotation across 2D images and 3D point clouds. The performance of a 3D perception system depends heavily on data quality, and the company needed targets identified in LiDAR frames, including lane markings, road boundaries, and traffic lights, among others.

With our human-in-the-loop workflows, 3D LiDAR frames were labeled seamlessly and accurately for poles, pedestrians, signs, cars, and barriers.